Difference between revisions of "Main Page"

(update comment for calculation of number of explanations) |

|||

| (4 intermediate revisions by the same user not shown) | |||

| Line 1: | Line 1: | ||

| − | __NOTOC__ | + | __NOTOC__{{DISPLAYTITLE:explain xkcd}} |

| − | {{DISPLAYTITLE:explain xkcd}} | ||

| − | |||

<center> | <center> | ||

| − | < | + | <font size=5px>''Welcome to the '''explain [[xkcd]]''' wiki!''</font> |

We have collaboratively explained [[:Category:Comics|'''{{#expr:{{PAGESINCAT:Comics}}-9}}''' xkcd comics]], | We have collaboratively explained [[:Category:Comics|'''{{#expr:{{PAGESINCAT:Comics}}-9}}''' xkcd comics]], | ||

| Line 12: | Line 10: | ||

remain. '''[[Help:How to add a new comic explanation|Add yours]]''' while there's a chance! | remain. '''[[Help:How to add a new comic explanation|Add yours]]''' while there's a chance! | ||

</center> | </center> | ||

| − | |||

== Latest comic == | == Latest comic == | ||

| − | |||

<div style="border:1px solid grey; background:#eee; padding:1em;"> | <div style="border:1px solid grey; background:#eee; padding:1em;"> | ||

<span style="float:right;">[[{{LATESTCOMIC}}|'''Go to this comic explanation''']]</span> | <span style="float:right;">[[{{LATESTCOMIC}}|'''Go to this comic explanation''']]</span> | ||

| Line 30: | Line 26: | ||

* If you're new to wikis like this, take a look at these help pages describing [[mw:Help:Navigation|how to navigate]] the wiki, and [[mw:Help:Editing pages|how to edit]] pages. | * If you're new to wikis like this, take a look at these help pages describing [[mw:Help:Navigation|how to navigate]] the wiki, and [[mw:Help:Editing pages|how to edit]] pages. | ||

| − | * Discussion about various parts of the wiki is going on at [[Explain XKCD:Community portal]]. | + | * Discussion about various parts of the wiki is going on at [[Explain XKCD:Community portal]]. Share your 2¢! |

* [[List of all comics]] contains a complete table of all xkcd comics so far and the corresponding explanations. The red links ([[like this]]) are missing explanations. Feel free to help out by creating them! [[Help:How to add a new comic explanation|Here's how]]. | * [[List of all comics]] contains a complete table of all xkcd comics so far and the corresponding explanations. The red links ([[like this]]) are missing explanations. Feel free to help out by creating them! [[Help:How to add a new comic explanation|Here's how]]. | ||

== Rules == | == Rules == | ||

| − | Don't be a jerk. | + | Don't be a jerk. There are a lot of comics that don't have set in stone explanations; feel free to put multiple interpretations in the wiki page for each comic. |

If you want to talk about a specific comic, use its discussion page. | If you want to talk about a specific comic, use its discussion page. | ||

Revision as of 05:35, 4 March 2013

Welcome to the explain xkcd wiki!

We have collaboratively explained 6 xkcd comics, and only 2915 (48583%) remain. Add yours while there's a chance!

Latest comic

Explanation

This explanation may be incomplete or incorrect: Created by a TORNADO WORLD CHAMPIONSHIP WINNER - Please change this comment when editing this page. Do NOT delete this tag too soon.

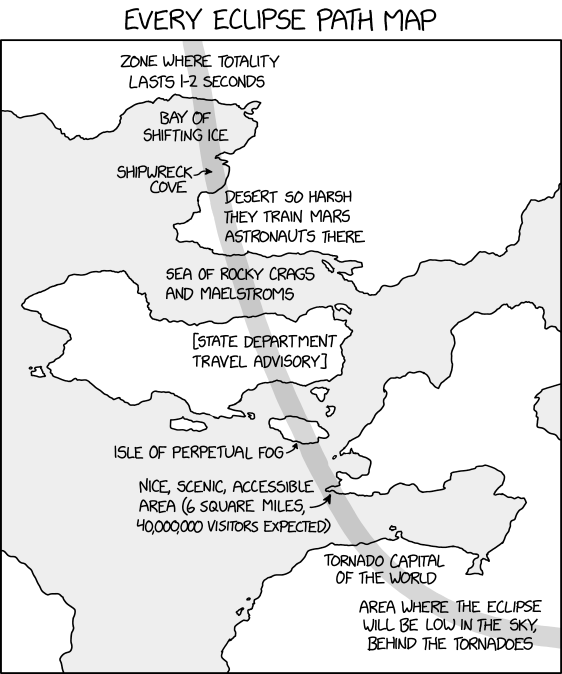

A total solar eclipse occurred on April 8, 2024 in North America, ten days before this comic. This comic comments on the fact that the paths of most solar eclipses seem to cross territories not easily reachable by humans, places with weather conditions that make viewing the eclipse less appealing, such as cloudy skies (mentioned previously in 2915: Eclipse Clouds and 2917: Types of Eclipse Photo), fog, or tornadoes (also a recurring subject on xkcd), or areas that experience only a short period of totality.

| Zone label | Geography | Suitability for observation |
|---|---|---|
| Zone where totality lasts 1-2 seconds | Land | No stated issues for visiting, but the experience is rendered all too brief for astronomical reasons. |
| Bay of shifting ice | Water (part frozen) | Open water might make this location accessible to observers in boats. Solid ice might grant observers ready access by skidoo, ski and/or skid-plane. Shifting ice causes problems for all these modes of access. |
| Shipwreck cove | Water/Coast | The name describes the likely impediment to any boat access. |
| Desert so harsh they train Mars astronauts there | Land (peninsula) | Implied to be inhospitable, and probably lacking any normal transport or accommodation infrastructure. |
| Sea of rocky crags and maelstroms | Water (straits) | Yet more risk of nautical hazards, including strong rotating currents. Possibly a nod to Scylla and Charybdis from The Odyssey. |
| [State department travel advisory] | Island | Unknown risk, but probably involves some form of political instability, war, or major health hazard that makes unnecessary visits highly inadvisable. May also be a result of adverse weather effects. Or perhaps all of these at the same time. |
| Isle of perpetual fog | Island (inc. littoral zones?) | Meteorologically unfortunate (ground visibility; may not fully obscure the skyward view). |
| Nice, scenic, accessible area (6 square miles, 40,000,000 visitors expected) | Land | Apparently ideal in all respects. Except for the crowds. (Would entail up to three people for every square metre, even before accounting for the existing population and obstructions, as well as a high probability of travel congestion.) |
| Tornado capital of the world | Land | Meteorologically unfortunate (frequent disruptive wind vortices, and cloud cover likely). |
| Area where the eclipse will be low in the sky, behind the tornadoes | Land | Astronomically disadvantageous, with added complications from the neighbouring weather system. |
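
The crowding estimate for the "Nice, scenic, accessible area" can be checked with a little arithmetic. The following is a minimal sketch in Python, assuming the international mile (1609.344 m) and assuming the 40,000,000 visitors are spread evenly over the 6 square miles; the function name `people_per_square_metre` is purely illustrative and does not come from the comic or the wiki.

```python
# Metres in one international mile (exact by definition).
METRES_PER_MILE = 1609.344


def people_per_square_metre(visitors: int, area_sq_miles: float) -> float:
    """Return the average crowd density, assuming visitors are spread evenly."""
    area_sq_metres = area_sq_miles * METRES_PER_MILE ** 2
    return visitors / area_sq_metres


# Figures taken from the comic's label: 40,000,000 visitors in 6 square miles.
density = people_per_square_metre(40_000_000, 6)
print(f"{density:.2f} people per square metre")
```

Under these assumptions the density comes out at a little over two and a half people per square metre, before counting existing residents or any obstructions.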

The title text mentions the solar eclipse of February 2063 and claims it will only be visible from the Arctic, though in fact this annular eclipse will cross the Indian Ocean. The eclipse in the comic would supposedly happen while the Sun is below the horizon, which is a contradiction in terms, since an eclipse is only an eclipse from the standpoint of the viewer. It is equivalent to saying that the eclipse is not visible from that location, but is visible from a location over the horizon: the point at the other end of a straight line through the Earth that is directed 'down' towards the unrisen Sun and Moon. The title text then jokingly suggests that a giant chasm could open up between the location being considered and the location from which the eclipse would be visible, allowing people to view it. If this did happen, the chasm itself would likely eclipse the eclipse as a spectacle. In most cases, it would also cause severely detrimental effects (for example, magma eruptions and tsunamis), and would therefore not be considered 'lucky' by most people, despite the small and short-term benefit of being able to view an eclipse from a previously unsuitable location.

Note

The Novaya Zemlya effect can make it possible to observe a solar eclipse at the poles while the Sun is below the horizon, under certain weather conditions. Also called a "polar mirage", the effect occurs when an atmospheric inversion ducts sunlight along the surface of the Earth for distances of up to 250 miles (400 km), which would make the Sun appear 5° higher in the sky than it actually is. This appears to be a rare situation where Randall was unaware of an obscure scientific phenomenon that would contribute to a joke.

Transcript

This transcript is incomplete. Please help edit it! Thanks.

- Every eclipse path map

- [A grey band representing the totality path of an eclipse travels along the map across several labels. Labels along the path from top to bottom:]

- [On land] Zone where totality lasts 1-2 seconds

- [On water] Bay of shifting ice

- [On water] Shipwreck cove

- [On land] Desert so harsh they train Mars astronauts there

- [On water] Sea of rocky crags and maelstroms

- [On an island; label in square brackets] State department travel advisory

- [On an island] Isle of perpetual fog

- [On small part of a peninsula] Nice, scenic, accessible area (6 square miles, 40,000,000 visitors expected)

- [On land] Tornado capital of the world

- [On land] Area where the eclipse will be low in the sky, behind the tornadoes

- [Title Text] Okay, this eclipse will only be visible from the Arctic in February 2063, when the sun is below the horizon, BUT if we get lucky and a gigantic chasm opens in the Earth in just the right spot...


New here?

You can read a brief introduction about this wiki at explain xkcd. Feel free to sign up for an account and contribute to the wiki! We need explanations for comics, characters, themes, memes and everything in between. If it is referenced in an xkcd web comic, it should be here.

- If you're new to wikis like this, take a look at these help pages describing how to navigate the wiki, and how to edit pages.

- Discussion about various parts of the wiki is going on at Explain XKCD:Community portal. Share your 2¢!

- List of all comics contains a complete table of all xkcd comics so far and the corresponding explanations. The red links (like this) are missing explanations. Feel free to help out by creating them! Here's how.

Rules

Don't be a jerk. There are a lot of comics that don't have set in stone explanations; feel free to put multiple interpretations in the wiki page for each comic.

If you want to talk about a specific comic, use its discussion page.

Please only submit material that is directly related to xkcd and helps everyone better understand it, and of course only submit material that can legally be posted (and freely edited). Off-topic or other inappropriate content is subject to removal or modification at admin discretion, and users who repeatedly post such content will be blocked.

If you need assistance from an admin, feel free to leave a message on their personal discussion page. The list of admins is here.