2582: Data Trap


Title text: It's important to make sure your analysis destroys as much information as it produces.

Explanation


This explanation may be incomplete or incorrect: Created by a SURPLUS DATA-CREATING BOT - Please change this comment when editing this page. Do NOT delete this tag too soon. If you can address this issue, please edit the page! Thanks.

The title text proposes an alternative solution: destructive analysis. The method chosen to analyze the data should destroy as much information as it creates, keeping the total amount of data constant. This extends Megan's concern about accumulating a surplus of data: if every analysis destroyed as much data as it produced, the quantity of data would stay in equilibrium. Of course, data doesn't actually have this limitation,[citation needed] and the user can create as much data as is needed or desired.

In the quantum world, information can be neither created nor destroyed; see the no-hiding theorem, for instance.

Transcript

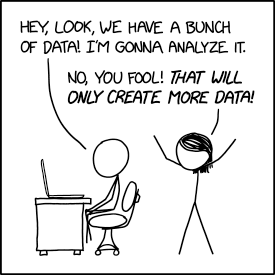

- [Cueball is sitting in an office chair at his desk, hands on his knees, looking at his laptop. Megan stands behind him, with her arms raised to the sides and above her head.]

- Cueball: Hey, look, we have a bunch of data! I'm gonna analyze it.

- Megan: No, you fool! That will only create more data!

Discussion

Created a barebones explanation — please expand & clarify :) Szeth Pancakes (talk) 06:40, 17 February 2022 (UTC)

- I'm sure we could get into black holes (hairy or otherwise) and entropy and/or information-entropy.

- But the feeling I get is that data begets data (by analysis of the original data), and then the analysis plus the original data begs in turn to be meta-analysed, which gives an additional clump of data... trapping everyone in a potential N-meta-analysis loop.

- (For those wondering how the entropy of this system works, it's the additional state info of the successive analysers, like sunlight shining onto the biosphere, that prevents the system going 'stale' and degrading into successively shorter summaries that add nothing. You get to comment upon the prior analysis's choice of trend metric, etc...)

- Well, that's my take, but I wouldn't know how to authoritatively - and succinctly - put that into the explanation. I could be entirely wrong, as well. 172.70.90.173 09:34, 17 February 2022 (UTC)

- You're on to something - it's about information vs data. Cueball wants to analyse the data to get hold of information that's buried somewhere in it. Usually, in terms of bits, that information is only a tiny fraction of the volume of data. Think of gigabytes of data giving rise to an insightful scientific publication that's only a few tens of kilobytes long. Megan seems to think that this is just a few more kB of "data" to be added to the pile, without realising that we've just gone from a jumble of confused bits to actual understanding. Of course, in doing his analysis, Cueball has added some information of his own, to wit an explanation of how he did the analysis and (implicitly) why he chose that tack. Which would make the new pile of data ripe for meta-analysis, as you say. 162.158.233.105 10:27, 17 February 2022 (UTC)

Megan is NOT "Thinking Cueball implies he wants to get rid of some of the data . . . ."! I have NO idea where that implication lies. 172.70.130.153 10:16, 17 February 2022 (UTC)

Done properly, analysis actually reduces the amount of information (not data) that you need to consider--that's the whole point. Rather than trying to comprehend thousands or millions of numbers, you can (for instance) reduce it to an average, or a correlation, or some other single number. Megan is missing the whole point of analyzing data. Nitpicking (talk) 12:49, 17 February 2022 (UTC)

- I think the comic is deliberately ignoring the fact that once you analyze the data, you don't have to think much about the original data. So this reduced information is added to the original information, and data just accumulates without bound. And even when you do stop considering the original data, it's not usually discarded; you keep it in case someone wants to verify your analysis, you want to analyze it in different ways, or you want to combine it with new raw data. Excess data could actually have been a problem in the old days when it was stored on paper -- electronic storage has mitigated this: a single PC can now hold more data than would once have filled warehouses. Barmar (talk) 15:15, 17 February 2022 (UTC)

I fleshed out the explanation, adding explanation for the reason for data analysis and the apparent data equilibrium that's being implied. Thanks also to Kynde for adding some more clarification in that new section. KirbyDude25 (talk) 13:40, 17 February 2022 (UTC)

Pretty sure this comic is another example of literalist perversion of the title. There are many things called Data Traps, but they tend to fall into two categories: collections of data (digital, physical, benign, etc.) where data is being accumulated for retrieval/use, and "gotchas" (i.e. possible mistakes in collecting, handling, or processing data). The comic seems to be a play on the intersection of the two. 108.162.246.62 19:56, 17 February 2022 (UTC)

Similar to https://xkcd.com/2086/ imo. 172.70.35.70 00:28, 18 February 2022 (UTC)

This reminds me of "The sixth sally, or how Trurl and Klapaucius created a demon of the second kind to defeat the pirate Pugg" from Stanisław Lem's Cyberiad (https://en.wikipedia.org/wiki/The_Cyberiad). The protagonists were captured by Pugg, an information-collecting pirate, and escaped by giving him access to all existing information, which stunned the pirate. Tkopec (talk) 11:43, 18 February 2022 (UTC)

- Oh yeah! That's funny, I read that book a few months ago. 172.70.38.69 15:37, 18 February 2022 (UTC)