2560: Confounding Variables


Title text: You can find a perfect correlation if you just control for the residual.

Explanation

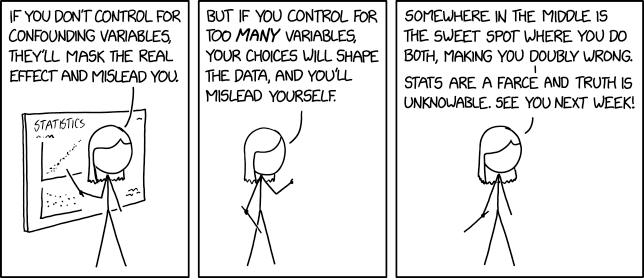

Miss Lenhart is teaching a course which apparently covers at least an overview of statistics.

In statistics, a confounding variable is a third variable that is related to the independent variable and also causally related to the dependent variable. For example, you might see a correlation between sunburn rates and ice cream consumption; the confounding variable is temperature: high temperatures cause people to go out in the sun and get burned more, and also to eat more ice cream.
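The sunburn and ice cream example can be sketched as a small simulation. This is only an illustration under made-up assumptions: the temperature range, the coefficients, the noise levels, and the helper name `pearson` are all hypothetical and not taken from the comic. In the simulated data neither outcome influences the other, yet they still correlate because both depend on temperature.

```python
import random

def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length lists."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    var_x = sum((x - mx) ** 2 for x in xs)
    var_y = sum((y - my) ** 2 for y in ys)
    return cov / (var_x * var_y) ** 0.5

rng = random.Random(2560)  # fixed seed so the run is repeatable

# Hypothetical daily temperatures in degrees Celsius (the confounder).
temperature = [rng.uniform(10, 35) for _ in range(5000)]

# Both outcomes are generated from temperature plus independent noise.
# Sunburn never appears in the ice cream formula, or vice versa.
sunburn = [0.5 * t + rng.gauss(0, 2) for t in temperature]
ice_cream = [0.8 * t + rng.gauss(0, 3) for t in temperature]

r_raw = pearson(sunburn, ice_cream)
print(f"correlation(sunburn, ice cream) = {r_raw:.2f}")
```

The printed correlation is strongly positive even though, by construction, eating ice cream has no effect on sunburn in this toy model.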

One way to control for a confounding variable is to restrict your data set to samples with the same value of the confounding variable. But if you do this too much, your choice of that "same value" can produce results that don't generalize. Common examples of this in medical testing are using subjects of the same sex or race: the results may only be valid for that sex or race, not for all subjects.
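Restriction can be sketched with a toy data set in which temperature drives both sunburn and ice cream consumption. Everything here is a hypothetical illustration: the coefficients, the noise levels, the helper `pearson`, and the choice of the 24 to 25 degree band are assumptions made for the example. Keeping only days within that narrow band removes most of the spurious correlation, but it also throws away most of the data and says nothing about other temperatures.

```python
import random

def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length lists."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    var_x = sum((x - mx) ** 2 for x in xs)
    var_y = sum((y - my) ** 2 for y in ys)
    return cov / (var_x * var_y) ** 0.5

rng = random.Random(42)  # fixed seed so the run is repeatable

# Hypothetical confounder and two outcomes that depend only on it.
temperature = [rng.uniform(10, 35) for _ in range(5000)]
sunburn = [0.5 * t + rng.gauss(0, 2) for t in temperature]
ice_cream = [0.8 * t + rng.gauss(0, 3) for t in temperature]

r_all = pearson(sunburn, ice_cream)

# Control by restriction: keep only days with (nearly) the same temperature.
band = [(s, i) for t, s, i in zip(temperature, sunburn, ice_cream)
        if 24 <= t < 25]
r_band = pearson([s for s, _ in band], [i for _, i in band])

print(f"all days:        n = {len(sunburn)}, r = {r_all:.2f}")
print(f"24-25 degrees C: n = {len(band)}, r = {r_band:.2f}")
```

The restricted subset shows a correlation close to zero, at the cost of a much smaller sample drawn from a single temperature band.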

There can also often be multiple confounding variables. It may be difficult to control for all of them without narrowing down your data-set so much that it's not useful. So you have to choose which variables to control for, and this choice biases your results.

In the final panel, Miss Lenhart suggests a "sweet spot" in the middle, where both the remaining confounding variables and your choice of controls affect the end result, thus making you "doubly wrong". A "doubly wrong" result would simultaneously display wrong correlations (too few controlled variables) and be too narrow to be useful (too many controlled variables): the worst of both worlds.

Finally, she admits that no matter what you do the results will be misleading, so statistics are useless. This would seem to be an unexpected declaration from someone supposedly trying to teach statistics[citation needed] and expecting her students to continue the course. There is, however, a possibility that she is not there purely to teach this subject, but is instead running a course with a different purpose, and this week just happened to conclude with this particular targeted critique.

In the title text, the residual refers to the difference between a particular data point and the value predicted by the fitted line or curve that is supposed to describe the overall relationship. The collection of all residuals is used to determine how well the line fits the data. If you control for this by including a variable that perfectly matches the discrepancies between the predicted and actual outcomes, you would have a perfectly fitting model. However, it is nigh impossible (especially in the social and behavioral sciences) to find a "final variable" that perfectly provides all the "missing pieces" of the prediction model.
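The title-text trick can be sketched in a few lines. This is a toy illustration with made-up data; the helper names `fit_line` and `r_squared` and all numbers are assumptions for the example. A straight line is fitted to noisy data, and then the residual itself is added as an extra "predictor". Because the residual is by definition the part of each outcome that the line missed, adding it back reproduces the data exactly, which is circular rather than informative.

```python
import random

def fit_line(xs, ys):
    """Ordinary least-squares fit of y = intercept + slope * x."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    slope = (sum((x - mx) * (y - my) for x, y in zip(xs, ys))
             / sum((x - mx) ** 2 for x in xs))
    return my - slope * mx, slope

def r_squared(ys, preds):
    """Coefficient of determination of predictions against outcomes."""
    my = sum(ys) / len(ys)
    ss_res = sum((y - p) ** 2 for y, p in zip(ys, preds))
    ss_tot = sum((y - my) ** 2 for y in ys)
    return 1 - ss_res / ss_tot

rng = random.Random(2560)  # fixed seed so the run is repeatable

# Hypothetical noisy linear data.
xs = [rng.uniform(0, 10) for _ in range(200)]
ys = [2.0 * x + 1.0 + rng.gauss(0, 5) for x in xs]

intercept, slope = fit_line(xs, ys)
preds = [intercept + slope * x for x in xs]
residuals = [y - p for y, p in zip(ys, preds)]
r2_plain = r_squared(ys, preds)

# "Control for the residual": add it as a predictor with coefficient 1.
preds_with_residual = [p + e for p, e in zip(preds, residuals)]
r2_cheat = r_squared(ys, preds_with_residual)

print(f"R^2 with x only:         {r2_plain:.3f}")
print(f"R^2 with x and residual: {r2_cheat:.3f}")
```

The second model fits perfectly only because it was handed the answers; the residual cannot be known before the outcomes are, so it is not a real explanatory variable.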

Transcript

- [Miss Lenhart is holding a pointer and pointing at a board with a large heading with some unreadable text beneath it. Below this there are two graphs with scattered points. In the top graph the points are almost on a straight increasing line. In the bottom graph the data points seem to be more random. Miss Lenhart covers most of the right side of the board, but there is more unreadable text to the right of her.]

- Miss Lenhart: If you don't control for confounding variables, they'll mask the real effect and mislead you.

- Heading: Statistics

- [Miss Lenhart is holding the pointer down in one hand while she holds a finger in the air with the other hand. The board is no longer shown.]

- Miss Lenhart: But if you control for too many variables, your choices will shape the data and you'll mislead yourself.

- [Miss Lenhart is holding both arms down, still with the pointer in her hand.]

- Miss Lenhart: Somewhere in the middle is the sweet spot where you do both, making you doubly wrong.

- Miss Lenhart: Stats are a farce and truth is unknowable. See you next week!

Discussion

This is the earliest in the day that I can recall a comic being published in a long time. It's not even noon ET yet. Barmar (talk) 16:39, 27 December 2021 (UTC)

This explanation will not be complete until someone explains the title text. I assume controlling for the residual will lead to a null result, but I don't know statistics well enough to know. 172.70.110.171 17:10, 27 December 2021 (UTC)

"Stats are a farce and the truth is unknowable." She jests, but... 172.70.178.25 18:31, 27 December 2021 (UTC)

- Many people have made similar observations over the centuries [1] Seebert (talk) 16:31, 28 December 2021 (UTC)

- Stats provide answers, but not questions - that's what my professor used to say 141.101.104.14 12:04, 30 December 2021 (UTC)

"(Why was the word "remit" used and why was it linked to Mythbusters? Both were unnecessarily confusing.)"... Well, "remit" ≈ "purpose" as I used it (Wiktionary, Noun section, item 1: "(chiefly Britain) Terms of reference; set of responsibilities; scope. quotations" - seeing that it's apparently British, and the remit scope of most of the verb forms, I now understand your confusion). And the suggestion was that this was not a (bad/offputting?) Statistics course but a course that "mythbusted" a range of subjects, somewhat like Every Major's Terrible, that we catch just as Statistics in particular gets a page or so of short shrift about. 172.70.85.73 23:01, 29 December 2021 (UTC)

Her argument is exceedingly weak. If you simply want to note a correlation, there's no need to control for any confounding variables. If, on the other hand, you want to prove a causative relationship, there is simply no way to do that by controlling for any set of potentially confounding variables - but contrary to her assertion, this doesn't mean that stats are a farce and the truth is unknowable, it just means that a causative relationship can only be established experimentally. To establish a causative relationship, you don't need to control for any confounding variables, you just randomize. Example in a clinical trial, patients are randomly assigned to the treatment group or the placebo group. There's no need to control for anything because random chance would distribute patients with any potential confounding variable throughout both groups. You use appropriate statistical significance tests to make sure that this effect of random chance is sufficient to be reasonably sure of the results, and you have the truth. The argument this individual is making is based on misunderstandings of statistical principles prevalent in popular culture. 172.68.174.150 18:46, 30 August 2023 (UTC)

- Her assertion is bad, but that's the humour of the comic.

- Meanwhile, a clinical trial 'deconfounds' by careful balancing of those accepted into the study (and enough in the cohorts to make randomised active/control/etc splitting unlikely to create unbalance in comparison), enough to make it not significantly possible that chance differences in each group can explain any effect seen.

- But a big problem (not really dealt with above) has been that confounding variables have been squashed by, e.g., not enrolling women in a study because of the complication of their monthly biological cycles interacting with the treatment... leaving the study biased by only being analysed in the context of male endocrine systems.

- Then there's also the tendency for Phase I ('first in human') to have ended up with overwhelmingly young, male and (often, given the catchment advertised to) white volunteers, which colours how it progresses onwards into the therapeutic use phases (for which the demographic should be more self-balancing than merely looking for students who are cash-poor but relatively time rich, in the worst cases), but this will all vary with how many past pitfalls have been identified and learnt from.

- Of course, the tutor here isn't looking at purely pharmacological stats (that's not even hinted at), and the careful split of (preaccumulated) data into training and testing sets is perhaps the best way to emulate the same A/B or A/control testing regimes when you aren't able to allocate a different 'treatment' in advance of the data collection. For which you maybe need to have twice as many datapoints as you might if you were happy to just effect-fish within the entire original dataset.

- ...but stats is complicated. Easy to overuse ("lies, damn lies and statistics"), easy to dismiss (as parodied here). A mere few paragraphs of explanation is always going to be lacking in nuance (I'd prefer to add much more detail to this reply, ideally, but it'd probably be unreadable), and the portion of comic-monologue we see is deliberately skimming over all the issues.

- Plus we don't get to see the supplementary materials, or exactly what her diagrams are, nor anything of whatever lecture led up to this short summary, e.g. in what context she speaks (statistical theology?) Which doesn't really matter, in the context of the joke, and wouldn't help it anyway. 172.71.242.77 21:04, 30 August 2023 (UTC)
