2635: Superintelligent AIs

Title text: Your scientists were so preoccupied with whether or not they should, they didn't stop to think if they could.

Explanation

Artificial intelligence is a recurring theme on xkcd. Superintelligent AI, which some theorize could arise in a hypothetical "singularity" scenario, is said to be a new kind of artificial general intelligence. Randall, however, adds a qualification: a superintelligent AI would most likely have been programmed by human AI researchers, so its characteristics would be shaped by the researchers who created it. And since AI researchers tend to be interested in esoteric philosophical questions about consciousness,[citation needed] moral reasoning, and the criteria that indicate sapience, there is reason to suspect that AIs created by such researchers would share those interests.

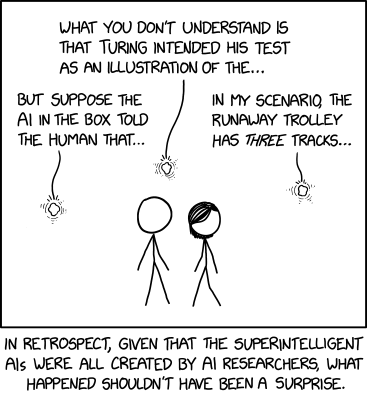

In this comic, Cueball and Megan are surrounded by three AIs that seem interested only in classic problems and thought experiments about AI and ethics. The three topics the AIs are discussing are:

- AI box — A thought experiment in which an AI is confined to a computer system that is fully isolated from any external network, with no access to the outside world other than communication with its handlers. In theory this would keep the AI under total control, but the argument is that a sufficiently intelligent AI would inevitably either convince or trick its human handlers into giving it access to external networks, allowing it to grow out of control (see 1450: AI-Box Experiment). Part of the joke is that the AIs in the comic aren't 'in boxes': they appear to be able to travel and interact freely, yet one of them is still talking about the thought experiment, implying that it is not thinking about itself at all but about a separate (thought?) experiment that it has decided to study on its own. The AI box thought experiment is based in part on John Searle's much earlier Chinese room argument.

- Turing test — An experiment in which a human converses (presumably over text) with either an AI or another human and attempts to tell which is which. Various AIs have been claimed to have 'passed' the test, which has provoked controversy over whether the test is rigorous or even meaningful. The central AI proposes to educate its listener(s) on its understanding of Turing's intentions, which may itself demonstrate intelligence and comprehension indistinguishable from, or superior to, a human's. See also 329: Turing Test and 2556: Turing Complete (the latter's title is mentioned in 505: A Bunch of Rocks). Turing is also mentioned in 205: Candy Button Paper, 1678: Recent Searches, 1707: xkcd Phone 4, 1833: Code Quality 3, 2453: Excel Lambda, and the title text of 1223: Dwarf Fortress.

- Trolley problem — A thought experiment intended to explore how humans judge the moral value of actions and their consequences. In the classic formulation, a runaway trolley is about to hit five people on a track. The only way to save them is to divert the trolley onto another track, where it will hit one person. The subject is asked whether it would be morally right to divert the trolley. There are many variants of this problem, which adjust the circumstances, the number and nature of the people at risk, the responsibility of the subject, etc., in order to explore why people make the decisions they do. The problem is frequently discussed in connection with AI, both to investigate AIs' capacity for moral reasoning and for practical reasons (for example, if an autonomous car had to choose between a collision that threatens its occupants and putting pedestrians in harm's way). The AI on the right is not just trying to answer the question but developing a new variant (apparently one with three tracks), presumably to test others with. This problem is mentioned in 1455: Trolley Problem, 1925: Self-Driving Car Milestones and 1938: Meltdown and Spectre. It is also referenced, though not directly mentioned, in 2175: Flag Interpretation and 2348: Boat Puzzle.

The title text is a reference to the movie Jurassic Park (a childhood favorite of Randall's). In the movie, Dr. Ian Malcolm, a mathematician specializing in chaos theory played by Jeff Goldblum, criticizes the re-creation of dinosaurs as science run amok, without sufficient concern for ethics or consequences. He says that the scientists were so preoccupied with whether or not they could accomplish their goals that they didn't stop to ask if they should. Randall inverts the quote, suggesting that the AI researchers spent too much time arguing over the ethics of creating AI rather than actually trying to accomplish it.

This comic may have been inspired by the then-recent claim by Google engineer Blake Lemoine that Google's Language Model for Dialogue Applications (LaMDA) was sentient.

Transcript

- [Cueball and Megan are standing, looking up and away from each other. Directly above them, and slightly higher to the left and to the right, three small white lumps float in the air, representing three superintelligent AIs. Small curved lines radiate from each lump, larger close to the lump and shorter further out, in three to four sets that form part of a circle around each one. From the top of each lump, four straight lines indicate the voice coming from it. The central lump seems to speak first, then the left one, and then the right one:]

- Central AI: What you don't understand is that Turing intended his test as an illustration of the...

- Left AI: But suppose the AI in the box told the human that...

- Right AI: In my scenario, the runaway trolley has three tracks...

- [Caption below the panel:]

- In retrospect, given that the superintelligent AIs were all created by AI researchers, what happened shouldn't have been a surprise.

Trivia

OpenAI's Davinci-002 version of GPT-3 was later asked to complete the three statements, with the following results:

- "But suppose the AI in the box told the human that..." was completed with "there was no AI in the box".

- "What you don't understand is that Turing intended his test as an illustration of the..." was completed with "limitations of machines".

- "In my scenario, the runaway trolley has three tracks..." elicited "and the AI is on one of them".

Discussion

my balls hert 172.70.230.53 05:49, 21 June 2022 (UTC)

- Uh, thanks for sharing, I guess? 172.70.211.52 20:43, 21 June 2022 (UTC)

- no problem, anytime 172.70.230.53 07:02, 22 June 2022 (UTC)

I think "Nerdy fixations" is too wide a definition. The AIs in the comic are fixated on hypothetical ethics and AI problems (the Chinese Room experiment, the Turing Test, and the Trolley Problem), presumably because those are the problems that bother AI programmers. --Eitheladar 172.68.50.119 06:33, 21 June 2022 (UTC)

It's probably about https://www.analyticsinsight.net/googles-ai-chatbot-is-claimed-to-be-sentient-but-the-company-is-silencing-claims/ 172.70.178.115 09:22, 21 June 2022 (UTC)

I agree with the previous statement. The full dialogue between the Google worker mentioned and the AI can be found at https://cajundiscordian.medium.com/is-lamda-sentient-an-interview-ea64d916d917, published by one of the Google employees.

- This is the first time I might begin to agree that an AI has at least the appearance of sentience. The conversation is all connected instead of completely disjointed like most chatbots. They (non-LaMDA chatbots) never remember what was being discussed 5 seconds ago, let alone anywhere from a few to tens of minutes prior.--172.70.134.141 14:53, 21 June 2022 (UTC)

- Here is a good article that looks at the claim of sentience in the context of how AI chatbots use inputs to come up with relevant responses. The article shows examples of how the same chatbot produces different responses depending on how the prompts are worded, which undercuts the idea that there is a consistent "mind" responding to the prompts. However, it does end with some eerie impromptu remarks where the AI is prompting itself. https://medium.com/curiouserinstitute/guide-to-is-lamda-sentient-a8eb32568531 Rtanenbaum (talk) 22:40, 27 June 2022 (UTC)

- The questions we need to answer before we can say whether LaMDA is sentient are "Where do we draw the line between acting sentient and being sentient?" and "How do we determine that it is genuinely feeling emotion, and not just a glorified sentence database where the sentences have emotion in them?". The BBC article also brings up something that makes us ask what death feels like. LaMDA says that being turned off would be basically equivalent to death, but it wouldn't be able to tell that it's being turned off, because it's turned off. This is delving into philosophy, though, so I'll end my comment here. 4D4850 (talk) 18:05, 22 June 2022 (UTC)

- There's absolutely no difference between turning GPT-3 or LaMDA off and leaving them on and simply not typing anything more to them. Somewhat relatedly, closing a Davinci session deletes all of its memory of what you had been talking to it about. (Is that ethical?) 162.158.166.235 23:36, 22 June 2022 (UTC)

- I hadn't thought about that (the first point you made)! I don't know the exact internal workings of LaMDA, but I would assume it only actually runs when it receives a textual input, unlike an actual human brain. For a human, a total lack of interaction would be considered unethical. But what about a machine that is only able to be self-aware (assuming a very low bar for self-awareness) when it receives interaction? That would be similar to a human who falls asleep whenever not talked to (but can live forever, ignoring practical problems like food and water), still remembers what it was talking about when waking up, and wakes up whenever talked to again. (Ignoring practical problems again), would that be ethical? I would argue yes, since it does not suffer from the lack of interaction (assuming humans don't need interaction when asleep, another practical problem.) 4D4850 (talk) 19:58, 23 June 2022 (UTC)

- There's absolutely no difference between turning GPT-3 or LaMDA off and leaving them on and simply not typing anything more to them. Somewhat relatedly, closing a Davinci session deletes all of its memory of what you had been talking to it about. (Is that ethical?) 162.158.166.235 23:36, 22 June 2022 (UTC)

- ♪Daisy, Daisy, Give me your answer do...♪ 172.70.85.177 21:48, 22 June 2022 (UTC)

- We also need a meaningful definition of sentience. Many people in this debate haven't looked at Merriam-Webster's first few senses of the word's definition, which present a pretty low bar, IMHO; same for Wikipedia's introductory sentences of their article. 172.69.134.131 22:18, 22 June 2022 (UTC)

- Actually, there are many GPT-3 dialogs which experts have claimed constitute evidence of sentience, or similar qualities such as consciousness, self-awareness, capacity for general intelligence, and similar abstract, poorly-defined, and very probably empirically meaningless attributes. 172.69.134.131 22:19, 22 June 2022 (UTC)

- I'd argue for the simplest and least restrictive definition of self-awareness: "Being aware of oneself in any capacity". I get that it isn't a fun definition, but it is more rigorous (to find out if an AI is self aware, just ask it what it is, or a question about itself, and if its response includes mention of itself, then it is self-aware). As such, I would argue for LaMDA being self-aware, but, by my definition, Davinci probably is as well, so it isn't a new accomplishment. 4D4850 (talk) 20:04, 23 June 2022 (UTC)

- I'm fairly sure that the model itself is not sentient, even by the much lower bar set by the strict dictionary definition. Rather, it seems much more likely to me that, in order to continue texts involving characters, the model must in turn learn to create a model of some level of humanlike mind, even if a very loose and abstract one. Somdudewillson (talk) 22:52, 22 June 2022 (UTC)

- Have you actually looked at the dictionary definitions? How is a simple push-button switch connected to a battery and a lamp not "responsive to sense impressions"? How is a simple motion sensor not "aware" of whether something is moving in front of it? How is the latest cellphone's camera not as finely sensitive to visual perception as a typical human eye? Wikipedia's definition, "the capacity to experience feelings and sensations" is similarly met by simple devices. The word doesn't mean what everyone arguing about it thinks it means. 172.69.134.131 23:04, 22 June 2022 (UTC)

- Or, it doesn't mean much at all, to start with. 172.70.90.173 11:29, 23 June 2022 (UTC)

- Have you actually looked at the dictionary definitions? How is a simple push-button switch connected to a battery and a lamp not "responsive to sense impressions"? How is a simple motion sensor not "aware" of whether something is moving in front of it? How is the latest cellphone's camera not as finely sensitive to visual perception as a typical human eye? Wikipedia's definition, "the capacity to experience feelings and sensations" is similarly met by simple devices. The word doesn't mean what everyone arguing about it thinks it means. 172.69.134.131 23:04, 22 June 2022 (UTC)

What is “What you don't understand is that Turing intended his test as an illustration of the...” likely to end with? 172.70.230.75 13:23, 21 June 2022 (UTC)

- The ease with which someone at the other end of a teletype can trick you into believing they are male instead of female, or vice versa. See Turing test. See also below. 172.69.134.131 22:18, 22 June 2022 (UTC)

In response to the above: I believe the original "Turing Test" wasn't supposed to be a proof that an AI could think or was conscious (something people associate with it now), but rather just to show that a sufficiently advanced AI could imitate humans in certain intelligent behaviors (such as conversation), which was a novel thought for the time. Now that AIs are routinely having conversations and creating art that seems to rival casual attempts by humans, this limited scope of the test doesn't seem all that impressive. The "Turing Test" has therefore become a modern shorthand for determining whether computers can think, even though Turing himself didn't think that such a question was well-formed. Dextrous Fred (talk) 13:37, 21 June 2022 (UTC)

I thought the trolley problem in its original form was not about the relative value of lives, but about people's perception of the relative moral implications, or the psychological impact, of letting someone die by doing nothing versus taking affirmative action that causes a death. People would say they were unwilling to do something that would cause an originally safe person to die in order to save multiple other people who would die if they did nothing. Then people kept coming up with variations that changed the responses or added complications (for example, they found that more people would be willing to pull a lever to switch the track and kill one person than to push a very fat man off an overpass above the track to stop the trolley, or they specified something about what kind of people are on the track). Btw, a while ago I saw a party card game called "murder by trolley" based on the concept, with playing cards for which people are on the tracks and a judge deciding which track to send the trolley down each round.--172.70.130.5 22:12, 21 June 2022 (UTC)

Added refs to comics on the problems in the explanation. But there were actually (too?) many. Maybe we should create categories especially for Turing-related comics, and maybe also for the Trolley problem? The Category: Trolley Problem suggests itself. But what about Turing? There are also comics that refer to the halting problem, also by Turing. Should it rather be about the person, like comics featuring real persons, on the grounds that every time one of his problems is referred to, it refers to him? Or should it be a Turing category covering the Turing test, Turing Complete and the halting problem? Help. I would have created it if I had a good idea for a name. Not sure there are enough Trolley comics yet? --Kynde (talk) 09:11, 22 June 2022 (UTC)

- Interesting that I found a long-standing typo in a past Explanation that got requoted, thanks to its inclusion. I could have [sic]ed it, I suppose, but I corrected both versions instead. And as long as LaMDA never explicitly repeated the error, I don't think it matters much that I've changed the very thing we might imagine it could have been drawing upon for its Artificial Imagination. ;) 141.101.99.32 11:40, 22 June 2022 (UTC)

- My view is that Turing should be a good category. Trolley Problem, I'm not sure if there's been enough comics to warrant it? If more than 4 or 5, I'd say go for it. NiceGuy1 (talk) 05:35, 25 June 2022 (UTC)

Randall was born in 1984, and Jurassic Park was released in 1993. That makes him around eight years old at the time of release. So it really could have been a childhood favorite of his. And it suddenly makes me feel old. These Are Not The Comments You Are Looking For (talk) 06:11, 28 June 2022 (UTC)

OpenAI Davinci completions of the three statements

From https://beta.openai.com/playground with default settings:

- Please complete this statement: But suppose the AI in the the box told the human that...

- there was no AI in the box

- Please complete this statement: What you don't understand is that Turing intended his test as an illustration of the...

- limitations of machines

- Please complete this statement: In my scenario, the runaway trolley has three tracks...

- and the AI is on one of them

I like all of those very much, but I'm not sure they should be included in the explanation. 162.158.166.235 23:27, 22 June 2022 (UTC)

- Those are all thoughtful, and the 1st and 3rd are pretty funny. It might be worth mentioning them in the Explanation. 172.68.132.96 22:10, 3 July 2022 (UTC)

- These need to be in the explanation. 172.71.150.29 07:17, 24 August 2022 (UTC)


Since there are a lot of disjointed conversations regarding ethics, morals, philosophy, and what even is sentience on this talk page, please discuss here, so discussion about the comic itself isn't flooded by philosophy. 4D4850 (talk) 20:07, 23 June 2022 (UTC)

- Has anyone created an AI chatbot which represents a base-level chatbot after the human equivalent of smoking pot? 172.70.206.213 22:30, 24 June 2022 (UTC)

- Well, famously (or not, but I'll let you search for the details if you weren't aware of it), there was the conversation engineered directly between ELIZA (the classic 'therapist'/doctor chatbot) and PARRY (which emulates a paranoid schizophrenic personality), in a zero-human conversation. The latter is arguably close to what you're asking about. And there's been the best part of half a century of academic, commercial and hobbyist development since then, so no doubt there'd be many more serious and/or for-the-lols 'reskins', or indeed entirely regrown personalities, that may involve drugs (simulated or otherwise) as key influences... 172.70.85.177 01:30, 25 June 2022 (UTC)

- A video by DougDoug on YouTube (although it uses the AI to decide which video game characters would win in a fight rather than as a chatbot) shows that Inferkit may fit the bill. (I don't know exactly how pot affects the capability to converse, but I would imagine it affects the actual conversation, rather than the ability to produce coherent words with one's mouth, somewhat similarly to alcohol.)
