2939: Complexity Analysis

Title text: PERPETUALLY OPTIMISTIC CASE: Early in the execution, our research group makes a breakthrough in proving P=NP.

Explanation

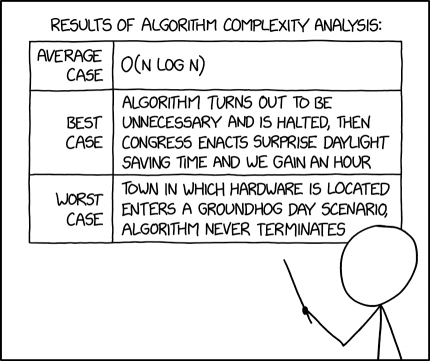

Cueball is teaching about an algorithm's complexity. The average-case complexity of the algorithm is written in Big O notation as O(n log n), expressing the limiting behavior of the algorithm's runtime as the size n of its input grows larger and larger.

The comic's joke involves taking the terms "best case" and "worst case" far more broadly and literally than intended. Cueball presents not just the best/worst cases for the data input into the function, but also the global environment as a whole, taking in factors such as the United States Congress which should fall far outside the algorithm's scope.

In particular, the joke plays on the analysis of a closed system, which is common in engineering. An algorithm's "best case" is typically its runtime when its inputs have optimal values and it runs in as little time as possible. One example would be a sorting algorithm that is called with an already-sorted list of numbers; such an algorithm may only need to check each item in the list, in one pass, to confirm this, rather than having to compare an arbitrary number of items against an arbitrary number of others across a number of cycles. The worst case would be when a list is 'unsorted' in a way that presents the maximum number of challenges and actions to the sorting algorithm (possibly, but not necessarily, when the initial list is in exactly the reverse order). Each of these limits can be given its own O-notation bound, as can the average case, which describes the expected cost over all inputs; a quoted bound should therefore say which case it describes, as the comic's table does.
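The gap between best and worst case can be made concrete with a small sketch. Insertion sort is used here purely as an illustration (the comic does not say which algorithm Cueball analysed), and the helper name `insertion_sort_comparisons` is hypothetical. It counts comparisons for an already-sorted input and for a reversed one:

```python
def insertion_sort_comparisons(items):
    """Sort a copy of items with insertion sort.

    Returns (sorted_list, number_of_comparisons). Illustrative helper only;
    not the algorithm from the comic, which is never named.
    """
    data = list(items)
    comparisons = 0
    for i in range(1, len(data)):
        j = i
        while j > 0:
            comparisons += 1
            if data[j - 1] <= data[j]:
                break  # element is already in place
            data[j - 1], data[j] = data[j], data[j - 1]
            j -= 1
    return data, comparisons


n = 8
_, best = insertion_sort_comparisons(range(n))          # already sorted
_, worst = insertion_sort_comparisons(range(n, 0, -1))  # reversed
print(best, worst)  # 7 28, i.e. n - 1 versus n * (n - 1) / 2
```

For this particular algorithm the sorted input needs one pass (linear in n), while the reversed input needs a number of comparisons that grows quadratically.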

The joke here is that this algorithm not only 'runs' quicker than what would otherwise be considered its best-case scenario, by being terminated early because it is deemed 'unnecessary', but its runtime also appears to be an hour shorter still because an act of Congress changes daylight saving time, giving it an end time (in local time) that is an hour earlier than it would otherwise have been. Potentially this results in a recorded end time that is earlier than the start time (depending on how the times are handled), and therefore an apparently negative 'runtime'. Daylight saving time is a recurrent theme on xkcd, and it is clear that Randall is not a fan, so Congress making surprise DST changes is another way for Randall to mock the concept.
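How a runtime could come out negative depends on how the timestamps are handled. The following is a minimal sketch, assuming the job is halted after ten minutes and the local clock is then set back one hour; the UTC offsets and the date are invented for illustration. Subtracting naive local times gives a negative duration, while timezone-aware arithmetic compares the actual instants:

```python
from datetime import datetime, timedelta, timezone

# Hypothetical offsets: local time before and after a surprise one-hour shift.
before = timezone(timedelta(hours=-4))
after = timezone(timedelta(hours=-5))

start = datetime(2024, 5, 29, 12, 0, tzinfo=before)
# Ten minutes later the job is halted; the end is logged in the new local time.
end = (start + timedelta(minutes=10)).astimezone(after)

# Aware datetimes are compared as instants, so the offset change is ignored.
true_elapsed = end - start

# Dropping the timezone mimics a log that only records wall-clock local time.
naive_elapsed = end.replace(tzinfo=None) - start.replace(tzinfo=None)

print(true_elapsed)                           # 0:10:00
print(naive_elapsed.total_seconds() / 60)     # -50.0
```

This is one reason benchmarks are usually timed with a monotonic clock (such as Python's `time.monotonic()`) rather than with local wall-clock time.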

The "worst case" refers to the movie Groundhog Day, in which the same events occur over and over in a sort of time loop. (This movie has been referenced before in 1076: Groundhog Day.) If the hardware running the algorithm is caught in this kind of loop, then it may also be reset to an earlier time before it finishes, meaning the algorithm would never terminate. This raises a philosophical question about the movie: whether the whole world is reset after every day, or just the town where the movie takes place. If it is just the town, and you could still connect to their hardware from outside, then from that perspective the algorithm would appear to be taking an interminably long time to run. If the whole world resets, then since people (aside from the movie's main character) do not experience the resets, the algorithm would only appear to take as long as it does in the last (non-resetting) cycle, which leads into the expected following day.

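This may be an indirect reference to the halting problem, a famous problem in computer science. The halting problem is undecidable, meaning that no general algorithm can tell whether an arbitrary program will halt on a given input. The traditional proof is by contradiction: if such a decider existed, one could build a program that asks the decider about itself and then does the opposite. In the comic, by contrast, the algorithm fails to terminate because of events outside a system that complexity analysis normally treats as closed.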

The title text refers to perhaps an even more famous problem in computer science: P versus NP. This asks whether every problem whose solution can be quickly verified (the class NP, for "nondeterministic polynomial time") can also be quickly solved (the class P, for "polynomial time"). The P-versus-NP problem is one of the seven Millennium Prize Problems, and as such carries a $1 million prize for its solution. Presumably, the problem discussed here is in NP, so if P=NP, it could be solved with a worst-case runtime bounded by some polynomial O(n^k), for a constant k. However, P vs. NP is a Millennium Prize Problem for a reason; most computer scientists expect that P≠NP, so hoping for a breakthrough in proving P=NP is "perpetually optimistic". This may be a reference to optimism bias and the planning fallacy, whereby people tend to assume that the most favourable outcome will be the most likely.
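The difference between verifying and solving can be sketched with subset sum, a standard NP-complete problem chosen here only as an example (the comic does not name the problem). The function names and the sample numbers are hypothetical. Checking a proposed answer takes time proportional to its length, while the naive solver below may try every one of the 2^n subsets:

```python
from itertools import combinations


def verify(numbers, target, certificate):
    """Check a proposed answer: distinct, valid indices whose values sum to target."""
    indices = list(certificate)
    return (
        len(set(indices)) == len(indices)
        and all(0 <= i < len(numbers) for i in indices)
        and sum(numbers[i] for i in indices) == target
    )


def solve_brute_force(numbers, target):
    """Try subsets in order of size; exponential in len(numbers) in the worst case."""
    for size in range(len(numbers) + 1):
        for indices in combinations(range(len(numbers)), size):
            if sum(numbers[i] for i in indices) == target:
                return indices
    return None


numbers = [3, 34, 4, 12, 5, 2]
found = solve_brute_force(numbers, 9)
print(found, verify(numbers, 9, found))  # (2, 4) True
```

A proof that P=NP would imply that some polynomial-time solver exists for problems like this one, although it would not necessarily be a practical one.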

Transcript

- [Cueball is holding a presentation pointer stick, pointing to a table behind him that towers above him. The table has a heading above it and then two columns and three rows. The first column is slim and the second much broader.]

- [Table Heading]

- Results of algorithm complexity analysis:

- [Row 1]

- Average case

- O(n log n)

- [Row 2]

- Best case

- Algorithm turns out to be unnecessary and is halted, then Congress enacts surprise daylight saving time and we gain an hour

- [Row 3]

- Worst case

- Town in which hardware is located enters a Groundhog Day scenario, algorithm never terminates

Discussion

I could be mistaken, but I think the "Best case" doesn't actually describe a situation where the algorithm takes the minimum amount of time. Rather, it describes that the algorithm wasn't necessary in the first place, possibly due to something like the list incidentally already being sorted. 172.68.23.74 23:25, 29 May 2024 (UTC)

- If you want to check that it's sorted, then you need to traverse the entire list at least once in the worst case, which is that the list is in fact sorted and you need to run through the whole thing: O(n). The best case example would be like checking for orderliness and finding the first two items out of order and quitting. THEN Congress enacts the time shift and you could have taken some "negative" amount of time to run that "check_if_sorted" routine. 172.69.71.134 14:36, 30 May 2024 (UTC)

- I think the joke is that someone externally decides that the algorithm is redundant, and so terminates it before completion (or never runs it at all). 172.70.162.185 17:05, 30 May 2024 (UTC)

I think that, in the best case scenario, Congress would need to make a surprise revert of Daylight Saving Time to really gain an hour. As during Daylight Saving the clock is set into the future, it would still be virtually one hour later if Daylight Saving suddenly starts. But if it stops suddenly, you gain one hour on the clock. 162.158.94.238 05:56, 30 May 2024 (UTC)

Sounds like a matter of conventional definition among those familiar with Deep Algorithm Magicks. As a mere initiate, I'd say that defining an algorithm's performance in terms of factors outside the algorithm's context, such as the possibility that it might not need to run at all, brings in a host of reference problems that I'd rather not take up arms against. 162.158.41.121 06:14, 30 May 2024 (UTC)

I'd say using Big-O for the average case if a very bad one exists is NOT an abuse. Big-O in the first place defines the behavior of a *function*, not a set of functions. Thus, I wouldn't have the slightest problem if a publication wrote, say, "Algorithm A takes O(n) steps if x!=y, but unfortunately, O(n^n) steps if x=y, which happens very rarely..." 172.71.160.70 07:35, 30 May 2024 (UTC)

I think the table in the Transcript section that reproduces the table from the comic should be moved to the Explanation section and rewritten in paragraph form in the Transcript section. We only include text within the Transcript section to help vision-impaired readers. Ianrbibtitlht (talk) 12:40, 30 May 2024 (UTC)

- It should be re-written for the transcript. I'm not convinced that reproducing it in the explanation would add anything of value to that though. 172.69.195.5 12:46, 30 May 2024 (UTC)

- You're not wrong! Ianrbibtitlht (talk) 13:10, 30 May 2024 (UTC)

"[...] conventionally closed systems are now behaving in open manners [...]" - unless this is a badly phrased way of saying something like "real-world engineering is always more complicated than a simple technical analysis would suggest", I think these parts of the explanation are going way off base. 172.70.162.186 19:02, 30 May 2024 (UTC)

Interesting he's not using theta(…) and Omega(…) but O(…) only. -- Grimaldi (talk) 20:03, 24 June 2024 (please sign your comments with ~~~~)