Difference between revisions of "1002: Game AIs"

Revision as of 04:44, 19 September 2019

Game AIs

Title text: The top computer champion at Seven Minutes in Heaven is a Honda-built Realdoll, but to date it has been unable to outperform the human Seven Minutes in Heaven champion, Ken Jennings.

Explanation

To understand the comic, you have to understand what the games are, so let's go through them. (First, though: the years in parentheses in the comic are the years in which each game was mastered by a computer.)

Solved

- These games are considered "solved", meaning the ideal maneuver for each game state (Tic-Tac-Toe, Connect Four) or each of the limited starting positions (Checkers) has already been calculated. Computers aren't so much playing as they are recalculating the list of ideal maneuvers. The same could be said for the computer's human opponent, just at a slower pace.

- Tic-tac-toe (known as Noughts and Crosses in the United Kingdom and most other Commonwealth countries) is a pencil-and-paper game for two players, X and O, who take turns marking the spaces in a 3×3 grid. With correct play from both sides the game always ends in a tie, regardless of whether humans or computers play it; because the total number of positions is small, the best move in every position is easy to work out.

- Nim is a mathematical game of strategy in which two players take turns removing objects from distinct heaps. On each turn, a player must remove at least one object, and may remove any number of objects provided they all come from the same heap.

- Ghost is a spoken word game in which players take turns adding letters to a growing word fragment. The loser is the first person who completes a valid word or who creates a fragment that cannot be the start of a word. Randall himself has written a perfect solution to Ghost, which he posted on his blog. Depending on the dictionary used, either the first player can always force a win, or the second player can.

- Connect Four (or Captain's Mistress, Four Up, Plot Four, Find Four, Fourplay, Four in a Row, Four in a Line) is a two-player game in which the players first choose a color and then take turns dropping their colored discs from the top into a seven-column, six-row vertically-suspended grid.

- Gomoku (or Gobang, Five in a Row) is an abstract strategy board game. It is traditionally played with go pieces (black and white stones) on a go board (19x19 intersections); however, because once placed, pieces are not moved or removed from the board, gomoku may also be played as a paper and pencil game. This game is known in several countries under different names.

- Black plays first, and players alternate in placing a stone of their color on an empty intersection. The winner is the first player to get an unbroken row of five stones horizontally, vertically, or diagonally.

- Checkers (in the United States, or draughts in the United Kingdom) is a group of strategy board games for two players which involve diagonal moves of uniform game pieces and mandatory captures by jumping over opponent pieces.
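To make "solved" concrete, here is a minimal sketch of how a computer can work out the ideal maneuver for every tic-tac-toe position by exhaustive search (minimax). The board encoding (a 9-character string) and the function names `winner`, `value` and `best_move` are choices made for this illustration, not anything taken from the comic or from a particular program.

```python
from functools import lru_cache

# The eight winning lines on a 3x3 board, with cells indexed 0..8 row by row.
LINES = [
    (0, 1, 2), (3, 4, 5), (6, 7, 8),  # rows
    (0, 3, 6), (1, 4, 7), (2, 5, 8),  # columns
    (0, 4, 8), (2, 4, 6),             # diagonals
]


def winner(board):
    """Return 'X' or 'O' if that player has three in a row, else None."""
    for a, b, c in LINES:
        if board[a] != " " and board[a] == board[b] == board[c]:
            return board[a]
    return None


@lru_cache(maxsize=None)
def value(board, player):
    """Value of the position for X with `player` to move: +1 X wins, 0 tie, -1 O wins."""
    won = winner(board)
    if won is not None:
        return 1 if won == "X" else -1
    if " " not in board:
        return 0  # board is full with no three in a row
    other = "O" if player == "X" else "X"
    results = [
        value(board[:i] + player + board[i + 1:], other)
        for i, cell in enumerate(board)
        if cell == " "
    ]
    # X picks the outcome best for X, O picks the outcome worst for X.
    return max(results) if player == "X" else min(results)


def best_move(board, player):
    """Return the index of one optimal move for `player`."""
    other = "O" if player == "X" else "X"
    moves = [i for i, cell in enumerate(board) if cell == " "]

    def score(i):
        return value(board[:i] + player + board[i + 1:], other)

    return max(moves, key=score) if player == "X" else min(moves, key=score)


EMPTY = " " * 9
print(value(EMPTY, "X"))  # 0: perfect play from both sides ends in a tie
```

The same brute-force idea does not scale to the games further down the chart, which is why checkers took until 2007 and chess and Go remain unsolved.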

Computers Beat Humans

- The below games have not been "solved". Some of them may be solved some day, but the large number of possible moves has so far prevented this from being done. Others cannot be "solved" due to the influence of randomness or the existence of multiple "ideal" maneuvers for each position. That said, a computer's faster reaction time, higher degree of consistency in making the right decision, and reduced risk of user error make the computer objectively better than the human opponent in nearly all situations.

- Scrabble is a word game in which two to four players score points by forming words from individual lettered tiles on a gameboard marked with a 15-by-15 grid.

- CounterStrike most likely refers to Counter-Strike, the popular multiplayer shooter video game about terrorists and counter-terrorists. Counter-Strike is notorious for the large variety of cheating tools that have been made for it; a computer would have near-perfect accuracy and reflexes, essentially making it the aimbot from hell. It is theoretically possible for a skilled player to beat an AI, but it would be extremely difficult to do so.

- Beer pong (or Beirut) is a drinking game in which players throw a ping pong ball across a table with the intent of landing the ball in a cup of beer on the other end.

- Here's the video of the University of Illinois robot mentioned in the comic.

- Reversi (marketed by Pressman under the trade name Othello) is a board game involving abstract strategy and played by two players on a board with 8 rows and 8 columns and a set of distinct pieces for each side. Pieces typically are disks with a light and a dark face, each face belonging to one player. The player's goal is to have a majority of their colored pieces showing at the end of the game, turning over as many of their opponent's pieces as possible.

- Chess is a two-player board game played on a chessboard, a square-checkered board with 64 squares arranged in an eight-by-eight grid. Each player begins the game with sixteen pieces: one king, one queen, two rooks, two knights, two bishops, and eight pawns, each of these types of pieces moving differently.

- The note mentions "the first game to be won by a chess-playing computer against a reigning world champion under normal chess tournament conditions", in the Deep Blue versus Garry Kasparov match on February 10, 1996, and the Ponomariov vs Fritz game in the Man vs Machine World Team Championship on November 21, 2005, considered the "last win by a human against top computer".

- Jeopardy! is an American quiz show featuring trivia in history, literature, the arts, pop culture, science, sports, geography, wordplay, and more. The show has a unique answer-and-question format in which contestants are presented with clues in the form of answers, and must phrase their responses in question form.

- Ken Jennings, mentioned in the title text, is a famous Jeopardy champion who was beaten by Watson, an IBM computer. This was an exhibition match featuring Jennings, Brad Rutter, and Watson that took place in February 2011.

Humans Beat Computers

- The games below are incredibly difficult to "solve" because the number of possible positions is astronomically large. Computers built in the early 21st century would take years to calculate a single "ideal" move. Worse, the human opponent has the ability to "bluff"; that is, to make a bad move, thus baiting the computer into a trap. Complex algorithms have been devised to make moves in a reasonable timeframe, but so far they are all highly vulnerable to bluffing. As mentioned in the comic, focused research and development is working on refining these algorithms to play the games better.

- StarCraft is a military science fiction real-time strategy video game. The game revolves around three species fighting for dominance in a distant part of the Milky Way galaxy known as the Koprulu Sector: the Terrans, humans exiled from Earth skilled at adapting to any situation; the Zerg, a race of insectoid aliens in pursuit of genetic perfection, obsessed with assimilating other races; and the Protoss, a humanoid species with advanced technology and psionic abilities, attempting to preserve their civilization and strict philosophical way of living from the Zerg. While even average StarCraft players can defeat the AIs that originally shipped with the games, StarCraft has since been adopted as a standard benchmark for AI research, largely because of its excellent balance. Thanks to that attention, computers can now challenge some expert players, and the trend does not look promising for human players.

Update: In a series of test matches held on December 19, 2018, AlphaStar decisively beat Team Liquid’s Grzegorz "MaNa" Komincz, one of the world’s strongest professional StarCraft players. However, this was on a single map in a single matchup (Protoss vs Protoss), and AlphaStar was arguably cheating, doing things that would be humanly impossible. Additionally, MaNa managed to exploit an AI flaw to eke out a win in the last match. Since then, Google has released AlphaStar on the ladder under a series of "barcode usernames" (made of 1s and lowercase Ls) and the agents have ranked up to Master and Grandmaster... but one still lost to Serral, one of the world's best players, recently.

- Poker is a family of card games involving betting and individualistic play whereby the winner is determined by the ranks and combinations of their cards, some of which remain hidden until the end of the game. It is also, however, a game of deception and intimidation, the ubiquitous "poker face" being considered the most important part of the game.

Update: In a 20-day poker tournament from January 11 to 31, 2017, the poker AI Libratus won against four top-class human poker players.

- Arimaa is a two-player abstract strategy board game that can be played using the same equipment as chess. Arimaa was designed to be more difficult for artificial intelligences to play than chess. Arimaa was invented by Omar Syed, an Indian American computer engineer trained in artificial intelligence. Syed was inspired by Garry Kasparov's defeat at the hands of the chess computer Deep Blue to design a new game which could be played with a standard chess set, would be difficult for computers to play well, but would have rules simple enough for his then four-year-old son Aamir to understand.

Update: On April 18, 2015, a computer won the "Arimaa Challenge", so this comic is now out of date with respect to Arimaa, which should now sit above StarCraft or Jeopardy! on the chart.

- Go is an ancient board game for two players that originated in China more than 2,000 years ago. The game is noted for being rich in strategy despite its relatively simple rules. The game is played by two players who alternately place black and white stones on the vacant intersections (called "points") of a grid of 19×19 lines (beginners often play on smaller 9×9 and 13×13 boards). The object of the game is to use one's stones to control a larger amount of territory of the board than the opponent. That computers would soon beat humans was the subject of 1263: Reassuring.

Update: On March 15, 2016, Google's AlphaGo beat Lee Sedol, who was often seen as the dominant human player of the previous decade, 4 games to 1 in a widely viewed match, and computer Go was expected to become more dominant over time. In May 2017, AlphaGo defeated Ke Jie, then the world's top-ranked human Go player. This was referenced three months later in 1875: Computers vs Humans.

- Snakes and Ladders (or Chutes and Ladders) is an ancient Indian race game, where the moves are decided entirely by die rolls. A number of tiles are connected by pictures of ladders and snakes (or chutes) which make the game piece jump forward or backward, respectively. Since the game is decided by pure chance, it occupies the limbo where a computer will always be exactly as likely to win as a human (indeed, Randall's arrow points at the dividing line between 'humans beat computers' and 'computers cannot compete').
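The pure-chance point about Snakes and Ladders can be checked with a quick simulation. This is only a sketch under stated assumptions: the snake and ladder positions in `JUMPS` are made up for illustration, a coin flip decides who moves first, and reaching or passing square 100 counts as a win. Since neither player ever makes a decision, it makes no difference which seat the "computer" occupies.

```python
import random

# Hypothetical 100-square board: keys are squares holding a ladder foot or a
# snake head, values are where the piece ends up. These positions are made up.
JUMPS = {4: 14, 9: 31, 28: 84, 40: 59, 17: 7, 62: 19, 87: 24, 95: 75}
LAST_SQUARE = 100


def play_game(rng):
    """Play one two-player game and return the index (0 or 1) of the winner."""
    positions = [0, 0]
    player = rng.randrange(2)  # a coin flip decides who moves first
    while True:
        square = positions[player] + rng.randint(1, 6)
        if square >= LAST_SQUARE:  # assumed rule: overshooting still wins
            return player
        positions[player] = JUMPS.get(square, square)
        player = 1 - player


def win_rate(games, seed=0):
    """Fraction of games won by player 0 (say, the computer)."""
    rng = random.Random(seed)
    wins = sum(play_game(rng) == 0 for _ in range(games))
    return wins / games


print(f"computer win rate: {win_rate(20_000):.3f}")
```

With enough games the printed rate should hover around one half, which is all the "strategy" there is to find.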

Computers cannot compete

- Mao (or Mau) is a card game of the Shedding family, in which the aim is to get rid of all of the cards in hand without breaking certain unspoken rules. The game is from a subset of the Stops family, and is similar in structure to the card game Uno.

- The game forbids its players from explaining the rules, and new players are often told only "the only rule you may be told is this one." The ultimate goal of the game is to be the first player to get rid of all the cards in their hand. Computers would have a difficult time integrating into Mao either because they would know all the rules -- and thus be disqualified or simply ignored by the players -- or would need a complicated learning engine that quite simply doesn't exist.

- Seven Minutes in Heaven is a teenagers' party game first recorded as being played in Cincinnati in the early 1950s. Two people are selected to go into a closet or other dark enclosed space and do whatever they like for seven minutes. Sexual activities are allowed; however, kissing and making out are more common.

- As the game is focused on human interaction, there's not a whole lot a modern computer can do in the closet. It would need some kind of robotic body in order to interact with its human partner, and emotion engines that could feel pleasure and displeasure in order to make decisions. The title text claims that Honda Motor Company has invented a "RealDoll" (sex toy shaped like a mannequin) with rudimentary Seven Minutes in Heaven capabilities, but they pale in comparison to a human's.

And finally

- Calvinball is a reference to the comic strip Calvin and Hobbes by Bill Watterson.

- Calvinball is a game played by Calvin and Hobbes as a rebellion against organized team sports; according to Hobbes, "No sport is less organized than Calvinball!" Calvinball was first introduced to the readers at the end of a 1990 storyline involving Calvin reluctantly joining recess baseball. It quickly became a staple of the comic afterwards.

- The only hint at the true creation of the game ironically comes from the last Calvinball strip, in which a game of football quickly devolves into a game of Calvinball. Calvin remarks that "sooner or later, all our games turn into Calvinball," suggesting a similar scenario that directly led to the creation of the sport. Calvin and Hobbes usually play by themselves, although in one storyline Rosalyn (Calvin's baby-sitter) plays in return for Calvin doing his homework, and plays very well once she realizes that the rules are made up on the spot.

- The only consistent rules state that Calvinball may never be played with the same rules twice, and you need to wear a mask, no questions asked. Scoring is also arbitrary, with Hobbes at times reporting scores of "Q to 12" and "oogy to boogy." The only recognizable sports Calvinball resembles are the ones it emulates (i.e., a cross between croquet, polo, badminton, capture the flag, and volleyball).

- Long story short, the game is a manifestation of pure chaos and the human imagination, far beyond the meager capabilities of silicon and circuitry, at least so far.

Transcript

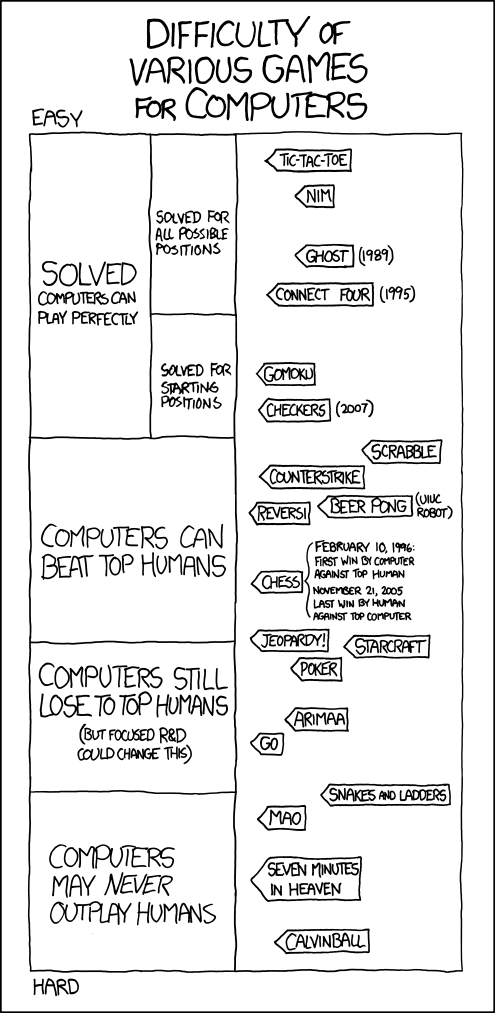

- [A diagram with a caption above the diagram. The left column describes various levels of skill for the most capable computers in decreasing performance against humans. The right side lists games in each particular section, in increasing game difficulty. There are labels denoting the hard and easy ends of the diagram.]

- Caption: Difficulty of Various Games for Computers

- Top of Diagram: Easy

- Solved: Computers can play perfectly

- Solved for all possible positions

- Tic-tac-toe

- Nim

- Ghost (1989)

- Connect Four (1995)

- Solved for starting positions

- Gomoku

- Checkers (2007)

- Computers can beat top humans

- Scrabble

- CounterStrike

- Beer Pong (UIUC robot)

- Reversi

- Chess

- February 10, 1996: First win by computer against top human

- November 21, 2005: Last win by human against top computer

- Jeopardy

- Computers still lose to top humans (but focused R&D could change this)

- StarCraft

- Poker

- Arimaa

- Go

- Snakes and Ladders

- Computers may never outplay humans

- Mao

- Seven Minutes in Heaven

- Calvinball

- Bottom of Diagram: Hard

Discussion

Mornington Crescent would be impossible for a computer to play, let alone win... -- 188.29.119.251 (talk) (please sign your comments with ~~~~)

It is unclear which side of the line Jeopardy falls upon. Why so close to the line, I wonder. DruidDriver (talk) 01:04, 16 January 2013 (UTC)

- Because of Watson (computer). (Anon) 13 August 2013 24.142.134.100 (talk) (please sign your comments with ~~~~)

Could the "CounterStrike" be referring instead to the computer game which can have computer-controlled players? --131.187.75.20 15:49, 29 April 2013 (UTC)

- I agree, this is far more likely. 100.40.49.22 10:21, 11 September 2013 (UTC)

On the old blog version of this article, a comment mentioned Ken tweeting his method right after this comic was posted. He joked that they would asphyxiate themselves to actually see heaven for seven minutes. I don't know how to search for tweets, or if they even save them after so much time, but I thought it should be noted. 108.162.237.161 07:11, 27 October 2014 (UTC)

I disagree about the poker part. Reading someone's physical tells is just a small part of the game. Theoretically there is a Nash equilibrium for the game, the reason why it hasn't been found is that the amount of ways a deck can be shuffled is astronomical (even if you just count the cards that you use) and you also have to take into account the various betsizes. A near perfect solution for 2 player limit poker has been found by the Cepheus Poker Project: http://poker.srv.ualberta.ca/.

~ Could the description of tic-tac-toe link to xkcd 832 which explains the strategy? 162.158.152.173 13:13, 27 January 2016 (UTC)

Saying that computers are very close to beating top humans as of January 2016 is misleading at best. There are not enough details in the BBC article, but it sounds like the Facebook program has about a 50% chance of beating 5-dan amateurs. In other words, it needs a 4-stone handicap (read: 4 free moves) to have a 50% chance to win against top-level amateurs, to say nothing about professionals. If a robotic team could have a 50% chance of beating Duke University at football (a skilled amateur team), would you say they were very close to being able to consistently beat the Patriots (a top-level professional team)? If anything that underestimates the skill difference in Go, but the general point stands. 173.245.54.38 (talk) (please sign your comments with ~~~~)

- How about beating one of the top players five times in a row and being scheduled to play against the world champion in March? http://www.engadget.com/2016/01/27/google-s-ai-is-the-first-to-defeat-a-go-champion/ Mikemk (talk) 06:18, 28 January 2016 (UTC)

- However, DeepMind ranked AlphaGo close to Fan Hui 2P, and the distributed version as being at the upper tier of Fan's level. http://www.nature.com/nature/journal/v529/n7587/fig_tab/nature16961_F4.html

- The official games were 5-0 however the unofficial were 3-2. Averaging to 8-2 in favor of AlphaGo.

- Looking at http://www.goratings.org/, Fan Hui is ranked 631, while Lee Sedol 9P, who is playing in March, is in the top 5. 108.162.218.47 06:12, 5 February 2016 (UTC)

- Original poster here (sorry, not sure how to sign). Okay, you all are right. Go AI has advanced a lot more than I had understood. I'm still curious how the game against Lee Sedol will go, but that that is even an interesting question shows how much Go AI has improved. 173.245.54.33 (talk) (please sign your comments with ~~~~)

Google's Alpha Go won, 3 games to none! Mid-March 2016. Saspic45 (talk) 03:06, 14 March 2016 (UTC)

Okay, for some reason they played all five games. Lee Sedol won game 4 as the computer triumphed 4-1. Saspic45 (talk) 08:44, 17 March 2016 (UTC)

Not shown in this comic: 1000 Blank White Cards, and, even further down, Nomic. KangaroOS 01:22, 19 May 2016 (UTC)

Is the transcript (currently in table format) accessible for blind users? Should it be? 162.158.58.237 10:48, 19 February 2017 (UTC)

At the very least the transcript needs to be fixed so that it factually represents the comic. Jeopardy is in the wrong spot with just a quick glance which is all I have time for here at work. 162.158.62.231 16:58, 24 August 2017 (UTC)

AlphaStar beat Mana pretty decisively, but it was cheating and Mana won the game where it wasn't, and it could only play on a certain map in Protoss vs Protoss. However, that was a while ago. Google dropped AlphaStar on ladder under barcode usernames, and it's been doing rather well... but Serral (one of the world's best players) recently beat it pretty decisively. 172.68.211.244 01:49, 19 September 2019 (UTC)

- In case anyone is checking up on the AlphaStar thing: AlphaStar definitely plays at a high human level in Starcraft 2 now (2020), without doing much that seems 'humanly impossible' (ie, like cheating, as it did during the MaNa matchup), but it's in relatively limited maps, and it not only loses fairly regularly if not most of the time to top-ranked humans like Serral, it also loses to essentially random grab-bags of very good players like Lowko on occasion. Like, Lowko's much better than me but he's not tournament-level good and he beat it.

- Also, technically, AlphaStar isn't even *one* program. It's an ensemble of many programs, each one specific to a different SC2 race and specializing in different strategies. Maybe if there were a 'seamless' amalgam where it were 'choosing' a strategy it could be arguably one program, but it's literally a totally separately trained neural network for each 'agent'.

- Furthermore, when you watch it play sometimes it does extremely stupid things like trap its tanks in its own base. SC2 is, at least this year, still a human endeavor at high tiers. 172.68.38.44 01:21, 26 July 2020 (UTC)

Who is Ken Jennings?

I feel like it should be relatively easy to make a computer program that can learn the rules of Mao without knowing them to begin with. There has to be some feedback: a player gets penalties if he breaks the rules. This can be used to write a self-learning algorithm.

- The tricky part is that rules in Mao aren't limited to a function that states whether or not you can play a card based on the cards already played. Rules can be about how you play the card, how you sit, what you say, what you do if you play a certain card, etc. Rules can also apply out of turn. You could be required to do something in reaction to another player doing something (e.g. congratulate a player if they play a King), or penalised for e.g. speaking to the player whose turn it currently is. In order for a computer to compete successfully, it would need to ingest a lot of peripheral information and run some sophisticated learning that accounts for far more than simply the state of the cards. Particularly within a regular group of players, there are rules that will be reused a lot, e.g. certain cards acting as Uno special cards, but there is no guarantee these will appear and players can make up arbitrary rules. --Tom

Sorry, but I just undid a new editor's addition of the text (for Snakes And Ladders) of "except that as the game has to be played on software so that an AI can participate, a (pseudo)-random number generator takes the place of physical dice in dictating players' movement, making it no longer truly random. Also, while physical snakes-and-ladders boards are fixed in their design, the graphical representation of these boards can be altered at will." - Firstly because it was bullet-point-added, when it wasn't really supposed to be, but then I decided it needed much more editing than I thought worthwhile. To bullet my thoughts, though:

- See the Beer Pong example for "AI in reality",

- Even if there's not a robot arm shaking a physical dice (which is pseudorandom itself, at least in a Newtonian perspective, but tends to be Ok) as long as the supposed-PRNG is not controlled/filtered by the AI then it's perfectly valid for use,

- Machine-vision is a thing. I'm sure there's a trivial way to make a generalised board-image-decoder to get around the artistic differences (possibly even machine learning, to make actual use of the AI).

....The main issue is that solving all these 'problems' leaves little for the backend 'gameplay and strategy' AI to do, as it must just pursue the path to victory (or not) that the dice/whatever formulaically dictate. So I want to honour the edit by mentioning it, but ultimately decided it should be undone. Pending perhaps a different approach to it, if anyone decides I was not fair (or correct) in my decision to do it this way. 172.70.162.147 13:09, 12 February 2022 (UTC)

If Snakes and Ladders and Seven Minutes in Heaven are mentioned, why not Game of Life? --162.158.159.132 17:24, 3 March 2024 (UTC)

- That's (as I suspected) a disambiguation page, making me still wonder whether you mean Conway's Game of Life (which is a bit open-ended) or The Game of Life (which, if I remember the gameplay well enough is basically going to be the same luck-based-'challenge' as Snakes And Ladders with an expanded set of thematic bells-and-whistles added).

- Such a confluence of names, however, makes me want to suggest Mastermind (board game) (well within 'solved' for just brute-force logic) or Mastermind (British game show) (you'd definitely need a Watson-level AI, though you probably could zhoosh it up with a ChatGPT front-end). 172.71.242.160 19:04, 3 March 2024 (UTC)

- Conway's Game of Life is a zero-player game with no winners or losers. The Milton Bradley/Hasbro The Game of Life involves a lot of luck, but there is some player decisionmaking involved, unlike Snakes and Ladders. --208.59.176.206 00:38, 5 January 2026 (UTC)

I'm a little late to say this, but Update for Go: In early 2023, Kellin Pelrine defeated KataGo and Leela Zero, not by being a top player, but by employing an unusual strategy that the AIs couldn't detect, even though most human players could. (Admittedly, the strategy itself was found by yet another AI). [1] [2] Hhhguir (talk) 07:33, 6 October 2024 (UTC)

Update: Now 'AI' (well, LLM actually) learns to cheat playing chess 799571388 (talk) 11:40, 14 February 2025 (UTC)