1838: Machine Learning

Title text: The pile gets soaked with data and starts to get mushy over time, so it's technically recurrent.

Explanation

Machine learning is a method used to automate complex tasks. It usually involves creating algorithms that perform statistical analysis and pattern recognition on data to generate output. The validity or accuracy of that output can then be fed back to adjust the system, usually making future results statistically better.
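To make the feedback idea concrete, here is a minimal sketch in Python. It is an illustration only, not how any particular system works: a one-variable linear model whose two parameters are repeatedly adjusted using the error of its own answers, which is plain gradient descent on squared error. The function name `train_linear`, the learning rate and the toy data are all hypothetical choices made for this example.

```python
def train_linear(xs, ys, lr=0.05, epochs=2000):
    """Fit y ~ w*x + b by batch gradient descent on mean squared error."""
    w, b = 0.0, 0.0  # arbitrary starting guess
    n = len(xs)
    for _ in range(epochs):
        # Compare the model's current answers with the known correct ones.
        errors = [(w * x + b) - y for x, y in zip(xs, ys)]
        # Gradient of the mean squared error with respect to w and b.
        grad_w = 2.0 / n * sum(e * x for e, x in zip(errors, xs))
        grad_b = 2.0 / n * sum(errors)
        # Feed the error back: nudge both parameters downhill.
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]  # toy data generated from y = 2x + 1
w, b = train_linear(xs, ys)
print(round(w, 3), round(b, 3))  # prints: 2.0 1.0
```

Each pass through the loop is one round of "check the answers, then adjust"; real systems do the same thing with far more parameters and far messier data.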

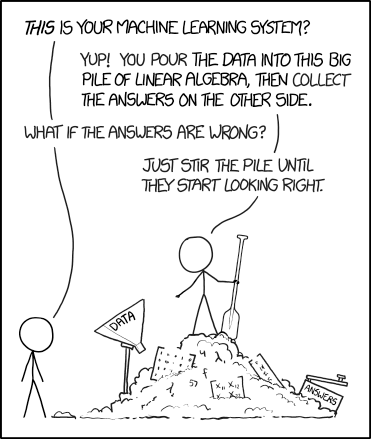

Cueball stands next to what looks like a pile of garbage (or compost), with a Cueball-like friend standing atop it. The pile has a funnel (labelled "data") at one end and a box labelled "answers" at the other. Here and there mathematical matrices stick out of the pile. As the friend explains to the incredulous Cueball, data enters through the funnel, undergoes an incomprehensible process of linear algebra, and comes out as answers. The friend appears to be a functional part of this system himself, as he stands atop the pile stirring it with a paddle. His machine learning system is probably very inefficient, as he is integral to both the mechanical part (repeated stirring) and the learning part (making the answers look "right").

The main joke is that, although this description is vague and gives no intuition about or detail on how the system works, it is close to the level of understanding most machine learning experts have of how many machine learning techniques arrive at their answers. Machine learning algorithms that can reasonably be described as pouring data into linear algebra and stirring until the output looks right include support vector machines, linear regression, logistic regression, and neural networks. Major recent advances in machine learning often amount to "stacking" the linear algebra differently, or varying the stirring technique for the compost.
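For the curious, the sketch below shows what "a pile of linear algebra" can look like in code. It is a minimal illustration under simplifying assumptions (pure Python lists instead of a numerical library, made-up weights, and hypothetical helper names such as `layer`): logistic regression is one matrix-vector product followed by a sigmoid, and a small neural network is the same ingredient stacked two layers deep.

```python
import math


def matvec(W, x):
    """Multiply matrix W (a list of rows) by vector x."""
    return [sum(w_ij * x_j for w_ij, x_j in zip(row, x)) for row in W]


def layer(W, b, x, activation):
    """One layer: the affine map W @ x + b, then an elementwise nonlinearity."""
    return [activation(z + b_i) for z, b_i in zip(matvec(W, x), b)]


def relu(z):
    return max(0.0, z)


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def logistic_regression(w, b, x):
    """A single layer with one output, squashed into (0, 1) by a sigmoid."""
    return layer([w], [b], x, sigmoid)[0]


def two_layer_net(W1, b1, W2, b2, x):
    """The same ingredients stacked: a ReLU hidden layer feeding a sigmoid output."""
    hidden = layer(W1, b1, x, relu)
    return layer(W2, b2, hidden, sigmoid)[0]


# Made-up weights, just to show the data flowing through.
x = [1.0, 2.0]
p_single = logistic_regression([0.5, -0.25], 0.0, x)
p_stacked = two_layer_net([[1.0, 0.0], [0.0, 1.0]], [0.0, -3.0],
                          [[2.0, 1.0]], [-2.0], x)
print(p_single, p_stacked)  # prints: 0.5 0.5
```

In a real system the weights would come from training (the "stirring") rather than being written by hand; "stacking the linear algebra differently" roughly corresponds to changing how many layers there are and how they are wired together.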

Composting

This comic compares a machine learning system to a compost pile. Composting is the process of taking organic matter, such as food and yard waste, and allowing it to decompose into a form that serves as fertilizer. A common method of composting is to mound the organic matter in a pile with a certain amount of moisture, then "stirring" the pile occasionally to move the less-decomposed material from the top to the interior of the pile, where it will decompose faster.

In large-scale composting operations, the raw organic matter added to the pile is referred to as "input". This cartoon implies a play on the term "input", comparing a compost input to a data input.

Title text

A recurrent neural network is a neural network whose connections form cycles, so that the output of some nodes is fed back in as input at the next step. These feedback loops give the network "state": the results of previous inputs affect how each successive input is processed. In the title text, Randall is saying that the machine learning system is technically recurrent because the pile itself "changes" (i.e. gets soaked and mushy) over time, so earlier data affects how later data is processed.
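A minimal sketch of the "state" idea follows, assuming the simplest possible recurrent cell: a single scalar hidden value updated with `tanh`. The weights `w_h`, `w_x` and `b` are made-up numbers rather than trained ones, and the function names are hypothetical. Note that the weights never change here; what carries over from one input to the next is the hidden state, which is why the same final input can give different results depending on what came before.

```python
import math


def rnn_step(h_prev, x, w_h=0.5, w_x=1.0, b=0.0):
    """One step of a minimal scalar recurrent cell.

    The new state depends on the current input AND the previous state."""
    return math.tanh(w_h * h_prev + w_x * x + b)


def run_rnn(inputs, h0=0.0):
    """Feed a sequence through the cell, carrying the state along."""
    h = h0
    states = []
    for x in inputs:
        h = rnn_step(h, x)  # state from the previous input is reused here
        states.append(h)
    return states


# The same final input (1.0) after two different histories.
after_quiet = run_rnn([0.0, 1.0])
after_loud = run_rnn([2.0, 1.0])
print(after_quiet[-1], after_loud[-1])
```

The first sequence ends at `tanh(1.0)`, while the second ends higher because the earlier input of 2.0 is still "in the pile".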

Transcript

[Cueball Prime holds a canoe paddle at his side and stands on top of a "big pile of linear algebra" containing a funnel labeled "data" and a box labeled "answers". Cueball II stands on the left side of the panel.]

Cueball II: This is your machine learning system?

Cueball Prime: Yup! You pour the data into this big pile of linear algebra, then collect the answers on the other side.

Cueball II: What if the answers are wrong?

Cueball Prime: Just stir the pile until they start looking right.

Discussion

Apparently, there is an issue with people "training" intelligent systems based on their gut feeling. Let's say, for example, that a system should determine whether or not a person should be promoted to fill a currently vacant business position. If the system is taught by the humans currently in charge of that very decision, and it learns to penalize the candidates the humans would decline and favor the ones they wouldn't, all these people might be doing is feeding the machine their own irrational biases. Then, down the road, some candidate may be declined because "computer says so". One could argue that this, if it happens, is just bad usage and not an inherent issue of machine learning itself, so I'm not sure if this thought can be connected to the comic. In my head, it's close to "stirring the pile until the answers look right". What do you people think? 162.158.88.2 05:39, 17 May 2017 (UTC)

It's a good point but I don't think it's relevant to the comic. 141.101.107.252 13:55, 17 May 2017 (UTC)

Up the creek *with* a paddle. 162.158.111.121 07:52, 17 May 2017 (UTC)

It's a compost pile! Stir it and keep it moist until something useful comes out. 162.158.75.64 11:40, 17 May 2017 (UTC)

Actually, I don't think the paddle has anything to do with canoes; paddles like that are often used for stirring large quantities. In Louisiana it's called a crawfish or gumbo paddle.

I think the entire paragraph that goes "One of the most popular paradigms of..." needs to be cleaned up to make it human readable. Nialpxe (talk) 12:09, 17 May 2017 (UTC)

The comment that SVMs would be a better paradigm than neural networks is kind of wrong. Anyone who's worked with neural networks knows they're still essentially a linear algebra problem, just with nonlinear activation functions. Play around with TensorFlow (it's fun and educational!) and you'll find most of the linear algebra isn't abstracted away as it might be in Keras, scikit-learn or caret (R). That being said, interpretability is absolutely a problem with these complex models. This is partly because the world doesn't like conforming to the nice modernist notion of a sensible theory (i.e. one that can be reduced to a nice linear relationship), but even things like L1 regularisation often leave you wondering "but how does it all fit together?". On the other hand, while methods like SVMs still have a bit of machine learning magic in resolving how their hyperplane divides the hyperspace (i.e. the value is derived empirically, not theoretically), the results are typically human interpretable, for a given definition of interpretable. It's no y = wx + b, but it's definitely possible. The same goes for most methods short of very deep neural nets with millions of parameters. Most machine learning experts I've met have a pretty good idea of what is going on in the simpler models, such as CARTs, SVMs, boosted models etc. The only reason neural nets are black-box-y is that there's a huge amount going on inside them, and it's too much effort to do more than analyse outputs! 172.68.141.142 22:43, 17 May 2017 (UTC)

- I remember from school that neural nets can get extremely hard to analyze even when they only contain five neurons. -- Hkmaly (talk) 03:20, 27 May 2017 (UTC)
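The point above about neural networks being "linear algebra plus nonlinear activation functions" can be checked with a few lines of Python. In this minimal sketch (made-up weights, hypothetical pure-Python helpers), two stacked linear layers with no activation collapse into a single matrix, so they are no more expressive than one linear layer; putting a ReLU between them breaks that shortcut.

```python
def matmul(A, B):
    """Multiply matrices A (m x n) and B (n x p), given as lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]


def matvec(A, x):
    """Multiply matrix A (a list of rows) by vector x."""
    return [sum(a * xi for a, xi in zip(row, x)) for row in A]


def relu(v):
    """Elementwise ReLU: negative entries become zero."""
    return [max(0.0, t) for t in v]


W1 = [[1.0, 2.0], [3.0, -1.0]]  # made-up first-layer weights
W2 = [[0.5, -2.0]]              # made-up second-layer weights
x = [-1.0, 1.0]

# Two linear layers with no activation in between...
two_layers = matvec(W2, matvec(W1, x))
# ...give exactly the same result as one layer with weights W2 @ W1.
one_layer = matvec(matmul(W2, W1), x)
# With a ReLU in between, the network is no longer a single linear map.
with_relu = matvec(W2, relu(matvec(W1, x)))

print(two_layers, one_layer, with_relu)  # prints: [8.5] [8.5] [0.5]
```

This is why depth only adds expressive power when there is a nonlinearity between the layers.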

Does anyone else think the topic may have been influenced by Google's recent (May 17) featured article about machine learning? [1] --162.158.79.35 12:17, 17 May 2017 (UTC)

- Google has been saying a lot about machine learning recently, particularly w.r.t. Android. 141.101.107.30 04:43, 19 May 2017 (UTC)

Maybe one day bots will learn to create entire explanations for xkcd. 141.101.99.179 12:38, 17 May 2017 (UTC)

- Good, then maybe we won't have over-thought explanations anymore.

- "That was a joke, haha" Elektrizikekswerk (talk) 07:36, 18 May 2017 (UTC)

- I lovingly think of this site as "Over-Explain XKCD" 172.68.54.112 17:44, 20 May 2017 (UTC)

The fuck is "Pinball"? 162.158.122.66 03:59, 19 May 2017 (UTC)

- Agree, expunged it. 162.158.106.42 08:38, 24 May 2017 (UTC)

On the topic of "stirring", I'm not sure why it's being associated with neural networks. It's a common thing in machine learning to randomize starting conditions to avoid local minima. This does exist in neural networks, as edge weights are typically randomized, but it's also the first step in many different algorithms, such as k-means, where the initial centroid locations are randomized, or decision trees, where random forests are sometimes used. 173.245.50.186 13:18, 19 May 2017 (UTC) sbendl
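The random-restart idea described above can be sketched with k-means. This is a minimal 1-D illustration using assumed toy data and hypothetical function names (`kmeans_1d`, `kmeans_restarts`), not a production implementation: each run starts from randomly chosen centroids and may settle into a local minimum, so several runs are made and the one with the lowest within-cluster squared error (inertia) is kept.

```python
import random


def kmeans_1d(points, k, rng, iters=20):
    """Plain k-means on 1-D data, starting from k randomly chosen points."""
    centroids = rng.sample(points, k)  # random initial centroids
    for _ in range(iters):
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda i: (p - centroids[i]) ** 2)
            clusters[nearest].append(p)
        # Move each centroid to its cluster mean (keep it if the cluster is empty).
        centroids = [sum(c) / len(c) if c else centroids[i]
                     for i, c in enumerate(clusters)]
    inertia = sum(min((p - c) ** 2 for c in centroids) for p in points)
    return sorted(centroids), inertia


def kmeans_restarts(points, k, restarts=50, seed=0):
    """"Stir the pile": rerun from many random starts and keep the best result."""
    rng = random.Random(seed)
    runs = [kmeans_1d(points, k, rng) for _ in range(restarts)]
    return min(runs, key=lambda run: run[1])


data = [1.0, 1.1, 0.9, 5.0, 5.2, 4.8, 9.0, 9.1, 8.9]
centroids, inertia = kmeans_restarts(data, k=3)
print(centroids, inertia)
```

A single run can get stuck, for example with two centroids inside the cluster around 1.0 and the clusters around 5.0 and 9.0 left to share the third; keeping the best of many runs makes that outcome very unlikely for well-separated data like this.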

Fixing the explanation

Right now, the explanation has two parts, one that is simply trying to explain it for the casual reader, and another that goes into the details of machine/deep learning, linear algebra, neural networks etc. (I almost forgot composting!) The way the two parts are jumbled together makes no sense. Perhaps having a simple initial explanation with subsections for more detailed explanation of individual topics relevant to the comic would fix the mess. Nialpxe (talk) 14:08, 19 May 2017 (UTC)

I only came here to get an explanation of "recurrent," and I can't find it.

- seconded --Misterstick (talk) 11:29, 5 April 2018 (UTC)

- I'm a machine learning geek and I'll try to add an explanation of "recurrent" in a few minutes. Singlelinelabyrinth (talk) 05:22, 27 July 2020 (UTC)

I like the use of "Cueball Prime" and "Cueball II" in the transcript. Can someone make that a part of the style guide?

LLM-Era References (2023+)

This was just reposted on the "Marcus On AI" Substack, forwarded through Dave Farber's "Interesting People" list, in reference to the LLM ChatGPT allegedly "going berserk". https://garymarcus.substack.com/p/chatgpt-has-gone-berserk https://ip.topicbox.com/groups/ip/T775bf6cac82b32a1/chatgpt-has-gone-berserk

172.70.47.58 03:19, 21 February 2024 (UTC)