882: Significant

Title text: So, uh, we did the green study again and got no link. It was probably a-- "RESEARCH CONFLICTED ON GREEN JELLY BEAN/ACNE LINK; MORE STUDY RECOMMENDED!"

Explanation

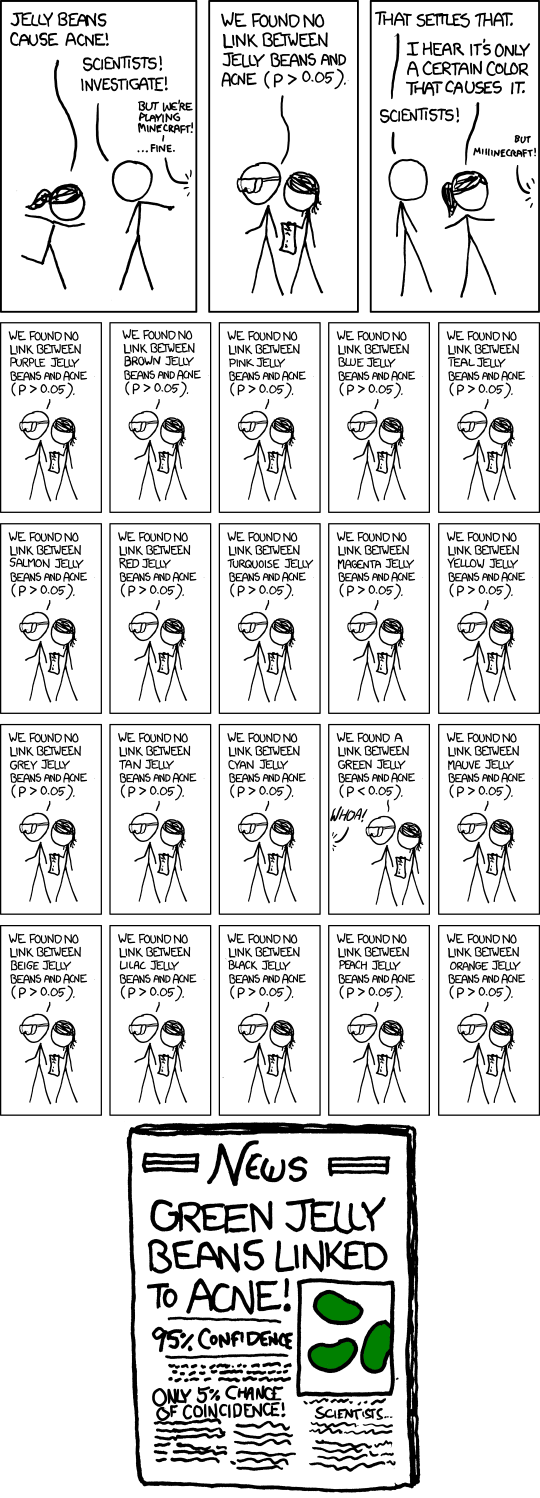

This comic is about data dredging (aka p-hacking), and the misrepresentation of science and statistics in the media. A girl with a black ponytail comes to Cueball with her claim that jelly beans cause acne, and Cueball then commissions two scientists (a man with goggles and Megan) to do some research on the link between jelly beans and acne. They find no link, but in the end the real result of this research is bad news reporting!

First, some basic statistical theory. Let’s imagine you are trying to find out if jelly beans cause acne. To do this you could find a group of people and randomly split them into two groups: one group that you get to eat lots of jelly beans and a second group that is banned from eating them. After some time you compare whether the group that eats jelly beans has more acne than the group that does not. If more people in the jelly bean group have acne, then you might think that jelly beans cause acne. However, there is a problem.

Some people will suffer from acne whether they eat jelly beans or not, and some will never have acne even if they do eat jelly beans. There is an element of chance in how many acne-prone people end up in each group. What if, purely by chance, everyone we selected to eat jelly beans would have had acne anyway, while those who didn’t eat jelly beans were the lucky sort of people who never get spots? Then, even if jelly beans did not cause acne, we would conclude that they did. Of course, it is very unlikely that all the acne-prone people end up in one group by chance, especially if we have enough people in each group.

To quantify this, scientists use statistics to work out how likely a result at least as extreme as the one they found would be if chance alone were at work, that is, if jelly beans had no effect on acne (the null hypothesis). This probability is the p-value, and the approach is known as statistical hypothesis testing. Before we start the experiment, we choose a threshold known as the significance level. In the comic the scientists choose a threshold of 5 %. If more of the people who ate jelly beans had acne and the p-value is less than 5 % (1 in 20), they will say they have found a link between jelly beans and acne. If, however, the p-value is greater than 5 %, they will say they have found no evidence of a link. The important point is this: even when there is no real effect, a test at the 5 % level will still report a link about 1 time in 20, purely as a statistical fluke.
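The testing logic described above can be sketched in code. This is only an illustration, not the method used in the comic (which never says what test the scientists ran): it assumes a one-sided permutation test on made-up 0/1 acne outcomes, and the function name `permutation_p_value` and the example data are hypothetical.

```python
import random


def permutation_p_value(treated, control, n_shuffles=2000, seed=0):
    """Estimate a one-sided p-value by randomly relabelling participants.

    treated / control are lists of 0/1 outcomes (1 = has acne).
    The result estimates how often chance alone produces a difference in
    acne rates at least as large as the one observed.
    """
    rng = random.Random(seed)
    n_treated = len(treated)

    def rate_difference(group_a, group_b):
        return sum(group_a) / len(group_a) - sum(group_b) / len(group_b)

    observed = rate_difference(treated, control)
    pooled = list(treated) + list(control)
    at_least_as_extreme = 0
    for _ in range(n_shuffles):
        rng.shuffle(pooled)
        diff = rate_difference(pooled[:n_treated], pooled[n_treated:])
        # Small tolerance guards against floating-point rounding.
        if diff >= observed - 1e-12:
            at_least_as_extreme += 1
    # Add-one correction keeps the estimate strictly above zero.
    return (at_least_as_extreme + 1) / (n_shuffles + 1)


# Hypothetical data: 30 jelly bean eaters and 30 non-eaters.
eaters = [1] * 12 + [0] * 18      # 12 of 30 have acne
non_eaters = [1] * 10 + [0] * 20  # 10 of 30 have acne
p = permutation_p_value(eaters, non_eaters)
print(f"p = {p:.3f}; significant at the 5 % level: {p < 0.05}")
```

The permutation approach was chosen here because it mirrors the reasoning in the text directly: shuffling the group labels simulates a world where jelly beans make no difference, and the p-value is the share of those shuffles that look at least as bad for jelly beans as the real data.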

At first, the scientists do not want to stop playing Minecraft, but they do eventually start. Minecraft was previously referenced in 861: Wisdom Teeth.

The scientists find no link between jelly beans and acne (the p-value is more than 5 %, i.e. p > 0.05), but then the girl with the black ponytail suggests that only one color of jelly bean is responsible, and Cueball sends the scientists back to work. They test 20 different colors, each at a significance level of 5 %. Nineteen colors show no link, but one, green, does (p < 0.05).

This finding leads to a big newspaper headline saying Green Jelly Beans Linked To Acne where it is said that they have 95 percent confidence with only a 5 % chance of a coincidence. Unfortunately, while the p-values reported by the scientists are (presumably) mathematically correct, the wording in the newspaper is misleading and would only apply if green jelly beans were the only ones tested. Common sense should tell you that when you do a whole bunch of tests, it becomes much more likely that you’ll get a false positive result.
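A quick simulation can illustrate why running many tests inflates the chance of a false positive. This sketch rests on stated assumptions: no color has any real effect, the tests are independent, and each p-value is therefore uniformly distributed between 0 and 1. The function name and the number of simulated studies are illustrative choices.

```python
import random


def share_of_studies_with_false_positive(n_tests=20, alpha=0.05,
                                         n_studies=20000, seed=882):
    """Fraction of simulated studies in which at least one test is 'significant'.

    Assumption: every null hypothesis is true, so each p-value is drawn
    uniformly from [0, 1) and any p < alpha is a false positive.
    """
    rng = random.Random(seed)
    hits = 0
    for _ in range(n_studies):
        p_values = [rng.random() for _ in range(n_tests)]
        if min(p_values) < alpha:
            hits += 1
    return hits / n_studies


one_test = share_of_studies_with_false_positive(n_tests=1)
twenty_tests = share_of_studies_with_false_positive(n_tests=20)
print(f"1 test per study:   {one_test:.1%} of studies report a link")
print(f"20 tests per study: {twenty_tests:.1%} of studies report at least one link")
```

With a single test per study, the share of false alarms stays near the 5 % significance level; with 20 tests per study it is far higher, which is why the newspaper's "only 5 % chance of coincidence" does not describe what the scientists actually did.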

In the title text, we find out that the scientists repeated the experiment (another key part of the scientific method), and this time they find no evidence for a link between acne and green jelly beans. They try to tell the reporter something, perhaps that the first result was probably a coincidence, but the reporters are not interested since that is not news, and refuse to listen. Instead, they make another major headline from the repeat study saying “Research conflicted” (which is not accurate: the scientists doubted their results and had their doubts confirmed) and recommending more study on the link (which is exactly what the scientists just did).

To elaborate on the statistical theory behind this issue: if the probability that each trial gives a false positive result is 1 in 20, then by testing 20 different colors it becomes likely that at least one jelly bean test will give a false positive. To be precise, assuming the tests are independent and there is no real effect, the probability of having zero false positives in 20 tests is 0.95^20 = 35.85 %, while the probability of having at least one false positive in 20 tests is 64.15 %. (The probability of having zero false positives in 21 tests, counting the test without color discrimination, is 0.95^21 = 34.06 %.)

In scientific fields that perform many simultaneous tests on large amounts of data it is therefore common to adjust for the effect of multiple testing, typically by controlling the False Discovery Rate, which is the (expected) number of false positives compared to all positive results (here, it would be 1/1 = 1). For this, you bundle your tests into a single “test of tests” and adjust your single-test p-values such that the chance of your “test of tests” reporting a significant result falls below a certain threshold. Typically, that threshold is 0.05, the same as the conventional significance level for a single test, and it can be interpreted the same way: only 1 in 20 “tests of tests” would report a result at this level of significance even if the null hypothesis were true. Applying the Benjamini–Hochberg procedure, the lowest p-value of a set of 20 tests would need to be smaller than (1/20) × 0.05 = 0.0025 to be accepted as significant. Such an adjustment would likely have prevented the situation depicted in the comic.
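The arithmetic and the adjustment described above can be checked with a short sketch. The p-values below are made up for illustration (the comic only reports p < 0.05 or p > 0.05), and the function names are hypothetical; the rule implemented is the standard Benjamini–Hochberg step-up procedure, with independent tests assumed for the probability calculation.

```python
def prob_at_least_one_false_positive(alpha, n_tests):
    """Chance of one or more false positives across independent tests
    when every null hypothesis is true."""
    return 1 - (1 - alpha) ** n_tests


def benjamini_hochberg(p_values, q=0.05):
    """Return the indices of hypotheses rejected by the step-up procedure.

    Sort the p-values, find the largest rank k with p_(k) <= (k / m) * q,
    and reject the k hypotheses with the smallest p-values.
    """
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    k_max = 0
    for rank, idx in enumerate(order, start=1):
        if p_values[idx] <= rank / m * q:
            k_max = rank
    return sorted(order[:k_max])


print(f"{prob_at_least_one_false_positive(0.05, 20):.2%}")

# Hypothetical p-values for 20 colors; index 13 stands in for green.
others = [0.10 + 0.045 * i for i in range(19)]
comic_like = others[:13] + [0.03] + others[13:]
strong_green = others[:13] + [0.002] + others[13:]

print(benjamini_hochberg(comic_like))    # green at p = 0.03
print(benjamini_hochberg(strong_green))  # green at p = 0.002
```

In this made-up example, a green result of p = 0.03 passes the naive 5 % threshold but not the adjusted one, whereas p = 0.002 falls below the 0.0025 cutoff for the smallest of 20 p-values and would still be reported.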

This general situation is (sadly) often an issue with more serious matters than jelly beans and acne — at any one time there are many studies about possible links between substances (e. g. red wine) and illness (e. g. cancer). Because only the positive results get reported, this limits the value any single study has — especially if the mechanism linking the two things is not known.

p-hacking and bad news reporting in real life

In 2015 some journalists demonstrated how readily news outlets pick up results from the same sort of flawed “experimental design”: “How, and why, a journalist tricked news outlets into thinking chocolate makes you thin” (The Washington Post).

Transcript

- [A girl with a black ponytail runs up to Cueball, who subsequently points off-panel where there are presumably scientists.]

- Girl with black ponytail: Jelly beans cause acne!

- Cueball: Scientists! Investigate!

- Scientist (off panel): But we're playing Minecraft!

- Scientist (off panel): ...Fine.

- [Two scientists. The man has safety goggles on, Megan has a sheet of notes.]

- Scientist with goggles: We found no link between jelly beans and acne (p > 0.05).

- [Back to the original two.]

- Cueball: That settles that.

- Girl with black ponytail: I hear it's only a certain color that causes it.

- Cueball: Scientists!

- Scientist (off panel): But Miiiinecraft!

- [20 nearly identical small panels follow, in 4 rows of 5 columns. Each shows the exact same picture as panel 2 above: the scientist with goggles states the results and Megan holds some notes in her hand. The only difference from panel to panel is the color, except in the 14th panel, where the result is positive and there is an exclamation from off-panel.]

- Scientist with goggles: We found no link between purple jelly beans and acne (p > 0.05).

- Scientist with goggles: We found no link between brown jelly beans and acne (p > 0.05).

- Scientist with goggles: We found no link between pink jelly beans and acne (p > 0.05).

- Scientist with goggles: We found no link between blue jelly beans and acne (p > 0.05).

- Scientist with goggles: We found no link between teal jelly beans and acne (p > 0.05).

- Scientist with goggles: We found no link between salmon jelly beans and acne (p > 0.05).

- Scientist with goggles: We found no link between red jelly beans and acne (p > 0.05).

- Scientist with goggles: We found no link between turquoise jelly beans and acne (p > 0.05).

- Scientist with goggles: We found no link between magenta jelly beans and acne (p > 0.05).

- Scientist with goggles: We found no link between yellow jelly beans and acne (p > 0.05).

- Scientist with goggles: We found no link between grey jelly beans and acne (p > 0.05).

- Scientist with goggles: We found no link between tan jelly beans and acne (p > 0.05).

- Scientist with goggles: We found no link between cyan jelly beans and acne (p > 0.05).

- Scientist with goggles: We found a link between green jelly beans and acne (p < 0.05).

- Voice (off panel): Whoa!

- Scientist with goggles: We found no link between mauve jelly beans and acne (p > 0.05).

- Scientist with goggles: We found no link between beige jelly beans and acne (p > 0.05).

- Scientist with goggles: We found no link between lilac jelly beans and acne (p > 0.05).

- Scientist with goggles: We found no link between black jelly beans and acne (p > 0.05).

- Scientist with goggles: We found no link between peach jelly beans and acne (p > 0.05).

- Scientist with goggles: We found no link between orange jelly beans and acne (p > 0.05).

- [Newspaper front page with a picture with three green jelly beans. There are several sections with unreadable text below each of the last three readable sentences.]

- News

- Green Jelly Beans Linked To Acne!

- 95% Confidence

- Only 5% chance of coincidence!

- Scientists...

Discussion

Those lazy are playing minecraft instead of curing cancer! Lynch 'em! Davidy22[talk] 00:35, 11 January 2013 (UTC)

- But I heard that Minecraft cures cancer... ! Investigate! <off: cheers from active group, boos from the control group> 178.99.81.144 19:31, 30 April 2013 (UTC)

- You know this experiment isn't conducted properly when you know you're in the control group. Troy (talk) 05:24, 4 March 2014 (UTC)

Um, I take it that whoever explained this comic can't tell the difference between < and >, as the fact that the confidence was changed wasn't mentioned in the article... 76.246.37.141 23:19, 20 September 2013 (UTC)

- Yes, I also figured out this today, green is lower than 0.05, on other colors there is just a confidence that it's NOT lower than 0.05. The newspaper did add this remaining 19 panels to 95%. The article is marked as incomplete, it needs a major rewrite.--Dgbrt (talk) 19:12, 3 October 2013 (UTC)

This explanation seems to misinterpret α. α is the chance of rejecting a true null hypothesis, a false positive. The 5% here is α. The correct interpretation of it is that if the null hypothesis is true, there is a 5% chance that we will mistakenly reject it. P in "P<0.05" is the chance that, if the null hypothesis is true, a result as extreme as, or more extreme than, the result we get from this experiment would occur. α is not the chance that, given our current data, the null hypothesis is true. We wish to know what that is, but we do not know. 108.162.215.72 08:52, 16 May 2014 (UTC)

In layman's terms, the comic appears to misrepresent what "95% confidence" (p <0.05) means. The statistic "p < 0.05" means that when we find a correlation based on data, that correlation will be a false positive fewer than 5 percent of the time. In other words, when we observe the correlation in the data, that correlation actually exists in the real world at least 19 out of 20 times. It does not mean that 1 out of every 20 tests will produce a false positive. This comic displays a pretty significant failure in understanding of Bayesian mathematics. The 5% chance isn't a 5% chance that any test will produce a (false) positive; it's a 5% chance that a statistical positive is a false positive. 108.162.219.196 (talk) (please sign your comments with ~~~~)

- No, you are deeply mistaken. The comic and the comment above you are correct in saying that if the null hypothesis holds, 1 out of every 20 tests will produce a false positive: this is by definition of the p-value. The ratio of true positives to false positives can range anywhere from 0 to infinity, and there is unfortunately no way to predict it. 108.162.229.121 09:46, 27 January 2015 (UTC)

The explanation appears somewhat confused, as correctly noted in a couple of comments above. The most common misunderstanding of p-values is that they represent how likely it is that the observed correlation (or observed unequal outcomes, or apparent trend) came from chance. That is not what they represent - they represent the probability that results at least as extreme as those observed would have arisen by chance: 1) in a fictional world where chance was the only potential cause of the correlation/inequality/trend (a world in which the null hypothesis was true) AND 2) only one hypothesis was being tested. In the real world, other factors may be more or less plausible as explanations, and it takes judgement, not stats, to determine how likely it is that chance is the best explanation. The green jelly beans theory fails in terms of biological plausibility, so it is >99% likely to be a chance observation (regardless of the p-value). Also, given the large number of hypotheses being tested, the probability of at least one of them producing a p-value <0.05 is much greater than 5%; indeed, with 20 simultaneous hypotheses, we would expect about one to be significant at the p<0.05 level, on average. There is a huge difference between the prospective probability of a single hypothesis satisfying the p<0.05 threshold, and the probability of being able to find a retrospective hypothesis for which p<0.05. This is a case of post hoc cherry picking - the newspaper's emphasis on green jellybeans is post hoc, with the colour of interest chosen after the results were already in. 108.162.250.163 (talk) (please sign your comments with ~~~~)

Is the "e" in "News" supposed to look like an epsilon (and the "w" a rotated epsilon)? 108.162.250.222 15:00, 15 December 2014 (UTC)

- It's probably just a stylistic thing. GrandPiano (talk) 04:00, 28 January 2015 (UTC)

In the comic, they mention that there is a link between green jelly beans and acne. However, assuming there to be no real link, there is 50% chance that this link was caused by 95% confidence that green jelly beans help with acne.Mulan15262 (talk) 03:14, 12 November 2015 (UTC)

This comic was referenced in the book "How Not to be Wrong" by Jordan Ellenberg. SilverMagpie (talk) 20:41, 21 January 2017 (UTC)

One thing I've gleaned from this is that they apparently opened a bag of Jelly Bellys or Gimball's and tested them in whatever order. I say this because they hit colors you'd never see in the smaller-palette brands of jelly beans (brown, teal, salmon) before some very common colors (red, yellow, black, green). If it were me, I would probably have started with a smaller-palette brand, since their colors affect everyone who eats jelly beans, and not just the ones who go for the gourmet brands. Nyperold (talk) 12:58, 6 July 2017 (UTC)

This kind of error is why you use ANOVA. 162.158.63.238 20:21, 29 October 2018 (UTC)

Want to reiterate that using "95% confidence" for statistical significance means having a threshold of <.05 for the p-value, and the p-value is the probability noise alone would have generated a change this big or bigger. If all you ran all day long were a/a tests (randomly assign people to two groups but give them the exact same experience) then 5% of your tests would be stat sig for any given metric. However, the chance of at least one false positive over 20 tests is only 64% (1-.95^20), not 100%. But of course, you also might get MORE than 1 false positive in 20 experiments, so the expected value for the NUMBER of false positives after 20 experiments IS 1. If that hurt your brain, welcome to probability!