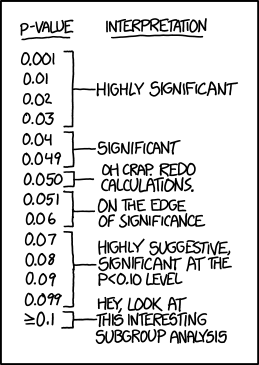

1478: P-Values


Title text: If all else fails, use "significant at a p>0.05 level" and hope no one notices.

Explanation

This comic plays on how scientific experiments interpret the significance of their data. The p-value is a statistical measure whose meaning can be difficult to explain to non-experts, and it is frequently misunderstood (even on this wiki) as the probability that the results happened by accident. Informally, a p-value is the probability under a specified statistical model that a statistical summary of the data (e.g., the sample mean difference between two compared groups) would be equal to or more extreme than its observed value.
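To make the informal definition concrete, here is a minimal Python sketch for the simplest possible model. It assumes a fair coin as the null model and uses a made-up result of 60 heads in 100 flips; `binomial_p_value` is a hypothetical helper written for illustration, not a library function. It adds up the null probability of every outcome at least as far from 50 heads as the observed one.

```python
from math import comb


def binomial_p_value(heads: int, flips: int) -> float:
    """Two-sided p-value for `heads` out of `flips` under a fair-coin model.

    Summary statistic: distance of the head count from its expected value.
    The p-value is the null probability of any outcome at least that far away.
    """
    expected = flips / 2
    observed_distance = abs(heads - expected)
    # Count, exactly, the outcomes that are at least as extreme as the observed one.
    extreme_outcomes = sum(
        comb(flips, k)
        for k in range(flips + 1)
        if abs(k - expected) >= observed_distance
    )
    # Each outcome sequence has probability 1 / 2**flips under the fair-coin null.
    return extreme_outcomes / 2 ** flips


# Hypothetical data: 60 heads in 100 flips of a coin assumed to be fair.
p = binomial_p_value(60, 100)
print(f"p = {p:.4f}")
```

With these invented numbers the p-value comes out at roughly 0.057, which would fall in the comic's "on the edge of significance" band. Nothing in the calculation says how likely the coin is to be fair; it only describes how surprising 60 or more (or 40 or fewer) heads would be if it were.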

By the conventional standard, analyses with a p-value below 0.05 are said to be 'statistically significant'. Although the difference between 0.04 and 0.06 may seem minor, its practical consequences can be major. For example, scientific journals are much more likely to publish statistically significant results, and in medical research, billions of dollars in sales may ride on whether a drug shows a statistically significant benefit. A result that fails to reach significance can ruin months or years of work, and might inspire desperate attempts to 'encourage' the desired outcome.

When performing a comparison (for example, testing whether listening to various types of music can influence test scores), a properly designed experiment includes an experimental group (people who listen to music while taking tests), a control group (people who take tests without listening to music), and a null hypothesis that "music has no effect on test scores". The test scores of each group are gathered, and a statistical test is performed to produce the p-value. In a nutshell, this is the probability that a difference in scores between the experimental and control groups at least as large as the one observed would occur by random chance if the experimental stimulus had no effect. For a more extreme example, an experiment could test whether wearing glasses affects the outcome of coin flips. There would likely be some difference between the results with and without glasses, and the p-value essentially tests whether this difference is small enough to be attributed to random chance, or whether it can be said that wearing glasses actually had a significant effect on the results.
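The music experiment can be sketched as a permutation test, one common way (among several) to turn two groups of scores into a p-value. This is a minimal sketch under stated assumptions: the scores below are invented, the summary statistic is the absolute difference in group means, and `permutation_p_value` is a hypothetical helper that estimates the p-value by random reshuffling rather than by enumerating every possible relabelling.

```python
import random


def mean(values):
    return sum(values) / len(values)


def permutation_p_value(group_a, group_b, n_shuffles=10_000, seed=0):
    """Monte Carlo estimate of a two-sided p-value for a difference in means.

    Under the null hypothesis the group labels carry no information, so
    reshuffling the pooled scores shows how often chance alone produces a
    difference at least as large as the observed one.
    """
    rng = random.Random(seed)
    observed = abs(mean(group_a) - mean(group_b))
    pooled = list(group_a) + list(group_b)
    n_a = len(group_a)
    at_least_as_extreme = 0
    for _ in range(n_shuffles):
        rng.shuffle(pooled)
        if abs(mean(pooled[:n_a]) - mean(pooled[n_a:])) >= observed:
            at_least_as_extreme += 1
    # Counting the observed labelling itself keeps the estimate above zero.
    return (at_least_as_extreme + 1) / (n_shuffles + 1)


# Hypothetical test scores, invented purely for illustration.
music = [78, 85, 82, 90, 74, 88, 81, 79]
control = [72, 80, 75, 83, 70, 77, 76, 74]
p = permutation_p_value(music, control)
print(f"p ≈ {p:.3f}")
```

Because the shuffles are random, the estimate varies slightly with `seed` and `n_shuffles`; more shuffles give a more stable answer.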

If the p-value is low, the null hypothesis is said to be rejected, and it can fairly be said that, in this case, music does have a significant effect on test scores. If the p-value is too high, the data are said to fail to reject the null hypothesis: this is not counter-evidence that music has an effect, but rather a sign that the data are insufficient and more results are needed. The standard and generally accepted significance threshold is p<0.05, which is why every value below 0.05 in the comic is marked at least "significant".
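One consequence of this decision rule is worth seeing directly: if the threshold is fixed at 0.05 in advance, then in experiments where the null hypothesis is actually true, about 5% will still be rejected by chance. The sketch below assumes a one-sample z-test with a known standard deviation of 1 and simulated data containing no real effect; `z_test_p_value` and `rejection_rate_under_null` are hypothetical helpers for illustration.

```python
import random
from statistics import NormalDist

STANDARD_NORMAL = NormalDist()


def z_test_p_value(sample, sigma=1.0):
    """Two-sided p-value for H0: population mean is 0 (sigma assumed known)."""
    n = len(sample)
    z = (sum(sample) / n) / (sigma / n ** 0.5)
    return 2 * (1 - STANDARD_NORMAL.cdf(abs(z)))


def rejection_rate_under_null(alpha=0.05, n_experiments=20_000, n=30, seed=1):
    """Fraction of simulated experiments that reject H0 even though H0 is true."""
    rng = random.Random(seed)
    rejections = 0
    for _ in range(n_experiments):
        sample = [rng.gauss(0.0, 1.0) for _ in range(n)]  # assumption: no real effect
        if z_test_p_value(sample) < alpha:  # threshold fixed before looking
            rejections += 1
    return rejections / n_experiments


rate = rejection_rate_under_null()
print(f"rejected a true null in {rate:.1%} of experiments")
```

In other words, the 0.05 threshold is a chosen false-alarm rate, not a guarantee that any single "significant" result is real.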

The chart labels a p-value of exactly 0.050 as "Oh crap. Redo calculations." because the p-value is very close to being considered significant, but isn't. The desperate researcher might redo the calculations in order to nudge the result under 0.050. For example, a problem can often be analysed with several slightly different and equally plausible methods, so by picking among them it can be easy to tweak the p-value. The same can be achieved if an error is found in the calculations or data set, or by erasing certain unwelcome data points. While correcting errors is usually valid, correcting only the errors that lead to unwelcome results is not. Plausible justifications can also be found for deleting certain data points, though again, doing this only to the unwelcome ones is invalid. All of these practices effectively introduce bias into the reported results.
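The effect of quietly dropping unwelcome data points can be simulated. In this hypothetical sketch (textbook z-test with a known standard deviation of 1, and simulated data in which the null hypothesis is true), the analyst removes up to three points that work against the hoped-for effect whenever the p-value misses 0.05. `significance_rate` is an illustrative helper, not an established procedure.

```python
import random
from statistics import NormalDist

STANDARD_NORMAL = NormalDist()


def z_test_p_value(sample, sigma=1.0):
    """Two-sided p-value for H0: population mean is 0 (sigma assumed known)."""
    n = len(sample)
    z = (sum(sample) / n) / (sigma / n ** 0.5)
    return 2 * (1 - STANDARD_NORMAL.cdf(abs(z)))


def significance_rate(max_removals, alpha=0.05, n_experiments=5_000, n=30, seed=2):
    """Share of null experiments reported as significant when the analyst may
    drop up to `max_removals` "unwelcome" points after seeing the p-value."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(n_experiments):
        sample = [rng.gauss(0.0, 1.0) for _ in range(n)]  # assumption: H0 is true
        significant = z_test_p_value(sample) < alpha
        removals = 0
        while not significant and removals < max_removals:
            # Drop the point pulling hardest against the current trend.
            trend = 1 if sum(sample) >= 0 else -1
            sample.remove(min(sample, key=lambda x: trend * x))
            removals += 1
            significant = z_test_p_value(sample) < alpha
        hits += significant
    return hits / n_experiments


honest = significance_rate(max_removals=0)
hacked = significance_rate(max_removals=3)
print(f"honest: {honest:.1%}, after selective removal: {hacked:.1%}")
```

With `max_removals=0` the rate stays near the nominal 5%; allowing a few post hoc removals pushes it far above that, even though there is no real effect to find.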

The value of 0.050 demanding "redo calculations" may also be a commentary on precision in the harder sciences. The rest of the chart implicitly accepts any further digits after the ones listed for each description, but a result of exactly 0.050 sits right on the threshold: there is a possibility that you erred in your calculations, so the actual result may be either higher or lower.
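If "0.050" is a rounded figure, the unrounded value could lie on either side of the threshold. A tiny sketch, assuming the value was reported to three decimal places; `true_value_interval` is a hypothetical helper:

```python
def true_value_interval(reported: float, decimals: int = 3):
    """Interval of unrounded values that display as `reported` at `decimals` places."""
    half_step = 0.5 * 10 ** -decimals
    return reported - half_step, reported + half_step


low, high = true_value_interval(0.050)
print(f"0.050 could be anything from {low:.4f} to {high:.4f}")
print(round(0.0498, 3), round(0.0503, 3))  # both display as 0.05
```

One value is significant and the other is not, yet both are written as 0.050, which is why extra precision (or a recheck) is the natural next step.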

Values between 0.051 and 0.06 are labelled as being "on the edge of significance". This illustrates the common use of "creative language" to qualify significance in reports, as a flat "not significant" result may look 'bad'. The validity of such usage is a contested topic, with debate centering on whether p-values slightly larger than the significance level should be noted as nearly significant or flatly classed as not significant. The logic of having such an absolute cutoff for significance can itself be questioned.
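One way to see why an absolute cutoff is questionable is to convert p-values back into the test statistic that produced them. Assuming a two-sided z-test (other tests give different numbers but the same picture), p = 0.049 and p = 0.051 correspond to almost identical |z| values, even though one is labelled "significant" and the other is not. `z_for_two_sided_p` is a hypothetical helper built on Python's `statistics.NormalDist`.

```python
from statistics import NormalDist


def z_for_two_sided_p(p: float) -> float:
    """|z| statistic that yields two-sided p-value `p` in a z-test."""
    return NormalDist().inv_cdf(1 - p / 2)


for p in (0.049, 0.050, 0.051):
    print(f"p = {p:.3f}  ->  |z| = {z_for_two_sided_p(p):.3f}")
```

The strength of the evidence changes smoothly across 0.05; only the label changes abruptly.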

Values between 0.07 and 0.099 continue the trend of qualifying language, calling the results "highly suggestive" or "relevant". This category also illustrates the 'technique' of adjusting the significance threshold after the fact. Appropriate experimental design requires that the significance threshold be set before the experiment, with no changes afterward to "get a better experiment report", as this would again insert bias into the result. Simply changing the threshold (e.g., from 0.05 to 0.10) can turn an experiment's result from "not significant" into "significant". Although a statement like "significant at the p<0.10 level" is technically true, it would be highly frowned upon in an actual report.
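Moving the threshold after seeing the data has a measurable cost. This sketch assumes the same simple setup as a textbook z-test (known standard deviation of 1, simulated data with no real effect) and compares an analyst who sticks to 0.05 with one who falls back to 0.10 whenever 0.05 is missed; `reported_significant_rate` is a hypothetical helper.

```python
import random
from statistics import NormalDist

STANDARD_NORMAL = NormalDist()


def z_test_p_value(sample, sigma=1.0):
    """Two-sided p-value for H0: population mean is 0 (sigma assumed known)."""
    n = len(sample)
    z = (sum(sample) / n) / (sigma / n ** 0.5)
    return 2 * (1 - STANDARD_NORMAL.cdf(abs(z)))


def reported_significant_rate(thresholds, n_experiments=20_000, n=30, seed=3):
    """Share of null experiments called significant when the analyst may pick
    any threshold in `thresholds` after seeing the p-value."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(n_experiments):
        sample = [rng.gauss(0.0, 1.0) for _ in range(n)]  # assumption: H0 is true
        p = z_test_p_value(sample)
        # "Significant at p<0.05", or failing that, "relevant at p<0.10".
        if any(p < alpha for alpha in thresholds):
            hits += 1
    return hits / n_experiments


fixed = reported_significant_rate([0.05])
moved = reported_significant_rate([0.05, 0.10])
print(f"fixed threshold: {fixed:.1%}, moved threshold: {moved:.1%}")
```

The analyst who moves the threshold reports a "finding" in roughly twice as many null experiments, which is exactly what a threshold of 0.10 means.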

Values of 0.1 or higher are usually considered not significant at all; however, the comic suggests taking part of the sample (a subgroup) and analyzing that subgroup without regard to the rest of the sample. Choosing to analyze a subgroup in advance for scientifically plausible reasons is good practice. For example, a drug to prevent heart attacks is likely to benefit men more than women, since men are more likely to have heart attacks. Choosing to focus on a subgroup after conducting an experiment may also be valid if there is a credible scientific justification, since researchers sometimes learn something new from experiments. However, the danger is that it is usually possible to find an arbitrary subgroup that happens to have a better p-value purely by chance. A researcher reporting results for subgroups with little scientific basis (the pill only benefits people with black hair, or only people who took it on a Wednesday, etc.) would clearly be "cheating". Even when the subgroup has a plausible scientific justification, skeptics will rightly suspect that the researcher might have considered numerous possible subgroups (men, older people, fat people, sedentary people, diabetes sufferers, etc.) and reported only those with statistically significant results. This is an example of the multiple comparisons problem, which is also the topic of 882: Significant.
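The multiple comparisons problem has a simple back-of-the-envelope form: if k independent tests are each run at level alpha and every null hypothesis is true, the chance that at least one comes out significant is 1 - (1 - alpha)^k. The sketch below checks this by simulation, assuming 20 disjoint subgroups (the same number of jelly bean colours tested in 882: Significant) and a z-test with a known standard deviation of 1. Real subgroups such as "men" and "older people" overlap, so the independence assumption is a simplification. `chance_of_some_significant_subgroup` is a hypothetical helper.

```python
import random
from statistics import NormalDist

STANDARD_NORMAL = NormalDist()


def z_test_p_value(sample, sigma=1.0):
    """Two-sided p-value for H0: population mean is 0 (sigma assumed known)."""
    n = len(sample)
    z = (sum(sample) / n) / (sigma / n ** 0.5)
    return 2 * (1 - STANDARD_NORMAL.cdf(abs(z)))


def chance_of_some_significant_subgroup(k=20, alpha=0.05, per_group=20,
                                        n_experiments=2_000, seed=4):
    """Simulate null experiments split into k disjoint subgroups and return how
    often at least one subgroup comes out 'significant'."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(n_experiments):
        # Assumption: no real effect anywhere, subgroups independent.
        subgroups = [[rng.gauss(0.0, 1.0) for _ in range(per_group)] for _ in range(k)]
        if any(z_test_p_value(group) < alpha for group in subgroups):
            hits += 1
    return hits / n_experiments


k, alpha = 20, 0.05
analytic = 1 - (1 - alpha) ** k  # independent tests, all nulls true
simulated = chance_of_some_significant_subgroup(k, alpha)
print(f"analytic {analytic:.3f}, simulated {simulated:.3f}")
```

With 20 subgroups, the chance of at least one spurious "discovery" is about 64%, even though the treatment does nothing in any of them.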

If the results cannot be considered significant by normal means, the title text suggests, as a last resort, inverting p<0.050 into p>0.050. This keeps the statement mathematically true but may fool casual readers, as the single-character change may go unnoticed or be dismissed as a typo ("no one would claim their results aren't significant; they must mean p<0.050"). Of course, the statement on its face is useless, as it is equivalent to saying that the results are "not significant".

Transcript

- [A two-column table where the second column selects various areas of the first column using square brackets.]

| P-value | Interpretation |
|---|---|
| 0.001, 0.01, 0.02, 0.03 | Highly Significant |
| 0.04, 0.049 | Significant |
| 0.050 | Oh crap. Redo calculations. |
| 0.051, 0.06 | On the edge of significance |
| 0.07, 0.08, 0.09, 0.099 | Highly suggestive, relevant at the p<0.10 level |
| ≥0.1 | Hey, look at this interesting subgroup analysis |

Discussion

IMHO the current explanation is misleading. The p-value describes how well the experiment output fits the hypothesis. The hypothesis can be that the experiment output is random. Low p-values point out that the experiment output fits well with the behavior predicted by the hypothesis. The higher the p-value, the more the observed and predicted values differ. Jkotek (talk) 08:54, 26 January 2015 (UTC)

- High p-values do not signify that the results differ from what was predicted, they simply indicate that there are not enough results for a conclusion. --108.162.230.113 20:13, 26 January 2015 (UTC)

I read this comic as a bit of a jab at either scientists or media commentators who want the experiments to show a particular result. As the significance decreases, first they redo the calculations, either in the hope that the result might have been erroneous and would be reclassified as significant, or to intentionally fudge the numbers to increase the significance. The next step is to start clutching at straws, admitting that while the result isn't technically significant, it is very close to being significant. After that, changing the language to 'suggestive' may convince the general public that the result is more significant than it is, while changing the parameters of the 'significance' value allows it to be classified as significant. Finally, they give up on the overall results and start pointing out small sections which may by chance show some interesting features.

All of these subversive efforts could come about because of scientists who want their experiment to match their hypothesis, journalists who need a story, researchers who have to justify further funding etc etc. --Pudder (talk) 09:01, 26 January 2015 (UTC)

- I like how you have two separate categories - "scientists" and "researchers" with each having two different goals :) Nyq (talk) 10:12, 26 January 2015 (UTC)

- As a reporter, I can assure you that journalists are not redoing calculations on studies. Journalists are notorious for their innumeracy; the average reporter can barely figure the tip on her dinner check. Most of us don't know p-values from pea soup. 108.162.216.78 16:44, 26 January 2015 (UTC)

- The press has at various times been guilty of championing useless drugs AND 'debunking' useful ones, but it's more to do with how information is presented to them than any particular statistical failing on their part. They can look up papers the same as anyone, but without a very solid understanding of the specific area of science there's no real way that a layman can determine if an experiment is flawed or valid or if results have been manipulated. Reporters (like anyone researching an area) at some point have to decide whom to trust and whom not to, and make up their own minds. It doesn't even matter if a reporter IS very scientifically literate, because the readers aren't and THEY have to take his word for it. Certainly reporters should be much more rigorous, but there's more going on than just 'reporters need to take a stats class'. Journals and academics make the exact same mistakes too: skipping to the conclusion, getting excited about breakthroughs that are too good to be true, and assuming that science and scientists are fundamentally trustworthy. And the answer isn't even that everyone involved should demand better proof, because that's exactly the problem we already have. What actually IS proof? Can you ever trust any research done by someone else? Can you even trust research that you were a part of? After all, any large sample group takes more than one person to implement and analyse, and your personal observations could easily not be representative of the whole. We love to talk about proof as being the beautifully objective thing, but in truth the only real proof comes after decades of work and studies across huge numbers of subjects, which naturally never happens if the first test comes back negative, because no one puts much effort into re-testing things that are 'false'. 01:29, 13 April 2015 (UTC)

This one resembles this interesting blog post very much.--141.101.96.222 13:26, 26 January 2015 (UTC)

STEN (talk) 13:33, 26 January 2015 (UTC)

- Heh. 173.245.56.189 20:06, 26 January 2015 (UTC)

See http://xkcd.com/882/ for using a subgroup to improve your p value. Sebastian --108.162.231.68 23:02, 26 January 2015 (UTC)

This comic may be ridiculing the arbitrariness of the .05 significance cutoff and alluding to the "new statistics" being discussed in psychology.[1]

108.162.219.163 23:06, 26 January 2015 (UTC)

The "redo calculations" part could just mean "redo calculations with more significant figures" (i.e. to see whether this 0.050 value is actually 0.0498 or 0.0503). --141.101.104.52 13:36, 28 January 2015 (UTC)

- Agreed. I first understood it as someone thinking that 0.05 is a "too round" value, and some calculations tend to raise suspicions when these values pop up. 188.114.99.189 21:28, 7 December 2015 (UTC)

- TL;DR

As someone who understands p-values, IMO this explanation is way too technical. I really think the intro paragraph should have a short, simplified version that doesn't require any specialized vocabulary except "p-value" itself. Then talk about controls, the null hypothesis, etc., in later paragraphs. - Frankie (talk) 16:52, 28 January 2015 (UTC)

- That is nearly impossible. I'm using the American Statistical Association's definition that "Informally, a p-value is the probability under a specified statistical model that a statistical summary of the data (e.g., the sample mean difference between two compared groups) would be equal to or more extreme than its observed value" until a better one comes. That said, the difficulty of explaining p-value is no excuse to use the wrong interpretation of "probability that observed result is due to chance".--Troy0 (talk) 06:27, 24 July 2016 (UTC)

There's an irony in the use of hair colour as a suspect subgroup analysis... hair colour can factor into studies. Ignoring the (probably wrong) common idea that redheads have a lower effectiveness rating for contraceptives, there do seem to be some suggestions that the recessive mutated gene has implications beyond hair colour. Getting sunburnt easily is one we all know, but how about painkiller and anaesthetic effectiveness? For example: http://healthland.time.com/2010/12/10/why-surgeons-dread-red-heads/ --141.101.99.84 09:15, 18 June 2015 (UTC)