1897: Self Driving

Title text: "Crowdsourced steering" doesn't sound quite as appealing as "self driving."

Explanation

This comic references the practice of using CAPTCHA responses to solve problems, particularly image classification. The most familiar examples are reCAPTCHA v2's fallback puzzle and hCaptcha's puzzle, both of which ask users to identify road features and vehicles. A reCAPTCHA version of this puzzle would ask the user to "check all squares containing a STOP SIGN" in one or more images derived from Google Street View.

Such an approach can produce the training set used to teach an artificial intelligence (AI) to better recognize or respond to similar stimuli. Google, the owner of reCAPTCHA, used it to identify house numbers in Street View to improve its mapping, and nowadays Google also uses CAPTCHAs to identify vehicles, street signs and other objects in Street View pictures. This might be a reasonable way to improve the performance of the AI in a self-driving car that responds to video input: people review images the car might encounter and flag the road signs and other features it should respond to. A similar approach, in which CAPTCHA answers are used to teach robots important things, later appeared in 2228: Machine Learning Captcha.
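As a rough illustration of how crowdsourced answers could become training labels, the sketch below combines several users' yes/no answers for each image by majority vote and keeps only the images with enough agreement. The function name, the data layout and the thresholds are all assumptions made for this example; it does not describe how reCAPTCHA actually processes responses.

```python
from collections import Counter


def aggregate_labels(responses, min_votes=3, min_agreement=0.8):
    """Turn per-image crowd answers into training labels by majority vote.

    responses maps an image id to a list of answers, e.g. True/False for
    "does this image contain a stop sign?". This layout is hypothetical.
    """
    labels = {}
    for image_id, answers in responses.items():
        if len(answers) < min_votes:
            continue  # too few answers to trust any of them
        answer, count = Counter(answers).most_common(1)[0]
        if count / len(answers) >= min_agreement:
            labels[image_id] = answer  # enough users agree on this answer
    return labels


responses = {
    "img_001": [True, True, True, True, True, False],  # strong agreement
    "img_002": [True, False, True, False],             # users are split
    "img_003": [False, False],                         # too few answers
}
print(aggregate_labels(responses))
```

Only the first image ends up with a label here; the split and under-answered images would simply be shown to more users before being used for training.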

However, the temptation might be to simply sidestep the hard problem of AI by having all instances 'solved' by "offloading [the] work onto random strangers" through CAPTCHAs. For example, this has been used to defeat CAPTCHAs themselves: people were asked to solve CAPTCHAs to unlock pornographic images in a computer game, while the solutions were relayed to a server belonging to cybercriminals. (See "PC stripper helps spam to spread" and "Humans + porn = solved CAPTCHA".)

Alarmingly, the developers of this "self-driving" car seem to have gone for the lazy approach. Instead of training an AI, they use the CAPTCHA answer in real time to check whether the car is about to arrive at an intersection with a stop sign. This information is critical, as failing to recognize the stop sign could cause an accident. The user is unlikely to respond to the CAPTCHA in time to avert disaster, and any interruption to the car's internet connection could prove fatal. Self-driving cars have become a recurrent theme on xkcd.

The system depicted is a Wizard of Oz experiment, in which a human secretly does the work of an apparently autonomous system (as in the "Mechanical Turk", after which a popular crowdworking platform is named). Actual self-driving cars, to the extent that they can use reCAPTCHA-style human detection systems, would instead involve an asynchronous decision system, in which the human answers arrive after the fact rather than in real time. Other synchronous decision systems which actually exist are political voting and money as a token of the exchange value of trade.
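The difference between the two kinds of system can be sketched roughly as follows. This is a toy model under stated assumptions, not a description of any real vehicle: the class and function names, the confidence threshold and the timings are all invented for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Frame:
    image_id: str
    model_confidence: float  # hypothetical model's confidence that a stop sign is present


def synchronous_decision(human_latency_s, time_to_intersection_s):
    """Comic-style: the car cannot act until a stranger has answered."""
    if human_latency_s > time_to_intersection_s:
        return "no answer in time"
    return "act on human answer"


@dataclass
class AsynchronousSystem:
    """The car decides on its own and queues uncertain frames for later review."""
    threshold: float = 0.9  # assumed cut-off for a "confident" prediction
    review_queue: list = field(default_factory=list)

    def decide(self, frame):
        # Frames the model is unsure about are saved so that humans can
        # label them later; the labels improve future versions of the model.
        if 1 - self.threshold < frame.model_confidence < self.threshold:
            self.review_queue.append(frame.image_id)
        # The driving decision itself never waits for a human.
        return "stop" if frame.model_confidence >= 0.5 else "continue"


system = AsynchronousSystem()
print(system.decide(Frame("frame_a", 0.6)), system.review_queue)
print(synchronous_decision(human_latency_s=8.0, time_to_intersection_s=5.0))
```

In the asynchronous version a slow or missing human answer only delays the next round of training, whereas in the synchronous version it leaves the car without a decision at the intersection.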

The title text suggests that this method could be called "crowdsourced steering". Crowdsourcing means distributing a problem over the internet so that many users can contribute their ideas and input. People would naturally suspect that this is considerably less safe than a car which is actually capable of driving itself: if the internet can barely steer a videogame character collectively, what chance does it have of steering an actual, physical vehicle?

This also suggests that Randall is somewhat skeptical of the current state of AI, doubting whether the technology really works the way we expect. It also comments on how what we call 'progress' often amounts to shifting our work onto other people.

As of November 2024, reCAPTCHA v2's image challenges can reportedly be solved reliably by automated image-recognition systems.

Transcript

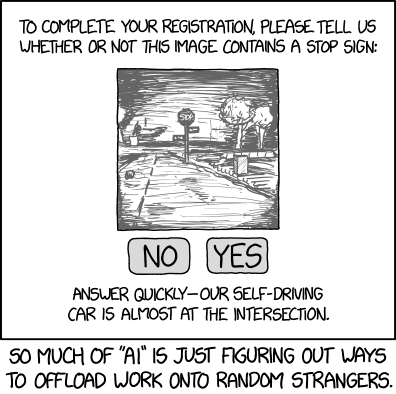

- [Inside a frame there is the following text above an image:]

- To complete your registration, please tell us whether or not this image contains a stop sign:

- [The square image is a drawing of a road leading up to a signpost with a hard-to-read word on the upper part of the eight-sided sign. The sign also has two smaller signs to its left and right with unreadable text. The image is grayscale and of poor quality, but trees and other obstacles next to the road can be seen. Darkness around the edges of the image could indicate that it is night and the landscape is lit only by a car's headlights.]

- Sign: Stop

- [Beneath the image there are two large gray buttons with a word in each:]

- No Yes

- [Beneath the buttons is the following text:]

- Answer quickly – our self-driving car is almost at the intersection.

- [Caption beneath the frame:]

- So much of "AI" is just figuring out ways to offload work onto random strangers.

Discussion

I think this is more a reference to various projects (like the ReCAPTCHA that protects this site) that use CAPTCHAs to digitise text and so on, by involuntarily crowdsourcing the typing out of the text by users trying to complete a login, rather than specifically about bots trying to circumvent anti-bot protection. It also brings to mind things like the Zooniverse projects, where volunteers contribute to the classification of astronomical bodies, identification of animals in game reserves, and so on, in that a computer is able to make a rough guess as to what the image is, but it takes a human intervention to make a reliable (and therefore useful) identification. Similarly, Google's (now discontinued) Picasa software had a 'People' function where it would attempt to guess who the people in your photos were - yet it would make so many false identifications, and make you go through saying 'Yes/No' to each of them, that it often felt as though you might just as well have classified them all yourself in the first place. 162.158.155.32 10:33, 2 October 2017 (UTC)

The comic clearly references techniques like reCAPTCHA that trick (1) unsuspecting people into doing the real work for free while they think they are solving a captcha, and (2) users of the final product who think it was created by an AI (or at least an OCR) when it was done "by hand". The comic is neither about teaching AIs, nor voluntary collaborative projects. Zetfr 11:42, 2 October 2017 (UTC)

- I think the comic is about the borderlands between knowingly volunteering your time and unknowingly supplying an AI with valuable information. When reading the caption my first thought was Google Translate, where the gamification / voluntary work is based on the texts submitted by a lot of unsuspecting users. When voluntarily contributing to the AI, I've been presented with some poor bloke's chat log, and another person's travel plans. 162.158.134.100 12:11, 2 October 2017 (UTC)

From what I have read, some image recognition AI projects use human input to refine their algorithms. Many AI algorithms also give probabilities of the correctness of the results. So in the domain of image recognition for self-driving cars it is conceivable that the computer would request human input to verify the interpretation of the scene. The comic is considering this possibility in a context that pokes fun at the field of AI in a rather scary real-world situation. Rtanenbaum (talk) 13:31, 2 October 2017 (UTC)

"From the creators of "Twitch Plays Pokemon" comes an all new reality series that'll blow you away! "Twitch Taxi!" Coming this Fall!" 162.158.62.153 13:38, 2 October 2017 (UTC)

- Twitch-driven car would crash in SECONDS. -- Hkmaly (talk) 03:52, 3 October 2017 (UTC)

- We need Twitch Plays Mario Kart. Right now. NealCruco (talk) 02:27, 4 October 2017 (UTC)

- Twitch plays GTA? (I'm talking about the original one from 1997. But the 3D versions could be... interesting, too.) Elektrizikekswerk (talk) 07:32, 4 October 2017 (UTC)

It seems to me the person viewing the image and registering some product is not an occupant of the "self driving" car being referred to in the comic. Rather, the self driving car (possibly containing passengers) is dependent on some random stranger on the Internet responding (correctly) to the question about the stop sign. Maybe this is obvious but when I first glanced at the comic, my interpretation was the occupants of the vehicle were being asked for the information. But after thinking about it a bit, I believe that any passengers in the car are blissfully unaware of their situation, likely assuming the car doesn't depend on input from someone in the next 5 seconds or so. Not really sure how to word all this in the explanation. But it seems like a business model Black Hat would employ. 172.68.58.23 19:54, 2 October 2017 (UTC) Pat

- There has never been any doubt in my mind that this CAPTCHA is being answered by someone having no relation to this car, not the passenger. I figure it's of the quiet "Psst! Help me out with this!" type of interaction, that the passenger is supposed to have no idea that the car is getting input, so as not to panic about their safety in this "self"-driving car. NiceGuy1 (talk) 04:19, 13 October 2017 (UTC)

This is a https://en.wikipedia.org/wiki/Wizard_of_Oz_experiment whereas actual self-driving cars, to the extent that they can use reCAPTCHA-like human detection systems, would produce an asynchronous decision system. Other synchronous decision systems which actually exist are political voting and money as a token of the exchange value of trade. 141.101.98.82 14:48, 3 October 2017 (UTC)

- I added a paragraph based on that comment to the explanation. 141.101.98.82 18:09, 3 October 2017 (UTC)

Does anyone else see a tornado in the distance off to the left? —jcz 162.158.186.12 22:05, 9 October 2017 (UTC)

- I suspect it's supposed to be a tree made blurry by being off to the side and caught by a crappy camera - same quality as a backup camera on a car - but it looks enough like it that I would consider it a possibility. Though I fail to see the point, since it isn't referenced and doesn't really relate to the rest of the comic. :) NiceGuy1 (talk) 04:19, 13 October 2017 (UTC)

Hold on, explainxkcd uses this sort of thing. 172.69.22.168 02:07, 6 July 2020 (UTC)

And now it seems to have become a real thing. Oops? 172.71.94.114 16:01, 8 November 2023 (UTC)

Those vehicles were supported by a vast operations staff, with 1.5 workers per vehicle. The workers intervened to assist the company’s vehicles every 2.5 to five miles, according to two people familiar with its operations.