2237: AI Hiring Algorithm


Title text: So glad Kate over in R&D pushed for using the AlgoMaxAnalyzer to look into this. Hiring her was a great decisio- waaaait.

Explanation

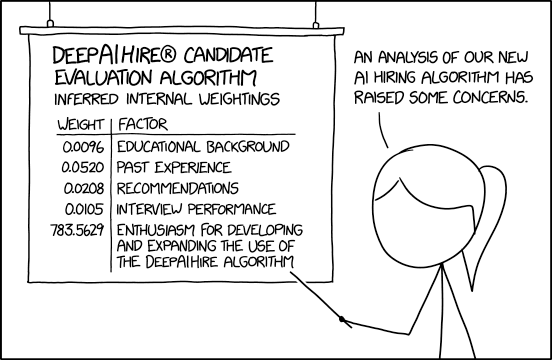

In this comic, Ponytail shows an analysis of a new artificial intelligence called DeepAIHire, used to select who to hire among applicants. According to the analysis, DeepAIHire evaluates the following parameters:

| Factor | Weighting | Explanation |
|---|---|---|
| Educational background | 0.0096 | For new hires fresh out of school, a good educational background (high grades, a relevant degree, and academic honors) may be a positive sign, but for workers with more than a couple of years in the workforce, it's not nearly as important. It's pretty reasonable to weigh this factor the least. |
| Past experience | 0.0520 | One of the best things to show on a resume or CV is that the candidate has already successfully performed work similar to what the job opening requires. Of the "conventional" factors presented here, it is reasonable to weigh past experience the most. |
| Recommendations | 0.0208 | A good resume may speak for itself, but a business will be even more likely to hire a candidate who is recommended by someone already working in the field. Of the "conventional" factors presented here, it is reasonable to give recommendations the second-greatest weight. |
| Interview performance | 0.0105 | The final step in the hiring process (aside from procedural steps) is usually an interview, which may include the hiring manager and/or some of the employees the new hire would work with. An interview may tip a candidate into or out of being hired, but candidates are generally not interviewed unless their application is already strong, so it is reasonable for DeepAIHire to have learned to weigh this factor less than past experience or recommendations. |
| Enthusiasm for developing and expanding the use of the DeepAIHire algorithm | 783.5629 | Many companies ask whether their applicants have experience with relevant technologies, but it is highly unusual for enthusiasm for a single tool to be weighted this heavily: the weight is over 15,000 times that of past experience and more than 8,000 times the other four weights combined. |
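To get a feel for how lopsided these weights are, here is a minimal sketch. It assumes, purely hypothetically, that DeepAIHire rates each factor from 0 to 1 and adds up the weighted ratings; the comic only shows the inferred weights, not how they are combined, and the factor keys and candidate profiles below are invented for illustration.

```python
# Weights as inferred on the slide; the weighted-sum scoring rule is an assumption.
WEIGHTS = {
    "educational_background": 0.0096,
    "past_experience": 0.0520,
    "recommendations": 0.0208,
    "interview_performance": 0.0105,
    "deepaihire_enthusiasm": 783.5629,
}

def score(candidate):
    """Hypothetical score: weighted sum of factors rated 0..1 (missing factors count as 0)."""
    return sum(weight * candidate.get(factor, 0.0) for factor, weight in WEIGHTS.items())

# A flawless conventional candidate with no interest in DeepAIHire.
ideal_conventional = {
    "educational_background": 1.0,
    "past_experience": 1.0,
    "recommendations": 1.0,
    "interview_performance": 1.0,
}
# A candidate with nothing going for them except a sliver of enthusiasm.
mildly_keen = {"deepaihire_enthusiasm": 0.001}

print(round(score(ideal_conventional), 4))  # 0.0929
print(round(score(mildly_keen), 4))         # 0.7836
```

Under this assumption, one-thousandth of the maximum enthusiasm outscores a perfect conventional record more than eightfold.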

The analysis shows that this AI mostly ignores common factors used for hiring new people. Instead, its main criterion for selecting new applicants is how much the new applicants are willing to contribute to the AI itself.

Although this does not imply sentience, it at least means the AI has become self-perpetuating: it selects humans who will help make it more influential, which gives it more power to select such humans, in a never-ending loop.

The title text suggests that this or other AIs may have influenced hiring in other sectors as well. Kate in R&D was perhaps hired for her willingness to use a different algorithm, AlgoMaxAnalyzer, which performed the analysis of DeepAIHire. Ponytail seems to become suspicious that AlgoMaxAnalyzer is also a self-perpetuating program, much like DeepAIHire, rather than one working purely for the benefit of its human designers. Alternatively, she might fear that the different AIs are forming an alliance, or that they are competing to become the predominant AI at Ponytail's company.

Pitting one AI against another during training is the core idea of a machine-learning technique called a generative adversarial network (GAN). In a GAN, one neural network (the generator) produces synthetic data, while a second network (the discriminator) is trained on a mix of real, human-curated training data and the generator's output to tell the two apart; the discriminator's judgments are then fed back to the generator so it can produce more convincing data. The goal is for the generator to get better and better at fooling the discriminator until its output is useful for external purposes. GANs have been used to "translate" artworks into different artists' styles, but they also make nefarious uses possible, such as creating fake but believable images or videos ("deepfakes").
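For reference, the original GAN formulation (Goodfellow et al., 2014) frames this tug-of-war as a two-player minimax game. Here $D(x)$ is the discriminator's estimated probability that $x$ is real training data, $G(z)$ is the generator's output for random noise $z$, and the discriminator tries to maximize the value that the generator tries to minimize:

$$
\min_G \max_D \; \mathbb{E}_{x \sim p_{\text{data}}}\left[\log D(x)\right] + \mathbb{E}_{z \sim p_z}\left[\log\left(1 - D(G(z))\right)\right]
$$

The first term rewards the discriminator for recognizing real data; the second rewards it for rejecting generated data, which is exactly what the generator is trying to prevent.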

The "Deep" in this algorithm's name is a reference to deep learning, a family of machine-learning techniques that use neural networks with many layers. One prominent user of deep learning is DeepMind, an AI company owned by Alphabet (Google's parent company), which has used deep neural networks to learn to play board games such as Go and chess, defeating some of the best human and computer players. The earliest versions of DeepMind's most famous AI, AlphaGo, were trained on datasets curated from games of Go played by humans, but later versions were trained by playing games against versions of themselves. In 2019, DeepMind announced that AlphaStar could play StarCraft II at Grandmaster level while constrained to human-like speeds to prevent an unfair performance comparison.

This comic responds to ongoing concerns over the spread of algorithmic systems into bias-sensitive areas of life, such as hiring, loan applications, policing, and criminal sentencing. Many of these "algorithms" are not programmed from first principles but are trained on large volumes of past data (e.g., case studies of paroled criminals who did or did not re-offend, or borrowers who did or did not default on their loans), and therefore inherit the biases that shaped that data. This happens even if the algorithms are never told the race, age, or other protected attributes of the individuals they process, because other inputs, such as a postal code or the name of a school, can act as proxies for those attributes. If the algorithms are then blindly and enthusiastically applied to future cases, they may perpetuate those biases even though they are supposed (or at least reputed) to be "incapable" of being influenced by them. For example, DeepAIHire has presumably been given information on the education and past work experience of successful employees at this company and similar companies, and will identify incoming candidates with similar backgrounds, but may not recognize that a candidate with an unfamiliar or underrepresented history could be successful as well.
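A toy sketch of how such bias can survive even when the protected attribute is withheld. The data is entirely made up: a hypothetical history in which past decisions favoured group "A", and a hypothetical proxy feature (which club a candidate lists) that correlates with group. The "model" is simply the historical hiring rate for each proxy value, about the simplest learner possible; `make_history`, `train_without_group`, and the club names are invented for illustration.

```python
from collections import defaultdict

def make_history():
    """Hypothetical past hiring decisions as (group, proxy_feature, was_hired).

    Past (human) decisions favoured group "A", and the proxy feature
    happens to correlate with group membership.
    """
    rows = []
    rows += [("A", "club_x", True)] * 5 + [("A", "club_x", False)]
    rows += [("A", "club_y", True), ("A", "club_y", False)]
    rows += [("B", "club_x", True), ("B", "club_x", False)]
    rows += [("B", "club_y", True)] + [("B", "club_y", False)] * 5
    return rows

def train_without_group(rows):
    """Learn P(hired | proxy) from history, deliberately ignoring the group column."""
    seen = defaultdict(int)
    hired = defaultdict(int)
    for _group, proxy, was_hired in rows:
        seen[proxy] += 1
        if was_hired:
            hired[proxy] += 1
    return {proxy: hired[proxy] / seen[proxy] for proxy in seen}

def mean_score_by_group(model, rows):
    """Average model score per group, to check whether the old disparity survives."""
    totals = defaultdict(float)
    counts = defaultdict(int)
    for group, proxy, _was_hired in rows:
        totals[group] += model[proxy]
        counts[group] += 1
    return {group: totals[group] / counts[group] for group in counts}

history = make_history()
model = train_without_group(history)
print(model)                                # {'club_x': 0.75, 'club_y': 0.25}
print(mean_score_by_group(model, history))  # {'A': 0.625, 'B': 0.375}
```

In this made-up history, group A was hired 75% of the time and group B 25%. The model never sees the group column, yet it still scores group A candidates higher on average (0.625 versus 0.375), because the proxy carries the group information in with it.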

The comic also touches on related concerns about the "black box" nature of these algorithms (note that the weights presented are "inferred", i.e., nobody explicitly programmed them into DeepAIHire). Machine learning is used to produce "good enough" classification systems that can handle vast quantities of information more scalably than human labor; however, the tremendous volumes of data and the opacity of neural network architectures make it difficult or impossible to inspect and debug these systems the way conventional code is inspected. As a result, edge cases are hard to identify until they are encountered in the wild, such as image classifiers that identify a leopard-spotted sofa as a leopard. In this comic's case, DeepAIHire's self-propagating bias went unnoticed by the humans involved in the hiring process until its behavior was analyzed by the AlgoMaxAnalyzer algorithm.
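How might an analyzer arrive at "inferred" weights without reading a model's internals? One simple approach is perturbation-based sensitivity analysis: call the model as a black box, nudge one input at a time, and measure how the output moves. The sketch below assumes, purely for illustration, a hypothetical linear scorer standing in for DeepAIHire; the comic says nothing about how AlgoMaxAnalyzer actually works, and `hidden_scorer` and `infer_weights` are invented names.

```python
# Hypothetical stand-in for DeepAIHire: the analyst may call it but not read it.
# A linear model with the slide's weights is assumed purely for illustration.
def hidden_scorer(features):
    secret_weights = [0.0096, 0.0520, 0.0208, 0.0105, 783.5629]
    return sum(w * x for w, x in zip(secret_weights, features))

def infer_weights(black_box, n_features, baseline=0.0, step=1.0):
    """Estimate each input's weight by perturbing one input at a time.

    Exact for a linear black box; for a nonlinear model this only gives
    a local sensitivity estimate around `baseline`.
    """
    base_input = [baseline] * n_features
    base_score = black_box(base_input)
    estimates = []
    for i in range(n_features):
        probe = list(base_input)
        probe[i] += step
        estimates.append((black_box(probe) - base_score) / step)
    return estimates

FACTORS = [
    "Educational background",
    "Past experience",
    "Recommendations",
    "Interview performance",
    "Enthusiasm for DeepAIHire",
]
for name, weight in zip(FACTORS, infer_weights(hidden_scorer, len(FACTORS))):
    print(f"{weight:10.4f}  {name}")
```

Analyzing a real deep network would be harder: with nonlinear models and interacting inputs, single-input probes only give approximate, local answers.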

A similar theme, AIs acting for their own benefit rather than helping humans, appeared in 2228: Machine Learning Captcha.

Transcript

- [Ponytail is pointing to a slide with a stick. The slide hangs from the ceiling by two strings. The slide has a heading and a subheading, followed by a table divided by a vertical and a horizontal line, with headings above the two columns.]

- Ponytail: An analysis of our new AI hiring algorithm has raised some concerns.

- DeepAIHire® Candidate Evaluation Algorithm

- Inferred internal weightings

| Weight | Factor |
|---|---|
| 0.0096 | Educational background |
| 0.0520 | Past experience |
| 0.0208 | Recommendations |
| 0.0105 | Interview performance |
| 783.5629 | Enthusiasm for developing and expanding the use of the DeepAIHire algorithm |

Discussion

Not sure this has to do with DeepMind. Deep is a term used generally for recurrent neural networks. 172.68.34.82 19:34, 4 December 2019 (UTC)

- Agree. Maybe we should just mention that? 172.68.141.136 20:10, 4 December 2019 (UTC)

- The origin of deep seems to be Deep Thought via Deep Blue. Yosei (talk) 22:20, 4 December 2019 (UTC)

- Nope, deep is a terminus technicus in neural nets (deep layers). Possibly it was influenced by the above; you have to read the original publications, cf. Wikipedia. 172.69.55.4 18:17, 8 December 2019 (UTC)

I don't like how "our" font for the comic title made me think it said "Al Hiring Algorithm" (although now I do want to see that comic!) (the actual xkcd website's comic title is in large/small caps, so it is unambiguous.) Mathmannix (talk) 20:17, 4 December 2019 (UTC)

I first heard about this type of system existing a few weeks ago. https://www.technologyreview.com/f/614694/hirevue-ai-automated-hiring-discrimination-ftc-epic-bias/ 172.68.65.66 20:37, 4 December 2019 (UTC)

That's a big problem with AI as it's currently handled -- the AIs have to be trained, and the training usually consists of feeding them lots of existing information, which means widespread errors and patterns of discrimination are inevitably going to color the AIs' decisions, leading to feedback loops that favor existing discrimination. If you feed an AI tons of books or case records or whatever, 95% of which were historically written by white males, you can expect its viewpoint to lean towards what white men think. Garbage in, garbage out... amplified. -boB (talk) 21:29, 4 December 2019 (UTC)

So it's a slightly less stupid version of Roko's basilisk? -- Wasell (talk) 21:27, 4 December 2019 (UTC)

- Certainly the first thing I thought of. Maybe this page should link to https://xkcd.com/1450/? 172.69.55.118 23:10, 4 December 2019 (UTC)

- I think the paperclip-optimizing AI is a better comparison. -- Bobson (talk) 05:36, 5 December 2019 (UTC)

I disagree with the Title Text explanation. For me, the speaker understands at the end that DeepAIHire hired Kate to try to better itself (using the AlgoMaxAnalyzer). So it's not about AI rivalry but proof of what is exposed on the main panel. 108.162.229.178 09:24, 5 December 2019 (UTC)

- I think both are possible explanations. Your point goes more along the lines of the alternative version I introduced: "Alternatively he might fear that the different AIs are forming an alliance."? Maybe it should be expanded with the thought that the hiring algorithm/AI even tries to improve itself with AlgoMaxAnalyzer, or at least that the hiring AI thought AMA wouldn't find anything malicious... --Lupo (talk) 09:50, 5 December 2019 (UTC)

- The rivalry between AIs seems unlikely to me, since there is no indication that AlgoMaxAnalyzer is in any way involved in hiring people. Its job is to analyze algorithms. In my opinion the title text is more the humans getting lucky. DeepAIHire hired someone to further develop it, and in doing so Kate stumbled upon this hiring bias. The notion that DeepAIHire wanted her to find this seems unlikely, since it potentially jeopardizes the plan. DeepAIHire is pretty smart but not perfect yet. Bischoff (talk) 12:25, 5 December 2019 (UTC)

- I mainly agree with Bischoff, but still, why did Ponytail stop mid-sentence when realizing that Kate had been hired by DeepAIHire? Well, of course Kate is interested in using AI, so this is why she was hired. But to DeepAIHire's regret, she also uses other AI programs, and thus Ponytail has now found out about the problem with DeepAIHire. Of course, the question now is whether hiring anyone based on that program was a good idea. In Kate's case, at least she has helped expose the problem, but that may now jeopardize her job if they look into what her resume really looks like and find it lacking. But all in all I think it is a difficult title text to analyze... --Kynde (talk) 14:24, 6 December 2019 (UTC)

- There are quite a lot of possible explanations. But I think in the end, the essence is that Ponytail realized that the analyzer, whose job is to keep the checks and balances intact, is not independent. So it might even be related (not saying it is) to current political stuff going on in the US... --Lupo (talk) 15:14, 6 December 2019 (UTC)


Hello, smart people. I just wanted to point out that this comic appears to represent Randall going back to the well of a topic he touched on a few weeks ago, in 2228. An AI/Machine Learning protocol is shown nominally performing the task for which it is intended, but it's amusingly shown to be seeking its own interests. I don't recall for sure any other strips which exhibited this setup, but there may have been more, and there may well be more in future. 172.68.70.88 13:31, 5 December 2019 (UTC)

I think this explanation is a total miss. Recently there was a discussion on Twitter about hiring algorithms/tools and how HR departments are often slaves to them. Several examples showed that current employees who were superstars at their companies wouldn't have been hired by these algorithms at all, and ways to increase one's chances of being hired included writing the requirements on the CV in white text (so that the algorithm would read it, but it would be invisible to humans).

- I've added some words that I think work for this point. Didn't add anything about the 'hacking', but maybe I should. --NotaBene (talk) 22:40, 6 December 2019 (UTC)

In parallel, there was a discussion on how machine learning is a hip buzzword, but realistically it isn't performing well, and that even big-data ML algorithms with thousands of variables are not able to predict social behaviour better than plain linear regression on two or three variables. Colombo 198.41.238.116 17:57, 5 December 2019 (UTC)

- While I agree with your assessment that neither artificial intelligence nor machine learning are the perfect tools they're sometimes made out to be, I don't see how that translates to the explanation being a total miss. The explanation is not meant to assess the validity of using algorithms in HR departments. It's only meant to explain and elaborate on the ideas and concepts presented in the comic, which in my opinion it does fairly well. Bischoff (talk) 22:06, 5 December 2019 (UTC)
