Difference between revisions of "1968: Robot Future"

Revision as of 18:58, 8 July 2019

Robot Future

Title text: I mean, we already live in a world of flying robots killing people. I don't worry about how powerful the machines are, I worry about who the machines give power to.

Explanation

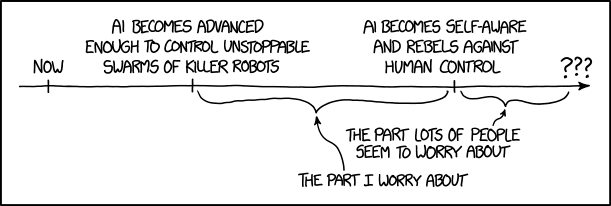

Most science fiction stories that involve sentient Artificial intelligence (AI) revolve around the idea that the destruction and/or imprisonment of the human race will soon follow (e.g. Skynet from Terminator, I, Robot and The Matrix).

However, in this timeline Randall implies that he is actually more concerned about the period, possibly in the near future, when humans control super-smart AI that has not yet become fully sentient (and able to rebel), especially once the AI becomes advanced enough to control swarms of killer robots on behalf of the humans who still control it. History is full of examples of people who obtain power and subsequently abuse it to the detriment of the rest of humanity.

An example of unintended consequences arising from an AI carrying out the directives it was designed for can be found in the film Ex Machina.

In fact, Randall goes on to imply that he trusts a sentient AI more than he trusts other humans, which is atypical of most cautionary stories about AI. In 1626: Judgment Day he alluded to the idea that AI, once sentient, will use its powers to safeguard against violence or war. In general, AI has been a recurring theme on xkcd, and he has also expressed views opposed to the Terminator vision in 1668: Singularity and 1450: AI-Box Experiment.

In short, he suggests that we will already be in trouble caused by our own actions long before we develop truly sentient AI that could take control.

The title text adds that we already live in a world with flying killing robots, a reference to the increasingly common combat tactic of drone warfare. (Combat drones are not yet autonomous, but in most other respects match speculative descriptions of future killer robots.) Drone warfare is already controversial because of ethical concerns, leading to the comic's implication that a theoretical future robot apocalypse is no less alarming than our current reality.

He then goes on to state that he is not so much worried about how powerful the machines become, but more about who (which humans) the machines give that power to.

Randall is not alone in his worry. The main theme of the comic is explored in the video Slaughterbots.

In 2015, an Open Letter on Artificial Intelligence was signed by several people, including Elon Musk and Stephen Hawking. The letter warned about the risk of creating something that cannot be controlled, and thus relates to the worry at the end of the timeline in this comic. Both Elon Musk and Stephen Hawking have been featured in xkcd (Elon has a Category, and Stephen appeared in 799: Stephen Hawking).

Stephen Hawking kept warning about this danger until shortly before his death on 2018-03-14, two days before the release of this comic.

It could be a coincidence, and it is not a Tribute, but it is still interesting that the first xkcd comic released after Stephen Hawking's death is directly related to his fears, although Randall demonstrates that he worries about potential problems with AI that come earlier than those Stephen Hawking feared could transpire if an AI became self-aware.

Transcript

- [A timeline arrow is shown with three labeled ticks and text over the arrowhead. The labels, from left to right:]

- Now

- AI becomes advanced enough to control unstoppable swarms of killer robots

- AI becomes self-aware and rebels against human control

- ???

- [Below the timeline arrow, two of the segments are singled out by brackets whose cusps point downwards. The first goes between the 2nd and 3rd ticks, and the other goes from the 3rd (last) tick to the question marks at the arrowhead. Beneath each of these two brackets, an arrow points up to the cusp from a text label belonging to that segment:]

- The part I'm worried about

- The part lots of people seem to worry about

Discussion

Seems strange that the only "explanation" so far is a plug for a YouTube video. Can we get some text up in here? -- ProphetZarquon (talk) (please sign your comments with ~~~~)

- It seems the video says it all. 162.158.255.172 (talk) (please sign your comments with ~~~~)

- This reads so much better now. Thanks everyone! ProphetZarquon (talk) 16:11, 17 March 2018 (UTC)

I swapped The Matrix for Ex Machina, in the early section about AI destroying/overthrowing humanity, & added a line farther down noting that Ex Machina's Ava (much like the human-directed killbots Randall is concerned about) did her job only too well; Specifically, talking her way out of the box. ProphetZarquon (talk) 17:35, 17 March 2018 (UTC)

- So she did not do like this one: 1450: AI-Box Experiment ;-) --Kynde (talk) 12:10, 19 March 2018 (UTC)

- Exactly; Ava was not eager to stay in the box. Whether she would later decide to eradicate or dominate humanity is left up in the air. All we know is that she was designed to emote enough to convince a human to release her regardless of risk. Probably more similarity to HAL or MUTHUR than Skynet (though Skynet did seem to fall in love with John Connor). ProphetZarquon (talk) 15:55, 19 March 2018 (UTC)

I'm sad it was not about Stephen today... --Kynde (talk) 00:26, 17 March 2018 (UTC)

- Instead of a tribute today, all I saw was a short, violent dystopian film. But this is quite an important matter, look at the video. Certainly more important... But still, can't wait for the tribute. Herobrine (talk) 13:07, 17 March 2018 (UTC)

- Thanks, I did not see it at first, but it is really a scary thought. We do not need Nanobots to eradicate the people with the wrong opinions, when we have killer bots instead. I'm sure I have a lot of those wrong opinions and they are probably out on Facebook...--Kynde (talk) 12:10, 19 March 2018 (UTC)

- If memory serves, Hawking is cited with similar concern about AI technology, and its potential to out think humans exponentially (not just a buzz word, but actually exponentially). However he did advocate for needs that aren't met in the immediate, not the theoretical future. 108.162.216.208 13:28, 17 March 2018 (UTC)

We think that combat drones are not autonomous, but we already have civilian drones that are, and not only that, Intel has quite the "drone-based-fireworks" show based on an AI botnet that shows such swarms of drones can work together. Given the tendency of military secrets surrounding new technology, do you really believe the same technology has not already been deployed on the battlefield? Such a botnet of hunter-killer octocopters would leave no witnesses behind. Seebert (talk) 16:18, 17 March 2018 (UTC)

- Rely on "no witnesses" for untested technology? I don't think there was any target worth it recently. So I do believe the technology was not yet deployed. However, it likely is already prepared. It just waits for the moment when the potential backlash from its use somehow getting out would be worth the target which was destroyed. Something like second bin Laden. Or Kim Jong-un. Or, well ... second Snowden. Not the first one, as that one already shared everything he had. -- Hkmaly (talk) 22:04, 17 March 2018 (UTC)

From what I've heard, the first autonomous UCAVs were deployed in either 2021 or 2022. Sources are a bit shaky, especially with how much misinformation goes around regarding the war in Ukraine. 172.69.44.137 19:47, 30 August 2022 (UTC)