1671: Arcane Bullshit

Title text: Learning arcane bullshit from the 80s can break your computer, but if you're willing to wade through arcane bullshit from programmers in the 90s and 2000s, you can break everyone else's computers, too.

Explanation

When fixing or improving an existing computer program, programmers sometimes need to read, understand, and improve old (and usually bad) code. The older a piece of code is, the less it tends to conform to modern programming practices, and the more likely it is to be "arcane bullshit" from the perspective of a 21st-century programmer.

Randall seems to feel that willingness to deal with "arcane bullshit" is a Catch-22 that prevents '80s arcane bullshit from being fixed. Someone completely unwilling to deal with arcane bullshit would lack the patience to learn how to program. Someone extremely willing to 'wade through an 80s programmer's arcane bullshit' is likely to find themselves compiling kernels instead of making useful code. Compiling is the act of converting human-readable source code into machine code that a computer can execute, and the kernel is the core of an operating system. Because a kernel is a large and complicated piece of software, compiling one can take anywhere from 60 seconds to multiple hours. As such, a programmer may end up spending all their time compiling kernels rather than writing their own code.

This comic could be a reference to changes in programming methodologies. The first computer programs, written in the 1940s and 1950s, were prone to becoming "spaghetti code", where the flow of execution would jump from one part of the program to another using a JUMP instruction, which carries no state information. While this method of programming can run very quickly, it makes program flow difficult to predict and can create interdependencies that are not obvious. In the BASIC language, JUMP was called GOTO, and courses for new programmers argued that using GOTO in all but trivial cases was a very bad idea. On the other hand, old programmers argued that calculated GOTO was a sexy way of programming.
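
The contrast between jump-driven and structured control flow can be sketched in a few lines. Python has no GOTO, so the first function below emulates BASIC-style line numbers with a `line` variable; the function names and the line numbering are illustrative assumptions, not taken from any real program.

```python
def sum_to_n_jump_style(n):
    """Sum 1..n with control flow driven by emulated line-number jumps."""
    total, i = 0, 1
    line = 10  # emulated BASIC line number (hypothetical numbering)
    while True:
        if line == 10:
            # 10 IF I > N THEN GOTO 40
            line = 40 if i > n else 20
        elif line == 20:
            # 20 TOTAL = TOTAL + I : I = I + 1
            total += i
            i += 1
            line = 30
        elif line == 30:
            # 30 GOTO 10
            line = 10
        elif line == 40:
            # 40 END
            return total


def sum_to_n_structured(n):
    """The same computation written as one structured loop."""
    total = 0
    for i in range(1, n + 1):
        total += i
    return total
```

Both functions compute the same sum, but in the first one the loop only exists implicitly in the pattern of jumps, which is what makes larger jump-driven programs hard to follow.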

To combat the problem, computer scientists have relied on increasing levels of abstraction and encapsulation, developing structured programming, procedural programming, and OOP (object-oriented programming).

In structured programming, you break your program into well-defined blocks of code with specified entry and exit points. By using a stack (a portion of memory dedicated to sequentially storing and retrieving contextual information and program state as blocks call other blocks, before returning), it is possible to call a block of code and then have that block return control (and any new information) to the point that called it once it has done what was requested.
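
As a rough illustration of the call-and-return mechanism described above, the sketch below runs named blocks using an explicit stack of return points. The `run` function, the `PROGRAM` table, and the `"call"` and `"emit"` operations are all hypothetical; real compilers and hardware keep much more on the stack (arguments, local variables, saved registers).

```python
def run(program, entry):
    """Run named blocks, using an explicit stack to remember return points."""
    stack = []   # each entry: (block name, index of the step to resume at)
    trace = []   # records the order in which "emit" steps executed
    block, index = entry, 0
    while True:
        steps = program[block]
        if index == len(steps):
            # End of the block: return to the caller, or stop if there is none.
            if not stack:
                return trace
            block, index = stack.pop()
            continue
        op, arg = steps[index]
        if op == "call":
            # Remember where to resume, then jump to the called block.
            stack.append((block, index + 1))
            block, index = arg, 0
        else:  # "emit"
            trace.append(arg)
            index += 1


PROGRAM = {
    "main": [("emit", "main: start"), ("call", "helper"), ("emit", "main: end")],
    "helper": [("emit", "helper: working")],
}

trace = run(PROGRAM, "main")
```

After the run, `trace` lists the steps in execution order: the caller's first step, the helper's step, then the caller's remaining step, showing that control came back to the point just after the call.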

Very quickly it was decided to mark these blocks of code as functions or procedures, making it trivial for the compiler to know how to call and process the blocks, and making it easier for the programmer to edit them without having to keep track of the minutiae of how they are handled. Languages that made this a focus include Pascal, Modula, and C.

Structured and procedural programming were well entrenched in the '80s. Most systems programming was done in mid- or low-level languages, which improve performance by giving the knowledgeable programmer explicit control of the data structures in a program rather than shrouding them in abstraction. But because these languages work at a lower level, the code requires many explicit steps to do seemingly easy things like drawing a box on a screen. This makes it easy for an inexperienced programmer to introduce errors, and harder to understand what needs to happen (ultimately, the flipping of specific bits within the graphical RAM), compared to a high-level command to just "draw a box" with given qualities and have the system work out exactly how that needs to be done.
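
To make the "flipping of specific bits" concrete, here is a minimal sketch that assumes a hypothetical 16x8 monochrome display with one bit per pixel, stored row by row in a `bytearray` standing in for video RAM. The layout, the names, and the bit ordering are assumptions for illustration, not a description of any particular machine.

```python
WIDTH, HEIGHT = 16, 8          # hypothetical 1-bit display, 16x8 pixels
BYTES_PER_ROW = WIDTH // 8


def set_pixel(vram, x, y):
    """Low level: turn on one pixel by setting a single bit in video RAM."""
    offset = y * BYTES_PER_ROW + x // 8
    vram[offset] |= 0x80 >> (x % 8)   # assumed: leftmost pixel is the high bit


def draw_box(vram, left, top, right, bottom):
    """Higher level: outline a rectangle, hiding the bit arithmetic."""
    for x in range(left, right + 1):
        set_pixel(vram, x, top)
        set_pixel(vram, x, bottom)
    for y in range(top, bottom + 1):
        set_pixel(vram, left, y)
        set_pixel(vram, right, y)


def render(vram):
    """Turn the bit pattern back into text so the result can be inspected."""
    return [
        "".join(
            "#" if vram[y * BYTES_PER_ROW + x // 8] & (0x80 >> (x % 8)) else "."
            for x in range(WIDTH)
        )
        for y in range(HEIGHT)
    ]


vram = bytearray(BYTES_PER_ROW * HEIGHT)
draw_box(vram, 2, 1, 13, 6)
rows = render(vram)
```

A caller of `draw_box` never sees the offsets and bit masks, while someone maintaining `set_pixel` has to get every one of them right.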

Although the idea of OOP was around as early as the 1950s, it was not implemented in a widespread fashion until the 1990s. OOP encapsulates data structures inside objects, together with the functions that operate on them, so rather than manipulating a variable directly you call the object and tell it to do something to (or with) its elements. This additional level of abstraction can make it a lot easier to work on varied data, if implemented with the correct handlers. It can also protect program data from unexpected changes by other sections of the program, as most elements can only be changed by the encapsulating code, and transfer of information must be implemented in even higher levels of program management.
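
A small sketch of that encapsulation idea follows, using a hypothetical `Counter` class. Note that Python enforces privacy only by convention (the leading underscore), so this shows the style rather than a hard guarantee.

```python
class Counter:
    """A tiny object that guards its own data."""

    def __init__(self):
        self._count = 0   # leading underscore: private by convention only

    def increment(self, amount=1):
        """The only sanctioned way to change the count."""
        if amount < 0:
            raise ValueError("a counter can only go up")
        self._count += amount

    @property
    def value(self):
        """Read-only view of the count; there is no setter."""
        return self._count


counter = Counter()
counter.increment()
counter.increment(4)
```

Other code asks the object to change itself through `increment`, and the object can refuse requests that would leave its data in an invalid state.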

Because code in the '80s was typically written at a much lower level, it can be hard to work with for programmers used to having the language and libraries silently do much of the work for them. Programmers of that era would also often hard-code expectations into their source code, such as the number of files that can be open at once or the size of the operating system's disk buffers. This means that if you need the program to handle a larger file, you might need to recompile it after finding and changing all the places in the code that assume the smaller maximum file size. For graphical output, where older code relied on direct access to a predictably constant configuration of video RAM, modern programs are expected to discover the extent of the graphics (e.g. the size of the 'screen' array, the bit depth of each pixel, even the endianness of the data) as the program loads, or even to reconfigure dynamically while the program is running, such as when the user resizes the program's GUI window and changes the available 'virtual screen' canvas.
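
The difference between a hard-coded limit and one discovered at run time might look like the sketch below. `MAX_LINE` and both function names are hypothetical, and the fixed-size version truncates silently only to mimic one common failure mode of such code.

```python
MAX_LINE = 8   # hypothetical compile-time limit baked into the source


def read_record_fixed(data):
    """Copy a record into a fixed-size buffer, silently dropping the rest."""
    buffer = bytearray(MAX_LINE)
    n = min(len(data), MAX_LINE)
    buffer[:n] = data[:n]
    return bytes(buffer[:n])


def read_record_dynamic(data):
    """Size the buffer from the input at run time instead."""
    buffer = bytearray(len(data))
    buffer[:] = data
    return bytes(buffer)


record = b"hello, world"
fixed = read_record_fixed(record)
dynamic = read_record_dynamic(record)
```

Handling longer records with the first version means editing `MAX_LINE` (and every other place that quietly assumes it) and rebuilding, whereas the second adapts to whatever it is given.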

As such, many people are unwilling to surmount the massive barrier to learning how to wrangle the very detailed old code. This group is on the left of the graph. On the right are people who have gotten so used to the tools and conventions of the '80s that they spend all of their time adjusting and recompiling the kernel of their computers to match their current needs, instead of actually creating new programs.

In the center is Cueball, presumably representing Randall, who has learned enough to change how the code operates but not enough for his changes to produce a working fix for whatever emerging issue he might be trying to solve.

As programs age, they often lose support from the initial project head and die out, no longer supported on new computers. So, as the title text says, breaking everyone else's computers as well requires learning code from the '90s and later.

This could also be a comment on hacking and on the advent of the internet, whose underlying technologies (TCP/IP, HTML, CSS, PHP...) became widespread in the '90s and 2000s. Computers in the '80s were typically standalone, so what you learn from that era can only be applied to your own machine. To break everyone else's, you need to be in the position of (mis)understanding networking code.

The title text might be a reference to various recently discovered security vulnerabilities in open-source software. In some cases, underskilled programmers have provided flawed code for critical infrastructure with very little review, resulting in global computer security disasters. Randall described some of these in 424: Security Holes (2008), 1353: Heartbleed and 1354: Heartbleed Explanation (2014). Other recent examples include Shellshock and vulnerabilities in the Linux kernel involving the perf and keyrings subsystems.

Transcript

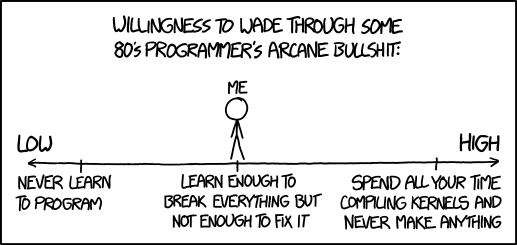

- [A horizontal graph with arrows pointing left and right with labels. The line has three ticks, one towards each end and one in the middle, above which Cueball is drawn. Below each tick there is a caption. There is a caption at the top of the panel:]

- Willingness to wade through some 80's programmer's arcane bullshit:

- [Left end:] Low

- [Left tick:] Never learn to program

- [Above Cueball:] Me

- [Center tick:] Learn enough to break everything but not enough to fix it

- [Right end:] High

- [Right tick:] Spend all your time compiling kernels and never make anything

Discussion

I was obsessively refreshing XKCD and the new comic popped up. Then I did the same on ExplainXKCD to make an explanation. Here's my first rough-draft attempt. Papayaman1000 (talk) 13:34, 22 April 2016 (UTC)

Your explanation confuses OOP with structured programming. Svorkoetter (talk) 15:03, 22 April 2016 (UTC)

Developing a kernel is not the same as compiling a kernel. You would, for example, rebuild a Linux kernel after you've added a module, or changed some parameters. Also, the purpose of object-oriented programming is not to solve the problem of spaghetti code. (That problem was solved by structured programming.) It's to enforce principles of abstraction, information hiding and modularity. Krishnanp (talk) 15:20, 22 April 2016 (UTC)

I modified the explanation on OOP to include Structured & Procedural language code and briefly described the 80's era of low level languages. Digital_Night (talk) 15:41, 22 April 2016 (UTC)

OK, I rewrote the kernel compiling explanation to explain why someone would recompile a 80's era kernel. Modular kernels sure are nice! Digital night (talk) 15:50, 22 April 2016 (UTC)

Could this be a reference to the large amount of open-source projects using C (an arcane bull* language from the 70s/80s that need 10000 lines ./configure scripts to work) ? 108.162.219.79 16:38, 22 April 2016 (UTC)

T.M.I. 162.158.222.231 18:54, 22 April 2016 (UTC)

I think this comic refers to keeping or fixing programs over 30 years old and their "bs" factor. The most extreme case would be something like gentoo, where you have to compile everything first before doing anything productive. (Sorry gentoo users, didn't mean to start a flame war) 103.31.5.240 (talk) (please sign your comments with ~~~~)

- While installing applications on gentoo takes longer because it's being compiled, it's the time of the COMPUTER. You can do something else while it's compiling. -- Hkmaly (talk) 14:16, 23 April 2016 (UTC)

I'm afraid the explanation misses the point completely ... Rather than excursion to programming techniques and languages, the sociology behind that should be focused on. Programmers were considered mages (hence "arcane", or do I get the meaning of it wrong, not being native speaker?), and don't forget also that 80's were the time when the GNU project started. The title text then may refer to changing standards in (released) software quality - I remember my ZX Spectrum crashing because of overheating, but not because of software problems. And its system was written in assembler that is kinda badmouthed by the current version of the explanation, in favour of sophisticated languages. Then, with DOS, a problem emerged from time to time, but not a big deal. Then, with Windows 95, the system crashed daily ... Nowadays, programmers just throw their bullshit code on users, and break "everyone else's computer", also thanks to Internet etc. It has very little to do with programming language choice and jumps/gotos. - 141.101.95.123 06:58, 23 April 2016 (UTC)

- Agree, the "breaking everyone else's computer" is definitely about low code quality. It's true that programming with "goto" is harder, but maybe that was the reason only people who knew how to program were doing it. Nowadays, everyone thinks he can program, but based on the number of bugs it's obviously not true. -- Hkmaly (talk) 14:16, 23 April 2016 (UTC)

The current explanation completely misses the point and honestly should be taken down -- no offense to the original writer. Arcane BS here means "wizard-like stuff" in the sense of what programmers do which is different from what users would do. Where regular users just buy a computer and never open it, the arcane programmer might just actually open the computer and start swapping parts in and out, with or without a precise grasp on what s/he's doing. Same goes on the software part where a user might just run Windows in the 80s since it comes off-the-shelf and one never modifies it, whereas the arcane programmer might go through the effort of installing a UNIX-like system such as Minix and recompile the kernel to adjust parameters, add new modules, all of which involve complicated command lines that look like insane arcane magic to normal users. This is what is called "hacking" in the sense of the original meaning of the word "hacker" as you can find in The Jargon File and that word "hacking" really meant tinkering -- the word "cracker" was coined after misuse of the former by the media.

It was tinkering for the sole purpose of tinkering, which is why the comic says this accomplishes nothing. It is however an excellent way to learn how computers really work, something, again, that normal end-users don't care for, thus the "arcane" aspect.

Also note that in the 80's there was no Linux (the project started in 1991) and no GNU (the project started in the mid 80s with the manifesto but GNU had no kernel at first till it got combined with Linux to form the now-ubiquitous GNU/Linux.)

The tag line is easily explained: nowadays hacking (tinkering) on Linux is a common thing; the arcane hacking happens at the secops level. Ralfoide (talk) 17:02, 23 April 2016 (UTC)

The bullshit is a reference to the whole stack of all software. Back in the 1980's, it was still possible for completely new software architectures to be started. GNU, NT, BSD, X-Windows, NeXTSTEP, C++, all started in the 1980's, all still dominant in some way. And it is all bullshit. Slow, insecure, badly architected, and we can't fix it without breaking everything. Heck, Windows 10 is still releasing security updates for kernel vulnerabilities in its font renderer, and Linux is bloating from its multitude of new features and its No Breaking Applications rule. X-Windows has a whole lot of vestigial functionality that nobody uses anymore, and lacks functionality that its own maintainers want to use, but its successor Wayland is taking a long time to come into use.

BeOS showed what you could do for performance if you ignored backwards compatibility with backwards architectures, but it was too little, too late, and not designed for a networked world. Also, BeOS and its poorly funded open-source imitator Haiku are written in C++, and not C++14, at that.

Which brings me to the programming languages. C++ is deliberately obtuse so it can be compatible with programs written for previous versions of C++, even those written as if C++ were C with classes. C is known for having no type safety, no memory safety, no thread safety; so C++ also lacks those unless somebody imposes strict discipline on the programmer. Java, JavaScript, C#, PHP, Python, Perl, were all written as alternatives, rejecting some aspect of C++, but they all use the same ideas of modularity and execution as C++, and they are all implemented in some combination of C, C++, and assembly(!). To be fair, though, until a major organization made it a priority (Mozilla Rust or Google Go), there just has been no high-performance alternative to C++.

Since we all have limited time and money to deal with bullshit, we just keep using it. Decade 162.158.255.69 21:46, 23 April 2016 (UTC)

So, as someone who's taken like one course on Python ever, I'd like to offer my perspective on what I think your average "dumb person" wants to know. It's not the details of how programming in the 40's worked - all that does is reinforce the fact that it's "Arcane Bullshit", which is pretty obvious because every language is pretty close to arcane bullshit. It's more about why it's funny (or possible) to break more things with a better understanding of code. The current explanation provides about two sentences on that - which helps ("okay, so you wouldn't even try if you're not familiar"), but I still feel like I'm missing something. Is it a programmer trope that people try to fix things and blow things up (like in XKCD 349)? 162.158.255.163 18:27, 24 April 2016 (UTC)

- Not REALLY, no. It's more that anyone who has programmed more than a little can quickly see how long and complex programs can get, especially if it was good enough and functional enough to still be around 30 years later to be getting such an update. Then, looking through the code like this, unless you programmed it in the first place, you likely would have to learn both the language AND this particular code at the same time. It's a monumental task that even an experienced and talented programmer could easily miss something (or get something wrong), at which point Murphy's Law takes over and makes sure the mistake turns out to be a doozy. :) Sometimes I've looked at programs i wrote myself 25 years ago, and it looks completely foreign to me, even though I wrote it myself, alone! - Niceguy1 108.162.218.47 03:58, 27 April 2016 (UTC) I finally signed up! This comment is mine. NiceGuy1 (talk) 09:49, 9 June 2017 (UTC)

As a bullshit programmer (55 years, FORTRAN) who loathes Java with a vengeance I'd like to point out that OOP merely relocated the bullshit to the classes interplay. Any program that doesn't have to navigate a rocket to the moon or suchlike I could write in FORTRAN with 10% of code and time. 162.158.202.142 20:42, 26 April 2016 (UTC)

This is a one-panel comic. The explanation should not be this long. Lackadaisical (talk) 13:39, 14 May 2016 (UTC)

I believe this is the last comic to use this much profanity. Correct me if I'm wrong.108.162.216.18 20:10, 29 November 2019 (UTC)

Honestly the first connection i made with "wade" and the 80s was ready player 1 108.162.245.65 16:47, 2 December 2021 (UTC)

me too

edit: i had to try twice because i forgot there was a captcha :( An user who has no account yet (talk) 13:38, 5 September 2023 (UTC)