Difference between revisions of "1958: Self-Driving Issues"


Latest revision as of 13:20, 7 September 2025

Self-Driving Issues

Title text: If most people turn into murderers all of a sudden, we'll need to push out a firmware update or something.

Explanation

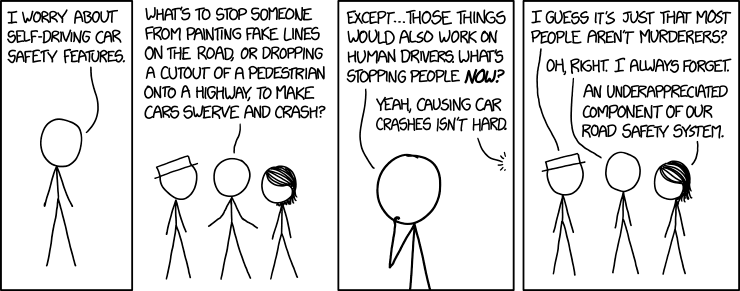

Cueball explains that he is worried about self-driving car safety, noting that it may be possible to fool the vehicles' sensory systems. This is a common concern with AIs: since they process input analytically and have little to no capacity for abstract thought, they can be fooled by things a human would immediately recognize as deceptive.

However, Cueball quickly realizes that his argument doesn't hold up when comparing AI drivers to human drivers, as both rely on the same guidance framework: human drivers follow signs and road markings and must obey the laws of the road just as an AI must. Therefore, an attack on the road infrastructure could affect both AIs and humans. That said, humans and AIs are not equally vulnerable. For example, a fake sign or a cardboard cutout of a child might be obviously fake to a human yet still fool an AI. A creative attacker could put up a sign with CAPTCHA-like text that would be readable by humans but not by an AI.

Cueball then wonders why, if that is the case, nobody already tries to fool human drivers the way they might try to fool an AI. Megan and White Hat point out that most road safety systems depend on people not deliberately sabotaging them simply to cause accidents.[citation needed]

The theme of human fear and overreaction to the advent of more or less autonomous robots also features in 1955: Robots. Self-driving cars are a recurring subject on xkcd. A variation on the idea that humans are mentally "buggy" is suggested in 258: Conspiracy Theories, though in that case divine intervention is requested to implement the "firmware upgrade".

The title text continues this line of reasoning, noting that if most people did suddenly become murderers, the AI might need to be upgraded to deal with the presumed increase in people trying to cause car crashes by fooling it. This is a somewhat narrowly focused solution, given that a world full of murderers would probably have far bigger problems. Since Megan treats human behavior as a 'component' of the road safety system, the title text might also be suggesting a firmware update for the "buggy" people who have all become murderers, one that would fix their murderous ways. We are not currently able to create and apply instantaneous firmware updates to large populations; as far as we know, even combining all the behavioral modification tools at our disposal (psychiatry, cognitive behavioral therapy, hypnosis, mind-altering drugs, prison, CRISPR, etc.) would not be enough for such a massive undertaking. Alternatively, the update might refer to the cars' own firmware, since it controls systems such as the brakes and could therefore cause or prevent many deaths.

Transcript

- [Cueball is speaking while standing alone in a slim panel.]

- Cueball: I worry about self-driving car safety features.

- [In a frameless panel, it turns out that Cueball is standing between White Hat and Megan, holding his arms out toward each of them as he continues to speak.]

- Cueball: What's to stop someone from painting fake lines on the road, or dropping a cutout of a pedestrian onto a highway, to make cars swerve and crash?

- [Zoom in on Cueball's head as he continues to contemplate the situation, holding a hand to his chin while looking in White Hat's direction. Megan replies from off-panel behind him.]

- Cueball: Except... those things would also work on human drivers. What's stopping people now?

- Megan (off-panel): Yeah, causing car crashes isn't hard.

- [Zoom back out to show all three of them again.]

- White Hat: I guess it's just that most people aren't murderers?

- Cueball: Oh, right. I always forget.

- Megan: An underappreciated component of our road safety system.

Trivia

- In the original version of the comic, the title text misspelled "murderers" as "muderers". This was later fixed.

- This comic appeared one day after the Electronic Frontier Foundation co-released a report titled The Malicious Use of Artificial Intelligence: Forecasting, Prevention, and Mitigation. The report discusses ways of subverting AI systems, such as those used in self-driving cars, and ways of mitigating such attacks. However, it focuses largely on highly technical means of subversion. Randall spoofs the report's tenor with his mundane subversions and over-the-top mitigations.

Discussion

I assume the off-panel speaker is Megan, based on their positioning, but not sure what the ruling on the ambiguity is. PvOberstein (talk) 05:47, 21 February 2018 (UTC)

I made a note about the typo in the title text. Also, weird question, does the "created by a BOT" tag mean that the explanation was written by an AI? Or is it a joke I'm missing for some reason? Sorry, kind of a dumb question I guess. 09:04, 21 February 2018 (UTC)

- Afaik the "created by a BOT" part is the default text when the bot which is crawling xkcd for a new comic inserts the comic here (and an empty explanation). In the past that part was often deleted when the first real edit was made. Some comics ago a habit evolved to actually change that line in relation to the comic at hand (e.g. "created by a SELF-DRIVING CAR" would be fitting here). Elektrizikekswerk (talk) 11:23, 21 February 2018 (UTC)

Could the firmware update be for the humans, because they are obviously malfunctioning in the scenario? Sebastian --162.158.90.30 09:46, 21 February 2018 (UTC)

- Of course, that's the joke. It would be really impractical to install such a firmware update; there are about 7.5 billion people on Earth, many of whom we don't even have access to. I'd also suspect that most people would fight back if you tried shoving a USB flash drive into them. 162.158.93.9 11:24, 21 February 2018 (UTC)

- Yeah, but how many of those 7.5 billion people are a safety risk for self-driving cars, though? LordHorst (talk) 12:59, 21 February 2018 (UTC)

- My best guess is 12. 162.158.93.9 14:50, 21 February 2018 (UTC)

- But you wouldn't use a flash drive - it would be an OTA update. 162.158.155.26 11:53, 22 February 2018 (UTC)


- Funny, but as the explanation now states, it is the cars that need to be updated to take this new behavior into account. It is unclear if the cars should behave like humans, as Cueball mentions they already do, and if so should try to use the knowledge of human behavior to save lives, or if they should behave like humans and try to take lives! :-D --Kynde (talk) 13:14, 21 February 2018 (UTC)

- While this version occurred to me, I feel confident that the INTENDED meaning is to go within the context of this comic, that is, that the murderers are the non-drivers Cueball is afraid of, and both human and AI drivers alike still would prefer not to crash (especially seeing as the most available murder victims would be the people in the car, both passengers and possible driver). Still, the car-owners-want-to-kill version is amusing. LOL! NiceGuy1 (talk) 05:33, 23 February 2018 (UTC)

- To me it's obvious, and the explanation overthinks it. The natural "voice" of the comic here is Cueball. He surmises that if people generally become murderers, they would need a firmware update "or something". Obviously he is taking "people" to be some sort of product, and the update to be the manufacturer's obligation. There is obvious religious precedent for this, whether the "firmware update" involves unleashing floods, burning cities with fire, sending messiahs or revealing truths to prophets... and that is (imho) the joke: Cueball thinks an update is needed, then he generalises that to "or something" as he starts to realise (or as the reader starts to realise, despite Cueball's obliviousness) the enormity of the consequences of such a possible update. 108.162.229.166 03:42, 3 March 2018 (UTC)

- Agree with the interpretation, but I think floods, fire, etc. would be more 'retiring hardware' than a 'firmware update' 162.158.155.26 09:28, 5 March 2018 (UTC)

I would say that it's easier to fool current AI than humans ... except in a "quick reaction needed" scenario. If you throw a cardboard cutout of a pedestrian onto the road, the AI will be fooled because it can't recognize that it's not human, and will crash. A human will crash as well, because while they will eventually realize it's a cutout, it would be too late. -- Hkmaly (talk) 23:35, 21 February 2018 (UTC)

A creative attacker could put up a sign with CAPTCHA-like text that would be readable by humans but not by an AI.

Except that both humans and AIs would disregard it as not being a real sign. In fact, this would be more likely to be successful as an attack against humans, who might at least be distracted by going "what's that?", and end up crashing as a result. The AI would just completely ignore it. 162.158.155.26 11:56, 22 February 2018 (UTC)

One of the most ingrained features in humans is to always drive on the correct side of the road

Seems like rather a big claim there... 162.158.155.26 15:03, 22 February 2018 (UTC)

It still says "muderers"[sic] ... maybe it isn't just a misspelling? 162.158.126.106 22:59, 11 March 2018 (UTC)

- One of my pet concerns regarding AI cars has been about those "repainted" lines often seen, especially around construction areas. With a bit of light shining in the wrong direction, or some rain, as a human driver you need to be VERY aware of what is going on, and be able to disregard those "ghost" lines even though they look real. I am sure that any AI of decent quality does likewise, i.e., takes multiple data points regarding what is really going on, not just the lines themselves. However, and to the point of these comments, I would suspect that, such lines being "so real", and therefore an authentic risk, they probably would result in human drivers crashing. Does that happen? Is accident data searchable for this kind of thing? Note that, if that were the case, there would be liability consequences, and the construction company involved should, probably, have to pay a bundle; following up that kind of chain of responsibility would be rather simple [citation needed], and, after this happens a few times, road construction companies would get the message and be very careful about said ghost lines. But ghost lines still exist. Thus, either they pose no Real Life hazard, or showing liability is not so plain, or...

Some of our colleague commentards here seem to imply that AI would not be worse than humans, and that somehow humans are generally safe, and thus all is essentially OK?

With all due respect, humans are not safe drivers, not in any sense that would put a sane person's mind at ease regarding going out of the house, as accurately explained on [XKCD/1990]. What is going on, as has been pretty well established (see recent events, June 2020), is that we appear to have become inured to car accidents (inured: don't care). Some people have been pointing at this when trying to demonstrate that the covid-19 crisis is of no real import. Apparently (I personally don't know and only marginally care about any mathematical difference, in my mind both are terrible) the deaths from car accidents are significantly higher than those, at this date, by covid-19. Their argument being that we should not care so much about covid-19, because we don't (seem to) care about car accident deaths. My argument being, supported by the title text in XKCD/1990, and [this link in the comments there], that AI driven cars will win, not because they are that much safer, or the same as humans, but because courts and the general public will happily accept the resulting deaths as part of the way things are, as they do with human-caused road mayhem. Whatever. Anyway, as to the State of the Art in 2018, AI cars still get fiercely confused by rain and snow [see pictures and comment here], which would explain why so much testing has moved to Arizona... Yamaplos (talk) 13:55, 14 June 2020 (UTC)
