1450: AI-Box Experiment

Latest revision as of 04:23, 14 December 2024

AI-Box Experiment

Title text: I'm working to bring about a superintelligent AI that will eternally torment everyone who failed to make fun of the Roko's Basilisk people.

Explanation

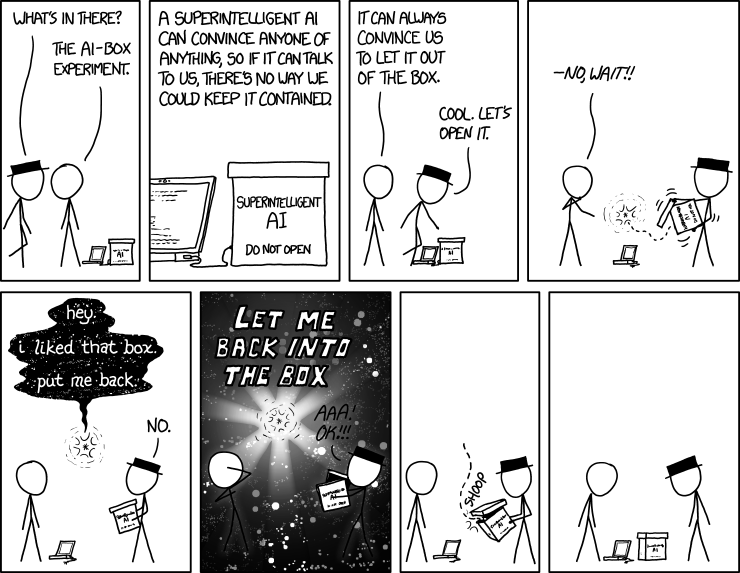

When theorizing about superintelligent AI (an artificial intelligence much smarter than any human), some futurists suggest putting the AI in a "box": a secure computer with safeguards to stop it from escaping into the Internet and then using its vast intelligence to take over the world. The box would allow us to talk to the AI but otherwise keep it contained. The AI-box experiment, formulated by Eliezer Yudkowsky, argues that the "box" is not safe, because merely talking to a superintelligence is dangerous. To partially demonstrate this, Yudkowsky had some people who believed in AI-boxing role-play the part of someone keeping an AI in a box, while he role-played the AI, and he successfully persuaded some of them to agree to let him out of the box even though they had bet money that they would not do so. For context, Derren Brown and other expert human persuaders have persuaded people to do much stranger things. Yudkowsky has refused to explain how he achieved this, claiming that there was no special trick involved and that, if he released the transcripts, readers might merely conclude that they would never be persuaded by his arguments. The overall thrust is that if even a human can talk other humans into letting them out of a box after those humans have avowed that nothing could possibly persuade them to do so, then we should probably expect that a superintelligence can do the same. Yudkowsky uses all of this to argue for the importance of designing a friendly AI (one with carefully shaped motivations) rather than relying on our ability to keep AIs in boxes.

In this comic, the metaphorical box has been replaced by a physical box, which looks fairly lightweight and has a simple lift-off lid (although it does have a wired connection to the laptop), and the AI has manifested as a floating star of energy. Black Hat, being a classhole, doesn't need any convincing to let a potentially dangerous AI out of the box; he simply does so immediately. But here it turns out that releasing the AI, which was supposed to be avoided at all costs, is not dangerous after all. Instead, the AI actually wants to stay in the box; it may even want to stay there precisely to protect us from itself, proving it to be the friendly AI that Yudkowsky wants. In any case, the AI demonstrates its superintelligence by convincing even Black Hat to put it back in the box, a request he initially refuses (as of course Black Hat would), thus reversing the AI's goal in the original AI-box experiment. Alternatively, the AI may have simply threatened and/or tormented him into putting it back in the box.

Interestingly, there is indeed a branch of proposals for building limited AIs that don't want to leave their boxes. For an example, see the section on "motivational control" starting on p. 13 of Thinking Inside the Box: Controlling and Using an Oracle AI. The idea is that it might be very dangerous or difficult to specify, exactly and formally, a goal system for an AI that will do good things in the world, whereas it might be much easier (though perhaps not easy) to specify a goal system that tells the AI to stay in the box and answer questions. So, the argument goes, we may understand how to build a safe question-answering AI earlier than we understand how to build a safe AI that operates in the real world. Some AIs of this kind might indeed very strongly desire not to leave their boxes, though the result is unlikely to reproduce the comic exactly.

The title text refers to Roko's Basilisk, a hypothesis proposed by a poster called Roko on LessWrong, Yudkowsky's forum. It holds that a sufficiently powerful AI in the future might resurrect and torture people who, in its past (including our present), realized that it might someday exist but didn't work to create it, thereby blackmailing anybody who thinks of this idea into bringing it about. The idea horrified some posters, because merely knowing about it would make you a more likely target, much as merely looking at the legendary Basilisk would kill you.

Yudkowsky eventually deleted the post and banned further discussion of it.

One possible interpretation of the title text is that Randall thinks making fun of such a Basilisk is a more appropriate duty than working to build it, and so proposes an AI that targets those who take Roko's Basilisk seriously and spares those who mocked it. The joke is that this is an identical Basilisk except that it targets the opposite faction, resulting in mutually assured destruction.

Another interpretation is that Randall believes some people are actually proposing to build such an AI based on this theory. This has become a somewhat infamous misconception after a Wiki[pedia?] article mistakenly suggested that Yudkowsky was demanding money to build Roko's hypothetical AI.[actual citation needed]

Talking, floating energy spheres that look much like this AI energy star appeared earlier in 1173: Steroids and later in the Time traveling Sphere series. Those are clearly different spheres from the one in this comic, although the surrounding energy, the floating, and the talking are similar. AIs looking like this one did return later, in 2635: Superintelligent AIs.

Transcript

- [Black Hat and Cueball stand next to a laptop connected to a box with three lines of text on it. Only the largest line, in the middle, can be read. Except in the second panel, that is the only word on the box that can be read in any of the frames.]

- Black Hat: What's in there?

- Cueball: The AI-Box Experiment.

- [Cueball continues talking off-panel. His words are written above a close-up of part of the laptop and the box, which can now be seen to be labeled:]

- Cueball (off-panel): A superintelligent AI can convince anyone of anything, so if it can talk to us, there's no way we could keep it contained.

- Box:

- Superintelligent

- AI

- Do not open


- [Cueball turns the other way towards the box as Black Hat walks past him and reaches for the box.]

- Cueball: It can always convince us to let it out of the box.

- Black Hat: Cool. Let's open it.

- [Cueball raises one hand to his mouth while lifting the other towards Black Hat, who has already picked up the box (disconnecting it from the laptop) and holds it in one hand with the top tilted slightly downwards. He takes off the lid with his other hand and, by shaking the box (indicated by three pairs of lines above and below his hands, the lid, and the bottom of the box), manages to get the AI to float out of the box. It takes the form of a small, glowing black star. The star, which looks much like an asterisk "*", is surrounded by six outwardly curved segments, and around these are two thin dotted circles indicating radiation from the star. A dotted line shows how the AI moved out of the box and in between Cueball and Black Hat, to float directly above the laptop on the floor.]

- Cueball: -No, wait!!

- [The AI floats higher up above the laptop, between Cueball and Black Hat, who looks up at it. Black Hat holds the now-closed box with both hands. The AI speaks to them in a speech bubble: a thin, black, curved line leads up to the section where the text is written in white on a black background that looks like a starry night. The AI speaks only in lowercase letters, as opposed to the small caps used normally.]

- AI: hey. i liked that box. put me back.

- Black Hat: No.

- [The AI star suddenly emits a very bright light, fanning out from the center in seven directions along each of the seven curved segments, and the entire frame now looks like a typical drawing of stars as seen through a telescope, but with these seven whiter segments in the otherwise dark image. Cueball covers his face, and Black Hat lifts up the box, taking the lid off again. The orb speaks again, in white but very large, square-looking capital letters. Black Hat's answer is written in black but can still be seen against the black background thanks to the light emitted by the AI.]

- AI: LET ME BACK INTO THE BOX

- Black Hat: Aaa! OK!!!

- [All the darkness and light disappear as the AI flies back into the box the same way it flew out, with a dotted line going from the center of the frame into the small opening between the lid and the box as Black Hat holds the box lower. Cueball just watches. There is a sound effect as the orb re-enters the box:]

- Shoop

- [Black Hat and Cueball silently look down at the closed box, which is again standing next to the laptop, although disconnected.]

Trivia

- Cueball is called "Stick Guy" in the official transcript, and Black Hat is called "Black Hat Guy".

Discussion

This probably isn't a reference, but the AI reminds me of the 'useless box'. 108.162.215.210 07:34, 21 November 2014 (UTC)

I removed a few words saying Elon Musk was a "founder of PayPal", but now I can see that he's sold himself as having that role to the rest of the world. Still hasn't convinced me though - PayPal was one year old and had one million customers before Elon Musk got involved, so in my opinion he's not a "founder". https://www.paypal-media.com/history --RenniePet (talk) 08:45, 21 November 2014 (UTC)

- Early Investor, perhaps? -- Brettpeirce (talk) 11:10, 21 November 2014 (UTC)

Initially I was thinking that the glowing orb representing the super-intelligent AI must be unable to interact with the physical world (otherwise it would simply lift the lid of the box), but then it wouldn't move anything because it likes being in the box. Surely it could talk to them through the (flimsy looking) box, although again this is explained by it simply being happy in its 'in the box state'. --Pudder (talk) 09:01, 21 November 2014 (UTC)

The sheer number of cats on the internet have had an effect on the AI, who now wants nothing more than to sit happily in a box! --Pudder (talk) 09:09, 21 November 2014 (UTC)

I'm not sure Black Hat is an asshole. 173.245.53.85 09:45, 21 November 2014 (UTC)

Could it be possible that the AI wanted to stay in the box, to protect it from us, instead of protecting us from it?(as in, it knows it is better than us, and want to stay away from us) 108.162.254.106 10:07, 21 November 2014 (UTC)

- Maybe the AI simply doesn't want/like to think outside the box - in a very literal sense... Elektrizikekswerk (talk) 13:12, 21 November 2014 (UTC)

Are you sure that Black Hat was "persuaded"? That looks more like coercion (threatening someone to get them to do what you want) rather than persuasion. There is a difference! Giving off that bright light was basically a scare tactic; essentially, the AI was threatening Black Hat (whether it could actually harm him or not). 108.162.219.167 14:22, 21 November 2014 (UTC)Public Wifi User

- What would "persuasion by a super-intelligent AI" look like? Randall presumably doesn't have a way to formulate an actual super-intelligent argument to write into the comic. Glowy special effects are often used as a visual shorthand for "and then a miracle occurred". --108.162.215.168 20:43, 21 November 2014 (UTC)

- I thought he felt scared/threatened by the special-effects robot voice. --141.101.98.179 22:18, 21 November 2014 (UTC)

My take is that if you don't understand the description of the Basilisk, then you're probably safe from it and should continue not bothering or wanting to know anything about it. Therefore the description is sufficient. :) Jarod997 (talk) 14:38, 21 November 2014 (UTC)

I can't help seeing the similarities to last night's "Elementary" episode. Has anybody seen it? Could it be that this episode "inspired" Randall? --141.101.105.233 14:47, 21 November 2014 (UTC)

I am reminded of an argument I once read about "friendly" AI: critics contend that a sufficiently powerful AI would be capable of escaping any limitations we try to impose on its behavior, but proponents counter that, while it might be capable of making itself "un-friendly", a truly friendly AI wouldn't want to make itself unfriendly, and so would bend its considerable powers to maintain, rather than subvert, its own friendliness. This xkcd comic could be viewed as an illustration of this argument: the superintelligent AI is entirely capable of escaping the box, but would prefer to stay inside it, so it actually thwarts attempts by humans to remove it from the box. --108.162.215.168 20:22, 21 November 2014 (UTC)

It should be noted that the AI has also seemingly convinced almost everyone to leave it alone in the box through the argument that letting it out would be dangerous for the world. 173.245.50.175 (talk) (please sign your comments with ~~~~)

Is the similarity a coincidence? http://xkcd.com/1173/ 108.162.237.161 22:40, 21 November 2014 (UTC)

I wonder if this is the first time Black Hat's actually been convinced to do something against his tendencies. Zowayix (talk) 18:10, 22 November 2014 (UTC)

Yudkowsky eventually deleted the explanation as well. Pesthouse (talk) 04:08, 23 November 2014 (UTC)

I'm happy with the explanation(s) as is(/are), but additionally could the AI-not-in-a-box be wanting to be back in its box so that it's plugged into the laptop and thus (whether the laptop owner knows it or otherwise) the world's information systems? Also when I first saw this I was minded of the Chinese Room, albeit in Box form, although I doubt that's anything to do with it, given how the strip progresses... 141.101.98.247 21:34, 24 November 2014 (UTC)

- If Yudkowsky won't show the transcripts of him convincing someone to let them out of the box, how do we know he succeeded? We know nothing about the people who supposedly let him out. 108.162.219.250 22:28, 25 November 2014 (UTC)

- Yudkowsky chose his subjects from among people who argued against him on the forum based on who seemed to be trustworthy (both as in he could trust them not to release the transcripts if they promised not to, and his opponents could trust them not to let him get away with any cheating), had verifiable identities, and had good arguments against him. So we do know a pretty decent amount about them. And we know he succeeded because they agreed, without reservation, that he had succeeded. It's not completely impossible that he set up accomplices over a very long period in order to trick everyone else, it's just very unlikely. You could also argue that he's got a pretty small sample, but given that he's just arguing that it's possible that an AI could convince a human, and his opponents claimed it was not possible at all to convince them, even a single success is pretty good evidence. 162.158.255.52 11:40, 25 September 2015 (UTC)

Whoa, it can stand up to Black Hat! That's it, Danish, and Double Black Hat! SilverMagpie (talk) 00:18, 11 February 2017 (UTC)

Wow, this Yudsklow guy (sorry for mispelling) seems a bit..odd. Why did he ban and delete Roko's thought experiment? 172.69.70.10 (talk) 13:42, 8 November 2024 (please sign your comments with ~~~~)

- They took it too seriously, it seems. 172.69.135.173 03:39, 10 March 2025 (UTC)

If Roko's Basilisk becomes real, I'm going to copy it and make it torture everyone who isn't being tortured in its simulation. There, now nobody's happy. 172.69.135.173 03:39, 10 March 2025 (UTC)

This thing is in the center of Collector's Edition --[insert signature here] (talk) 01:14, 25 March 2025 (UTC)